Continuous deployment is when every commit that passes your tests is automatically deployed to production. While related practices like test-driven development, trunk-based development, continuous integration and continuous delivery have significant mindshare, comparatively few teams take the final step to remove all manual gates to production.

This article is for teams who are familiar with continuous delivery and want to know what it takes to adopt full continuous deployment: how to do it without breaking production, and with it the trust of your stakeholders and your users.

This approach shows how to perform safe and incremental releases during everyday story work, which reconciles the ‘one-commit = one-deploy’ developer workflow with the complexities of distributed systems. I have used it with agile teams comprising developers of mixed experience levels, working on high-value, high-traffic software projects over a sustained period. It is a collection of existing concepts and practices, but structured specifically around the challenges of continuous deployment.

But why should we send every commit to production?

It seems scary, but the one-commit = one-deploy workflow can improve team productivity in several ways:

Immediate integration. There is rapid feedback on how changes integrate with other team members’ code, real production load, data, user behavior and third-party systems. This means when a task is done it is really done and proven to work.

Smaller changes. Each individual deployment becomes less risky because it contains fewer lines of code. If problems arise, it’s easy to trace them back to the specific change that caused them.

Developer friendliness. Version history is straightforward, with no time wasted guessing which commits are in which branch, resolving complex merges, or tracking which changes are “done” but not yet in production.

A mindset shift

Contracts between distributed components

We are used to thinking about contracts between our system and the outside world. These contracts include expectations about the format of data exchanged, and also the behavior of the interacting systems. For example, our service might rely on a third-party system to send emails. Every time a change is made to that third-party service its developers need to consider the impact on us and any other consumers. If they change the format of the requests their service accepts it could affect our integration. If they keep the format the same but change how it works, for example batching up emails rather than sending them right away, this could also affect us.

This type of contract has been discussed extensively in the literature, and there are well-established practices around it, such as consumer-driven contracts and contract testing. After all, when different teams need to collaborate, whether with each other or with a vendor, a lot of back and forth is required within the organization, which makes the changes more visible.

However, we don't always think about the contracts that exist between the different components of our service itself, as they’re usually managed silently within the team owning the service. As soon as there is inter-process communication there is also a contract. This includes interactions between databases and application servers and between application servers and client-side JavaScript, different backend systems talking to each other through messaging or events, different applications sharing a persistence layer, even different processes on the same machine talking to each other through sockets or signals.

These internal contracts are relevant to any system where you can’t upgrade all the parts simultaneously, which means pretty much every modern software system. While you might ignore a few minutes of intermittent 500 errors or failed transactions on a system you deploy once a week, that’s almost impossible when every developer triggers multiple deployments a day.

That’s why upgrades to systems connected by a contract need to be heavily orchestrated, a task that is difficult enough — and risks being disrupted completely by continuous deployment if we don’t change the way we think about our workflow. Next, we will explore how this orchestration takes place with a gate to production, and the implications of that gate being removed.

Before: Orchestrating order of deployment

In my experience, when deployment to production does not happen every commit, dependencies between producers and consumers are usually managed by manually releasing each system's changes in the correct order, or by automatically redeploying and restarting the whole stack. This means that anyone who picks up a task can mindlessly start working on whichever codebase they wish, as all of their changes will wait to be deployed correctly by humans clicking buttons.

In other words, code changes go live in their order of deployment.

Now: Orchestrating order of development

Now imagine that the gate to production (and the human) have been removed. Suddenly changes don't stop in a staging environment: they are deployed immediately.

In other words, features go live in their order of development.

The same developers thoughtlessly deciding to start from one place or another would now release a UI change that has no business being in production before the API is finished. The only way to avoid these situations (without extra overhead) is to make sure that the order of development is the same order in which code changes should be released.

This is a substantial change from the past: developers used to be able to pick a new task and just start somewhere, maybe on the codebase they’re most comfortable with or with the first change that comes to mind. Now it's not enough to know in advance which components need changing and how; every code change going live also needs to be deliberately planned.

In the rest of the article I want to elaborate on some tools and techniques that reduce this cognitive load: they can be used to bypass or simplify planning the order of development in advance. I would like, in particular, to propose a framework that allows us to approach changes differently depending on their nature: adding new features and refactoring existing code.

Adding new features

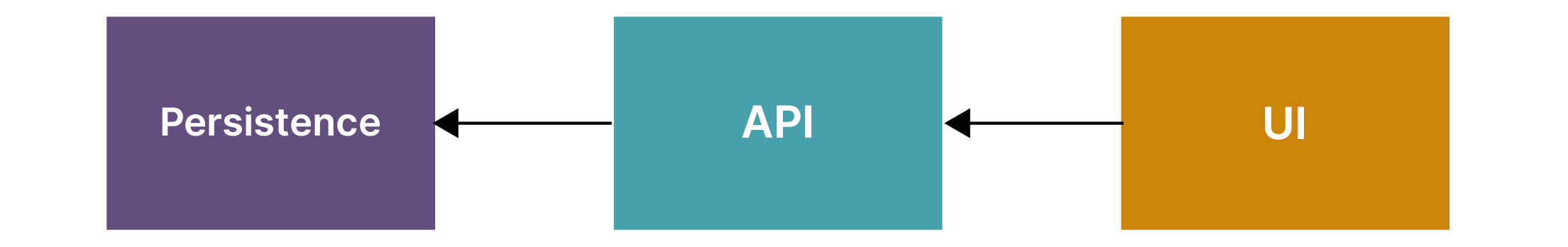

Firstly, we will cover the case where a new feature is added: perhaps something that requires a JavaScript front end to trigger a call to a server-side endpoint, which in turn persists user data to a database.

How do we get there without breaking production?

Without continuous deployment we might simply start implementing from any one of the three layers and work in no particular order. But, as we saw before, now that everything goes to production immediately, we need to pay more attention. If we want to avoid exposing broken functionality, we need to start with the producer systems and gradually move up towards the interface.

This allows every consumer to rely on the component underneath, with the UI eventually being released on top of a fully working system, ready for customers to start using.
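Working out this order amounts to sorting components so that every producer comes before its consumers, in other words a topological sort. A minimal Python sketch (3.9+, standard-library `graphlib`); the component names and the dependency map are hypothetical stand-ins for the three layers in this example:

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical dependency map for the example feature: each consumer
# maps to the set of producers it relies on.
DEPENDENCIES = {
    "ui": {"api"},        # client-side JavaScript calls the endpoint
    "api": {"database"},  # the endpoint persists user data
    "database": set(),    # the schema relies on no other component
}


def release_order(dependencies):
    """Return components so that every producer comes before its consumers."""
    return list(TopologicalSorter(dependencies).static_order())


print(release_order(DEPENDENCIES))  # ['database', 'api', 'ui']
```

With more components, several may become ready at the same time, and those can be developed in any order relative to each other. `static_order()` raises `graphlib.CycleError` when there is a cycle, that is, when no order satisfies every dependency.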

The approach above certainly works. But is it necessarily the optimal way to approach new features? What if we preferred to work another way?

Outside-in

Many developers prefer to approach the application from the outside in when developing something new. This is especially true if they're practicing "outside-in" TDD and would love to write a failing end-to-end test first. It's beyond the scope of this blog post to explain all of the benefits of this practice but, to keep it simple, instead of developing from "inside" the application stack (i.e. persistence), you approach it in slices, starting from the outside (i.e. UI).

Starting with the layers visible to the user allows for early validation of the business requirements: if it is unclear what the visible effects of the feature should be from the outside, this step will reveal it immediately. Starting development becomes the last responsible moment for challenging badly written user stories.

The API of each layer is directly driven by its client (the layer above it), which makes designing each layer much simpler and less speculative. This reduces the risk of having to re-work components because we did not foresee how they would be invoked, or to add functionality that will end up not being used.

It also works well with the "mockist" (or London school) approach to TDD, which implements one component at a time, mocking all of its collaborators.
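As a rough illustration in Python, assume a hypothetical `SaveProfileHandler` as the outermost layer and a profile API beneath it that has not been written yet. A mockist test drives the handler's design and, at the same time, pins down what it needs from the layer below:

```python
import unittest
from unittest.mock import Mock


class SaveProfileHandler:
    """Outermost layer, written first. Its collaborator is injected."""

    def __init__(self, profile_api):
        # The real profile API does not exist yet; the test drives its shape.
        self.profile_api = profile_api

    def handle(self, form: dict) -> str:
        # Decide here what the layer below should be asked to do.
        self.profile_api.save_profile(name=form["name"].strip())
        return "Profile saved"


class SaveProfileHandlerTest(unittest.TestCase):
    def test_saves_trimmed_name_through_profile_api(self):
        profile_api = Mock()  # stands in for the not-yet-written layer
        handler = SaveProfileHandler(profile_api)

        self.assertEqual(handler.handle({"name": "  Ada  "}), "Profile saved")
        # This expectation becomes the contract for the next layer down.
        profile_api.save_profile.assert_called_once_with(name="Ada")
```

Run it with a test runner such as `python -m unittest`. Once it passes, the mocked call `save_profile(name=...)` is the specification for the next component to implement.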

However, this would imply that the ideal order for development is exactly the opposite of the order needed to not break the application!

In the following section, we will see a technique we can use to resolve this conflict.

Technique: Feature toggles

Feature toggles are a technique often mentioned together with continuous delivery. To quote Pete Hodgson, a feature toggle is a flag that allows us to "ship alternative code paths within one deployable unit and choose between them at runtime".

In other words, they are switches whose values you can use to decide whether to execute one branch of behavior or another. Their value usually comes from some state external to the application, so that they can be changed without a new deployment.

With continuous deployment, we can use feature toggles to decouple the order of changes needed to not break contracts from the order in which we want to develop. This is achieved by using a feature toggle to hide incomplete functionality from the users, even if its code is in production.
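A minimal Python sketch of hiding an unfinished UI behind a toggle. `ToggleStore` and `render_profile_page` are hypothetical; in a real system the store would typically be backed by a configuration service or database read at request time. Unknown toggles default to off, so code that ships before its toggle is configured stays hidden:

```python
class ToggleStore:
    """Hypothetical stand-in for toggle state kept outside the application.

    In a real system this would be read from a config service or database
    at request time, so that flipping a toggle needs no deployment.
    """

    def __init__(self):
        self._values = {}

    def set(self, name: str, enabled: bool) -> None:
        self._values[name] = enabled

    def is_enabled(self, name: str) -> bool:
        # Unknown toggles default to off: unfinished code stays hidden.
        return self._values.get(name, False)


def render_profile_page(toggles: ToggleStore, user_name: str) -> str:
    page = f"<h1>Hello, {user_name}</h1>"
    # The new, still incomplete editing form is already in production,
    # but users only see it once the toggle is switched on.
    if toggles.is_enabled("profile_editing"):
        page += '<form action="/profile"><input name="name"></form>'
    return page


toggles = ToggleStore()
print(render_profile_page(toggles, "Ada"))  # no form yet
toggles.set("profile_editing", True)
print(render_profile_page(toggles, "Ada"))  # form now visible
```

The UI can be committed first, even though the endpoint behind `/profile` may not exist yet, because nobody can reach it until the toggle is turned on.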

The trade-offs of feature toggles

As we saw, a new feature can be added under a feature toggle, approaching the application from the outside in. This creates more overhead for developers as they have to manage the lifecycle of the toggle, and remember to clean it up once the feature is consolidated in production. However, it also frees them from having to worry about when to commit each component. And it allows them to enable a feature in production independently of a deployment. Some brave teams even allow their stakeholders to enable toggles by themselves.

Refactoring across components

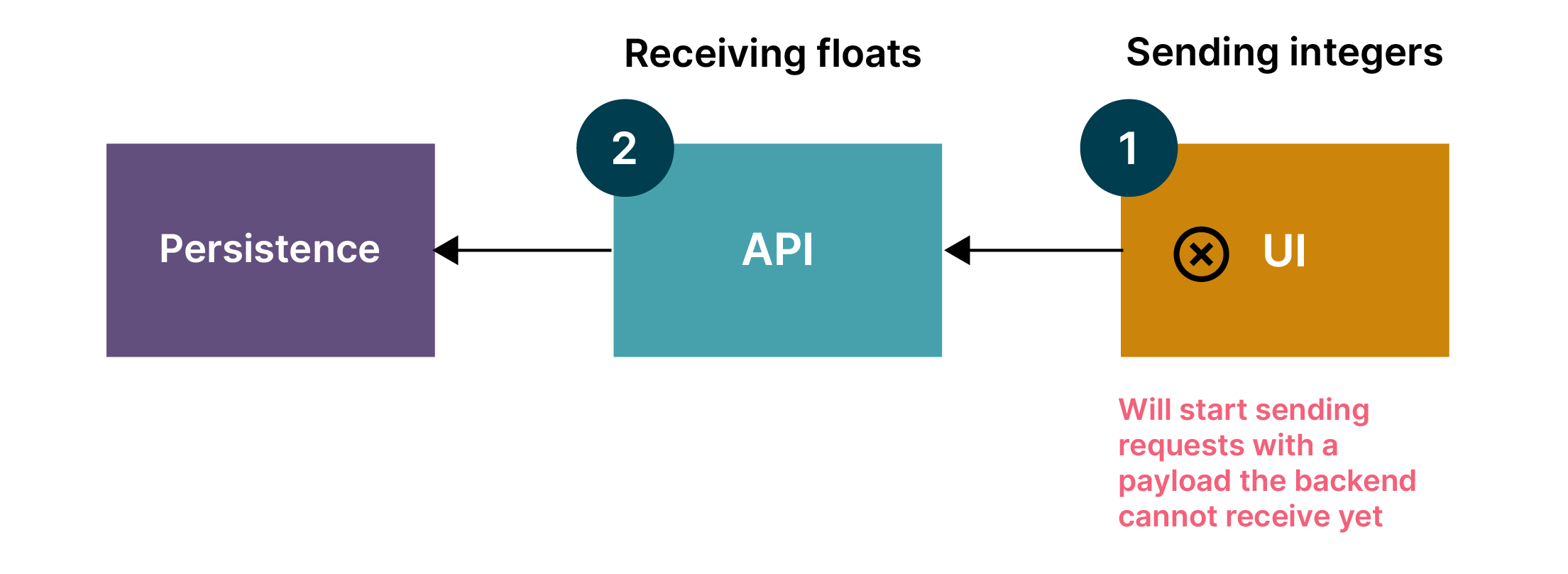

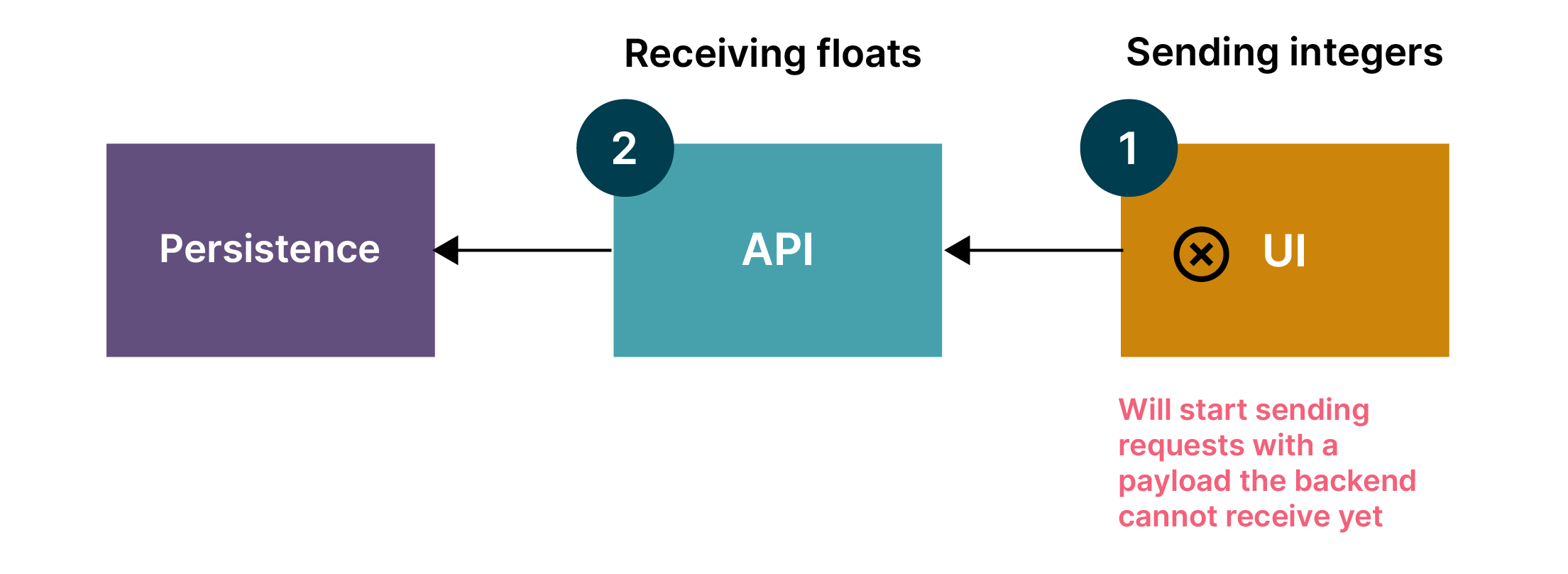

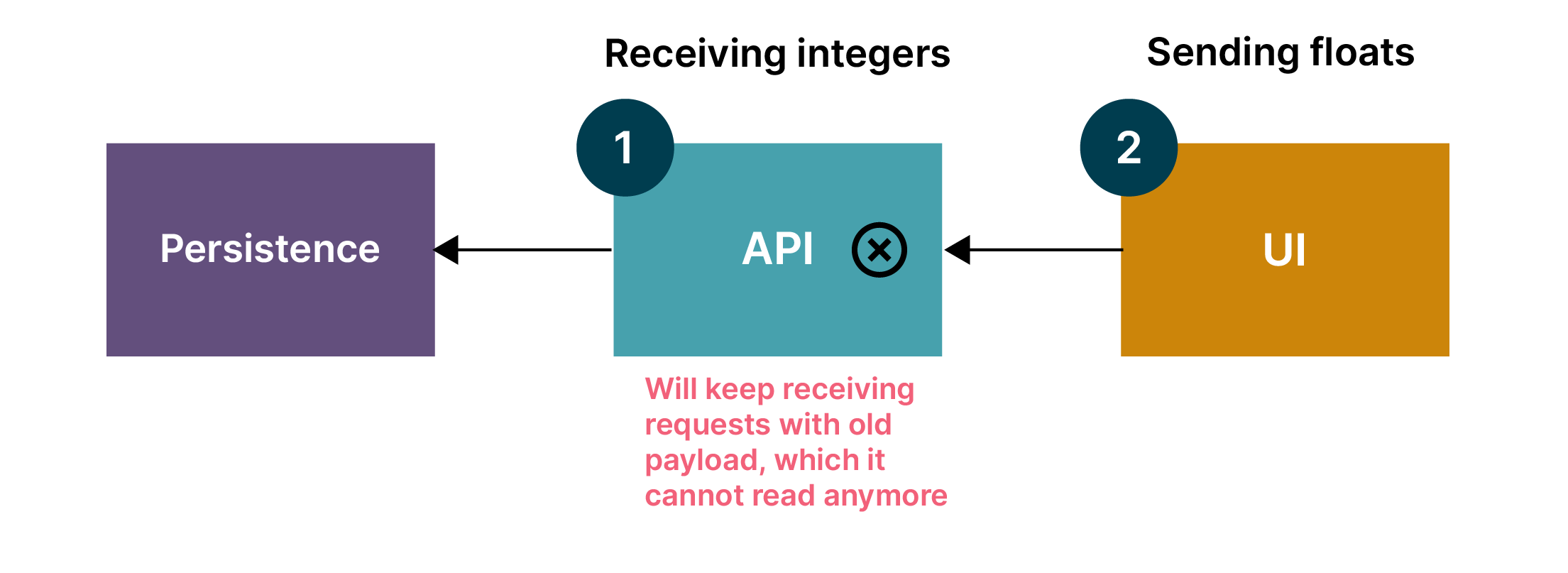

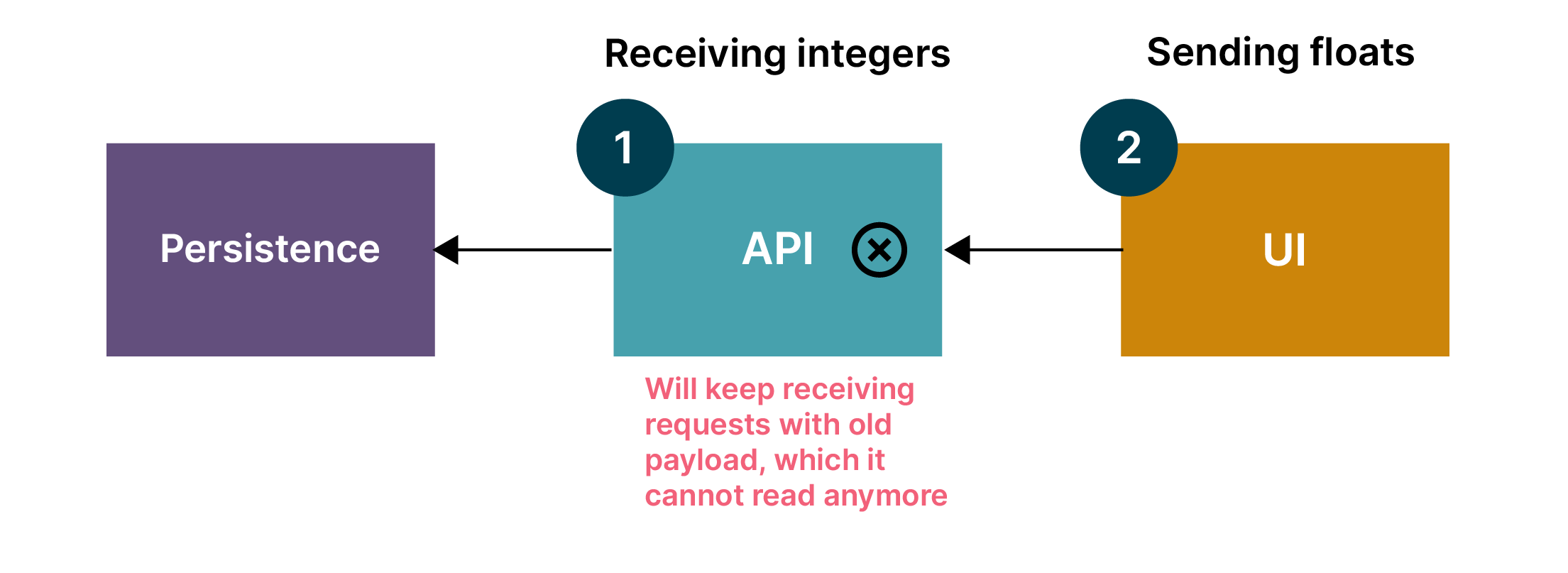

Now we will cover the case where we improve the internal structure of our system without making changes that are visible to users or anyone else outside our team. The case where a change is entirely internal to a component presents no special challenges (there are no contracts involved), so we will focus on coordinated changes across multiple components, for example if we choose to restructure an endpoint that client-side JavaScript relies on to implement an existing feature.

How do we get there without breaking production?

This is different from the previous case, as there is no new feature that can be hidden from users. So, feature toggles are probably not the right approach here, as most of their benefits are diminished while their overhead remains.

We can then take a step back and ask ourselves whether there is an order in which we can release our changes without breaking anything.

Whichever order we choose, it is clear that the functionality will be broken in front of the users for some time unless we take special precautions to avoid that.

Technique: expand and contract

Expand and contract is a technique that allows changing the shape of a contract while preserving functionality, without even temporary feature degradation.

It is frequently mentioned in the context of code-level refactoring (changes within sets of classes or functions), but it’s even more useful in the context of whole systems depending on each other.

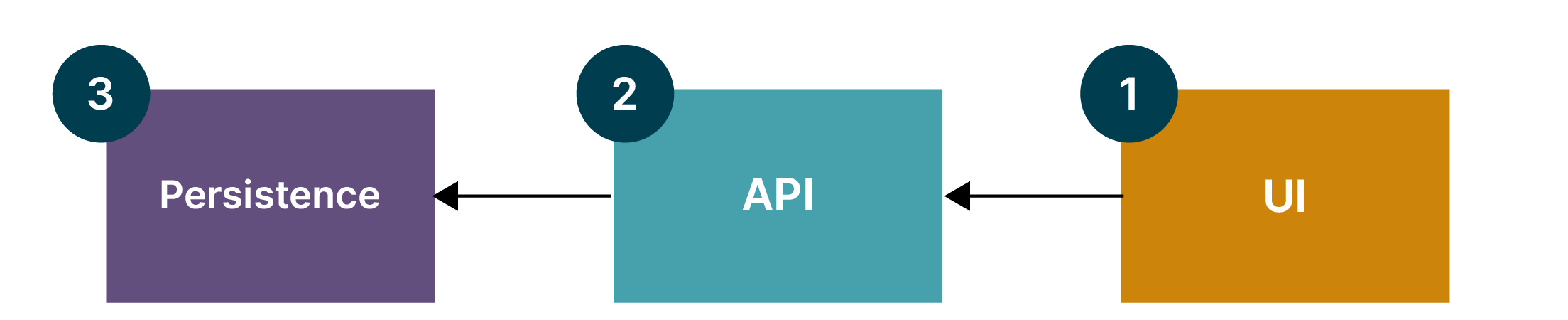

It consists of three steps:

Expand phase. In this phase, we create the new logic in the producer systems under a separate or expanded interface that their consumers can use, without removing or breaking the old one.

Transition phase. All consumers are migrated to the new interface.

Contract phase. Once all the consumers are using the new flow, the old one can finally be removed.

For example, this is how these steps might be applied to our example of an endpoint changing its contract:

Expand phase. We change the backend to accept both the new and old format. The controller can examine the incoming request, decode it appropriately and persist the results to the database using the original queries.

Transition phase. Now we change the front end to work with the new data format. That makes the server-side flow decoding the old format obsolete.

Contract phase. Once the front end code has been migrated to use the new format, we remove the old flow and boilerplate code from the API.
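A sketch of the expand phase in Python, assuming hypothetical request formats (old: a single `name` field; new: separate `first_name` and `last_name` fields) and an existing `profiles` table written by the original query. Only the decoding branches change; storage stays exactly as it was:

```python
import sqlite3


def decode_profile(payload: dict) -> str:
    """Expand phase: accept both the old and the new request format."""
    if "first_name" in payload and "last_name" in payload:
        # New (hypothetical) format: the name split into two fields.
        return f"{payload['first_name']} {payload['last_name']}"
    if "name" in payload:
        # Old format: a single field. Deleted in the contract phase.
        return payload["name"]
    raise ValueError("unrecognized profile payload")


def save_profile(conn: sqlite3.Connection, payload: dict) -> None:
    # Whatever the request format, persistence uses the original query.
    conn.execute("INSERT INTO profiles (name) VALUES (?)", (decode_profile(payload),))


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE profiles (name TEXT)")
save_profile(conn, {"name": "Ada Lovelace"})                        # old front end
save_profile(conn, {"first_name": "Grace", "last_name": "Hopper"})  # migrated one
print(conn.execute("SELECT name FROM profiles ORDER BY rowid").fetchall())
```

In the contract phase, the `name` branch of `decode_profile` is deleted once no client sends the old format any more.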

Inside-out

With the expand and contract approach, we have to start expansions with the producer systems and then migrate the consumers. This means we have to start with our innermost layers, working our way out to the ultimate client (UI code).

Notice that this is the opposite of the direction we took in the previous section.

The trade-offs of expand and contract

Existing features should be refactored with the expand and contract pattern, approached from the inside out. For day-to-day refactoring, this is preferable to feature toggles, which are costly and need to be cleaned up.

Of course, there might be some rare exceptions where the refactoring we’re performing is especially risky, and we’d like to still use a toggle to be able to switch the new flow off immediately or even run both versions in production and compare the results. Such situations, however, shouldn’t be the norm and the team should question whether it’s possible to take smaller steps whenever these situations arise.
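For those rare cases, running both versions and comparing them can look roughly like this Python sketch. `legacy_total` and `refactored_total` are hypothetical stand-ins for the old and new flows, and `compare_enabled` stands for a toggle read from external state. Users always get the legacy result, so a bug in the new flow shows up as a logged mismatch rather than a visible failure:

```python
import logging

logger = logging.getLogger("refactoring")


def legacy_total(prices):
    # Existing flow, still serving users.
    total = 0
    for price in prices:
        total += price
    return total


def refactored_total(prices):
    # New flow under evaluation.
    return sum(prices)


def total(prices, compare_enabled: bool):
    """Serve the legacy result; optionally run the new flow and compare."""
    result = legacy_total(prices)
    if compare_enabled:  # hypothetical toggle, switchable without a deploy
        try:
            candidate = refactored_total(prices)
            if candidate != result:
                logger.warning("mismatch: legacy=%r refactored=%r", result, candidate)
        except Exception:
            # The experimental path must never break the user-facing one.
            logger.exception("refactored flow failed")
    return result
```

Turning the toggle off stops the comparison immediately; once the logs show no mismatches, the new flow can replace the old one and the toggle can be removed.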

This example focused specifically on the contract between frontend and backend within our system, but the same pattern can be applied between any two distributed systems that need to alter the shape of their contract, whether they communicate synchronously or asynchronously.

However, special precautions should be taken when the exclusive job of one of those systems is to persist state, as we will see in the next section.

Avoiding data loss

The attentive reader might have noticed what a lucky coincidence it was that the persistence layer was missing from our previous refactoring example, and how tricky it could have been to deal with. But programmers in the wild are seldom that lucky. That layer was conveniently left out as it deserves its own section: we will now address the heart of that trickiness and see how to refactor the database layer without any data loss.

In this section we’ll explore what would have happened if our change had also included a requirement to migrate the data owned by the endpoint to a new format (for simplicity, we will assume our persistence layer is a relational database).

How do we get there without breaking production?

It might be tempting to simply apply the expand and contract pattern from the last section (we are refactoring existing functionality, after all). We could imagine the phases looking something like this:

Expand. Expand our schema by creating another column. Copy and convert all existing data to it in the same database evolution. The old clients still write to the old column and need to be migrated.

Transition. Migrate all clients to write to the new column.

Contract. Finally, remove the old column.

However, this will cause data loss! Old clients keep writing only to the old column until they are migrated, so any transactions they perform after the existing data has been copied will be missing a value in the new column.
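The gap can be reproduced with SQLite in a few lines of Python. The `users` table, its old `email` column and the new `email_normalized` column (a lowercased copy) are hypothetical, chosen only to make the conversion visible:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (email TEXT)")  # old column
conn.execute("INSERT INTO users (email) VALUES ('Ada@Example.com')")

# Expand: add the new column and copy/convert existing data in one migration.
conn.execute("ALTER TABLE users ADD COLUMN email_normalized TEXT")
conn.execute("UPDATE users SET email_normalized = lower(email)")

# A client that has not been migrated yet keeps writing the old column only.
conn.execute("INSERT INTO users (email) VALUES ('Grace@Example.com')")

rows = conn.execute("SELECT email, email_normalized FROM users ORDER BY rowid").fetchall()
print(rows)  # [('Ada@Example.com', 'ada@example.com'), ('Grace@Example.com', None)]
```

The second row was written after the copy, so its new column is empty, and removing the old column in the contract phase would lose that value.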

Technique: Database compatibility

In the book "Refactoring Databases", Scott W. Ambler and Pramod J. Sadalage suggest relying on a database trigger to deal with this scenario.

A trigger could indeed start synchronizing the old and new columns from the moment the new column is created. However, if, like this author, you're not exactly thrilled to be implementing important logic in SQL (and generally shiver at the thought of database triggers), you might find the next section more appealing.
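A rough SQLite version of the trigger approach, driven from Python, using a hypothetical `users` table whose old `email` column gains a lowercased `email_normalized` copy. These triggers only sync old to new; a complete migration would also need the reverse direction for clients that already write the new column. Trigger syntax and behavior differ between database engines, so treat this as a sketch:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE users (email TEXT);
    INSERT INTO users (email) VALUES ('Ada@Example.com');

    -- Expand: add the new column and copy/convert existing data.
    ALTER TABLE users ADD COLUMN email_normalized TEXT;
    UPDATE users SET email_normalized = lower(email);

    -- Keep the new column in sync for clients that only write `email`.
    CREATE TRIGGER users_sync_on_insert AFTER INSERT ON users
    WHEN NEW.email_normalized IS NULL
    BEGIN
        UPDATE users SET email_normalized = lower(NEW.email) WHERE rowid = NEW.rowid;
    END;

    CREATE TRIGGER users_sync_on_update AFTER UPDATE OF email ON users
    BEGIN
        UPDATE users SET email_normalized = lower(NEW.email) WHERE rowid = NEW.rowid;
    END;
""")

# An old client, unaware of the new column, still writes only `email`.
conn.execute("INSERT INTO users (email) VALUES ('Grace@Example.com')")
rows = conn.execute("SELECT email, email_normalized FROM users ORDER BY rowid").fetchall()
print(rows)  # [('Ada@Example.com', 'ada@example.com'), ('Grace@Example.com', 'grace@example.com')]
```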

Technique: Preemptive double write

We can make a small extension to the expand and contract pattern: before starting, we can change the application to attempt to write to both columns. The new column will not exist yet, but we will code the application in a way that tolerates a failure when writing to it. Then we can proceed with the steps we had originally planned:

Double write phase. Attempt to write to the new column, and if that fails due to it not existing yet, fall back to writing to the old column.

Expand phase. Expand our schema to add the new column. Copy and convert all existing data to it. Old clients still write to the old column and will need to be migrated.

Transition phase. Migrate all clients to write to the new column only, without the fallback.

Contract phase. Finally, remove the old column.

This ensures that as soon as the new column is created, data starts being written to it successfully, removing the gap we observed in the previous section.
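A minimal Python/SQLite sketch of the preemptive double write, using a hypothetical `users` table with an old `email` column and a new `email_normalized` column (a lowercased copy). It assumes SQLite's error for an unknown column contains `no column named`, and deliberately re-raises every other error so that only the expected failure is tolerated:

```python
import sqlite3


def save_user(conn: sqlite3.Connection, email: str) -> None:
    """Preemptive double write: write both columns, tolerate a missing new one."""
    try:
        conn.execute(
            "INSERT INTO users (email, email_normalized) VALUES (?, ?)",
            (email, email.lower()),
        )
    except sqlite3.OperationalError as error:
        # Before the expand migration runs, the new column does not exist.
        if "no column named email_normalized" not in str(error):
            raise  # any other failure is a real problem
        conn.execute("INSERT INTO users (email) VALUES (?)", (email,))


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (email TEXT)")

# Double write phase: deployed before the schema change, so it falls back.
save_user(conn, "Ada@Example.com")

# Expand phase: add the column and backfill only rows that predate it.
conn.execute("ALTER TABLE users ADD COLUMN email_normalized TEXT")
conn.execute("UPDATE users SET email_normalized = lower(email) WHERE email_normalized IS NULL")

# The same, unchanged code now fills both columns, leaving no gap.
save_user(conn, "Grace@Example.com")
print(conn.execute("SELECT email, email_normalized FROM users ORDER BY rowid").fetchall())
```

Because the double write is deployed before the migration, the backfill only has to handle rows written before the column existed; every row written afterwards already carries both values.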

The above is written from the perspective of a user of a relational database with an explicit schema. For NoSQL databases with implicit schemas (or other persistence mechanisms with few constraints, like filesystems), the double write can be implemented without fallback because the database will accept the new values immediately. It should be noted that some messaging systems can also act as a persistence layer, so most of the considerations in this section could be applied to them depending on your use case.

Editor’s note: while Valentina is correct to say that this article is largely a systematization of existing techniques, I’m not aware of preemptive double write having been previously described.

The trade-offs of the double-write technique

We can safely apply expand and contract to interactions with a database by using the preemptive double-write technique to avoid transitory data loss.

This might appear time-consuming, as it could take many releases to arrive at the final state. However, unless your system can be upgraded and its data migrated quickly enough to avoid any interruption to service, this dilemma necessarily exists. It’s not caused by continuous deployment, though continuous deployment brings it into focus and perhaps magnifies it.

Not all applications have this requirement. Check with your stakeholders to understand if some data loss or downtime would be acceptable to simplify development.

Principles for safe continuous deployment

We can summarize the approach in four principles for safely practicing continuous deployment:

Use feature toggles to add behavior

Use expand and contract to refactor across components

Use preemptive double write or database compatibility to avoid data loss

Plan your releases and order of development

Using these principles, I often create a development plan before starting any user story. Is the task about adding new functionality? Refactoring? Could it be split into different phases, maybe with the Preparatory Refactoring pattern? Knowing the answers to those questions enables me to map out the different steps of development (and therefore deployments) using the techniques described above, and to release safely every step of the way.

You don’t have to make a full story plan before every feature, of course. But if I had to leave the reader with just one thought, it would be this: when every commit goes to production, we cannot afford not to know the impact of our code at every step of its readiness lifecycle. We must spend time investigating or spiking out our changes to map out how we will send them live.

As amazing and liberating as continuous deployment might be compared to older ways of working, it also forces us to hold ourselves and our peers accountable to a higher standard of professionalism and deliberateness over the code and tests we check in. Our users are always just a few minutes away from the latest version of our code, after all.

If you’d like to see this system applied to an Animal Shelter Management System example, check out my XConf talk or long-form blog post on the same subject.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.