“There is only one way to eat an elephant: one bite at a time.”

When faced with an overwhelmingly complex task, it can be hard to know where to start. This saying, attributed to a variety of people, including Desmond Tutu, tells us to start where we can and break the challenge into bite-sized pieces, so that in time we can achieve what feels impossible.

Once you have reached the difficult conclusion that your mainframe is no longer serving the needs of your organization, the task ahead may feel like you need to “eat the elephant”.

Mainframe replacement is a dauntingly complex and costly exercise. Your current systems will have been built up over decades, will support a complex landscape and are likely to contain substantial technical debt and undocumented functions. A multi-year programme will be required. The environment around you will change as you execute. Your organization will need to transform to support modernized processes and systems. Legacy replacement programmes that are necessary in the medium to long term, but that are costly and long-running, struggle to produce ROI before the end of the programme. We see many false and stuttering starts, which are costly and delay benefit realization.

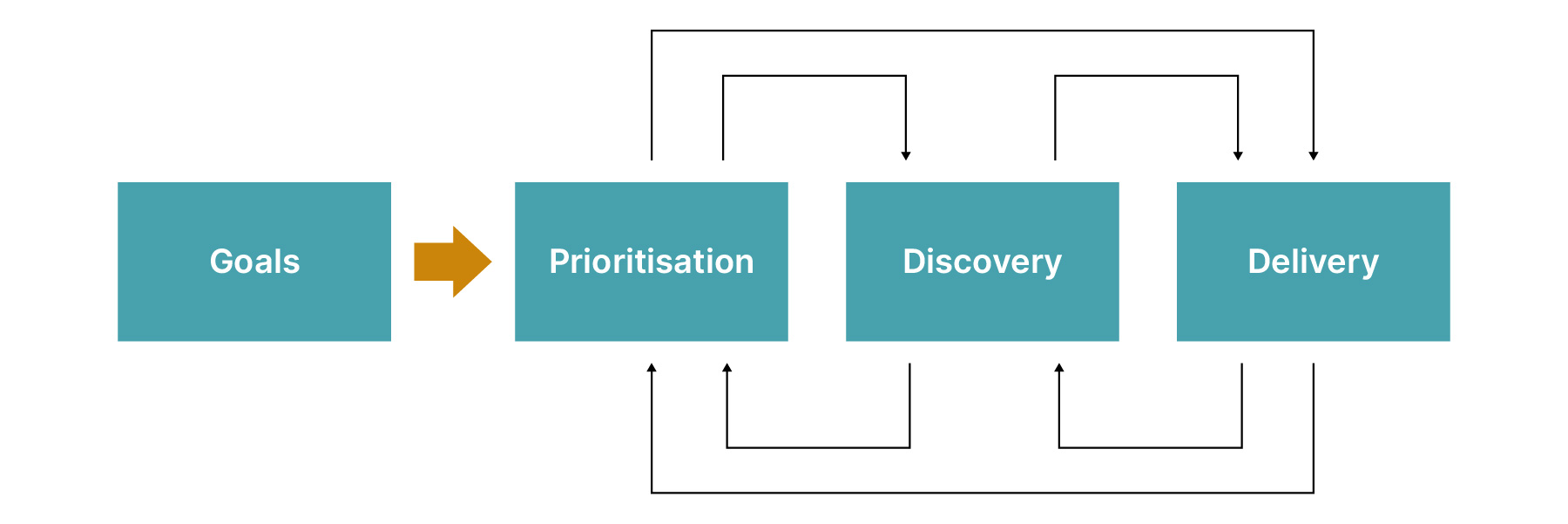

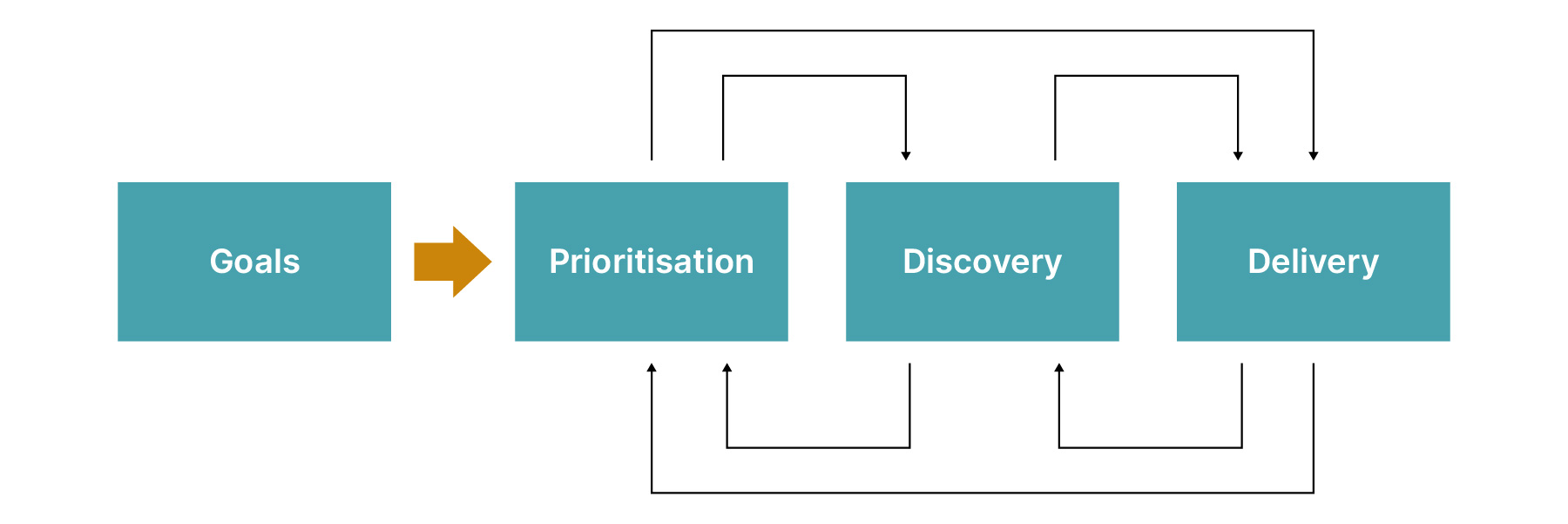

To manage the impact of inevitable business change throughout the duration of the programme, you need a vision that allows for changing priorities and pivots. An incremental roadmap tailored to this vision will reduce risk, build confidence and allow the release of value before the end of the programme, while still driving towards your future state. This will support wider buy-in from your organization and give you the data to build your business case.

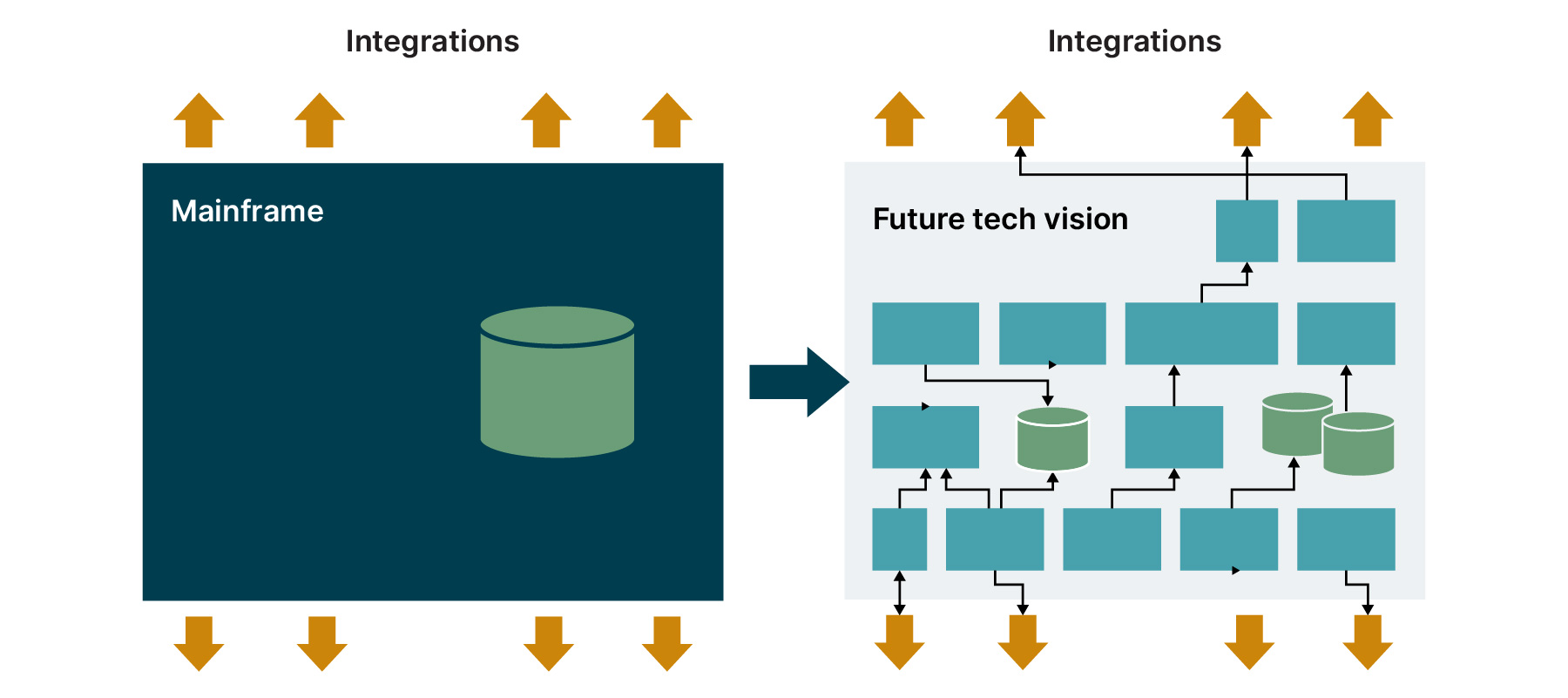

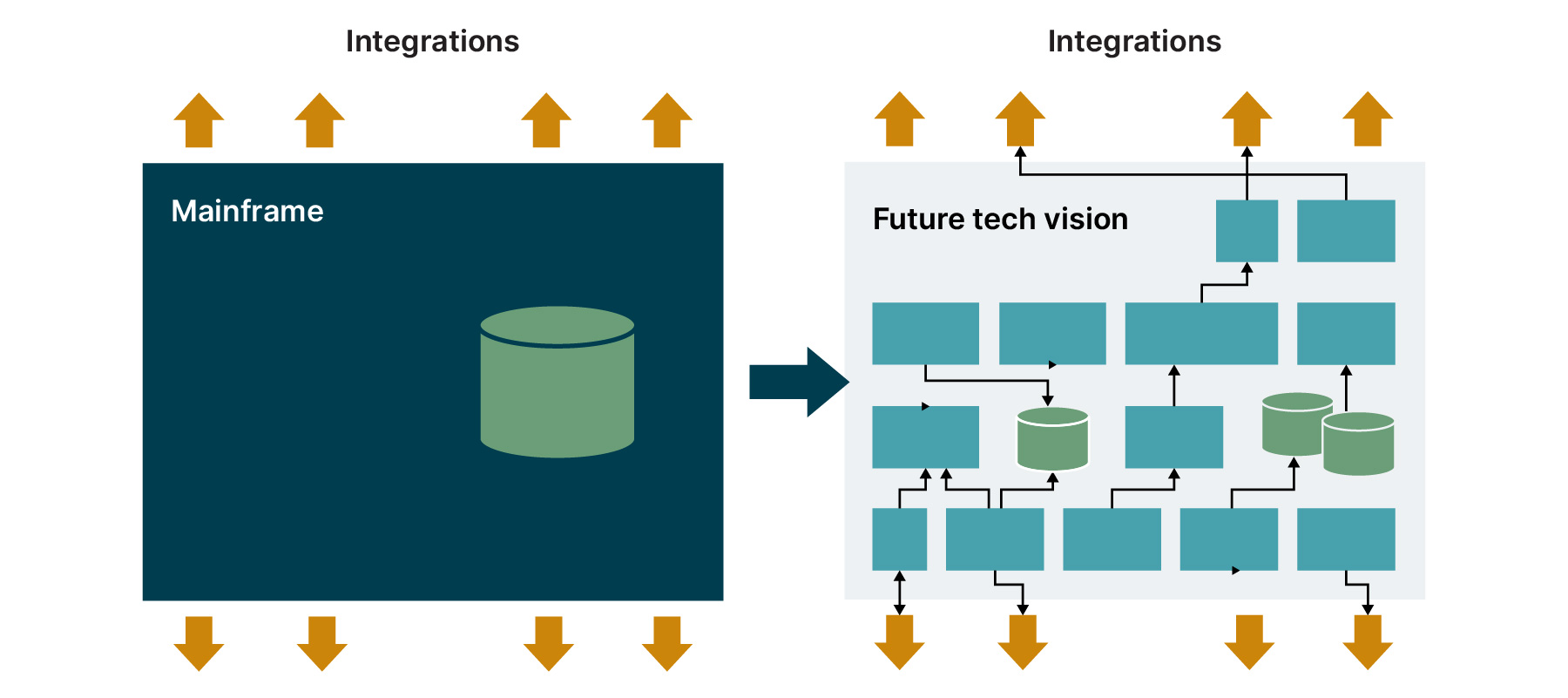

There are a number of strategies for mainframe modernization. These include rehosting, replatforming and rearchitecting. Whichever strategies you employ, replacing a complex, business-critical system will require a complex solution.

So what tools and strategies do you need to set your mainframe migration up for success? In this article we talk about techniques Thoughtworks have employed to build a flexible roadmap for incremental mainframe replacement, bringing risk forward and doing ‘just enough’ analysis without delaying getting started.

Prioritize goals and understand trade offs

What specifically must we achieve and how do we evolve this vision?

Factors driving change:

The decision to offload from your mainframe will be driven by a number of factors. These often include:

Mainframe and license costs rapidly increasing

Increasing cost to run

Employees with required skills nearing retirement

An inability to react to new business opportunities

Challenges with scaling or reliability

New features are either expensive or take time to implement

All these factors are important, but you must develop a unified view of their priority. Many areas of your organization will have been waiting years for their favored change. But if you try to fix everything for everyone, the cost of your migration will become prohibitive.

Goals:

You will have a number of goals for your replacement such as:

The new systems must provide all existing functionality for current customers

The new system must fix existing compliance issues

It must stop costly incidents

The performance must be as good as legacy (mainframes can be extremely fast)

It must enable new feature x that customers are waiting for

Cost to run must be x% of legacy cost to run

Trade offs:

You need to be prepared for difficult trade offs:

Which of the goals are negotiable and under what circumstances?

At what cost are they not worth implementing?

If a goal cannot be met should the whole programme be halted?

What happens when we uncover unexpected issues as the migration progresses?

Your prioritized goals should then be used to shape your plans. Priorities will change over time, but if we clearly articulate this change as it happens we can evaluate the impact of necessary pivots.

Prioritize discovery by risk

To cover a large surface area you need to target risk and avoid creating unnecessary detail.

When planning for your mainframe replacement you will need answers to these questions:

What is the architecture and transition approach?

How long will it take?

What is the implementation cost?

What will the new running costs be?

What performance can be achieved?

How long do you need to run new and old systems in parallel?

When can we get customers onto feature x?

What benefit is realized by each increment?

What skills will your people need?

How will consuming systems be updated?

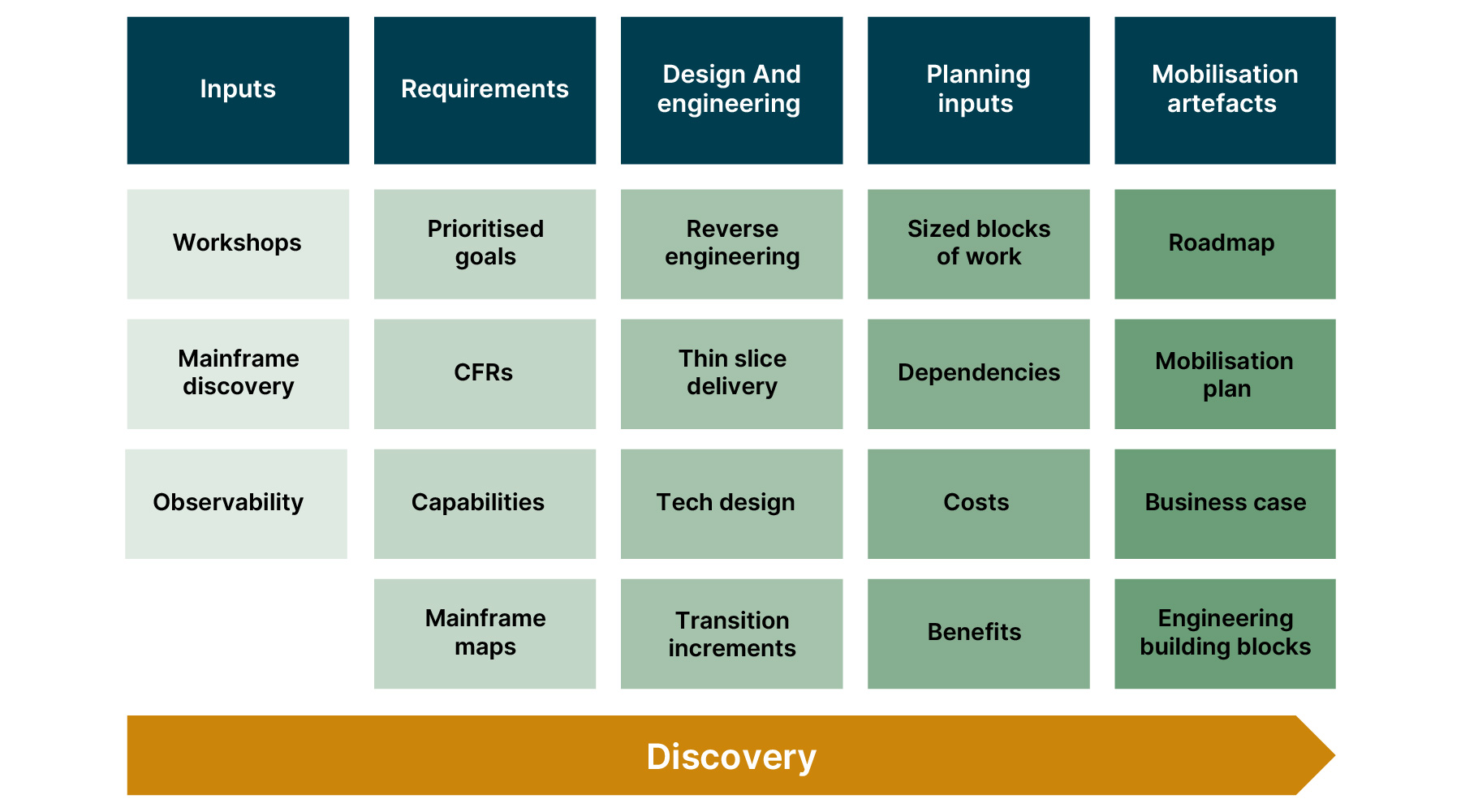

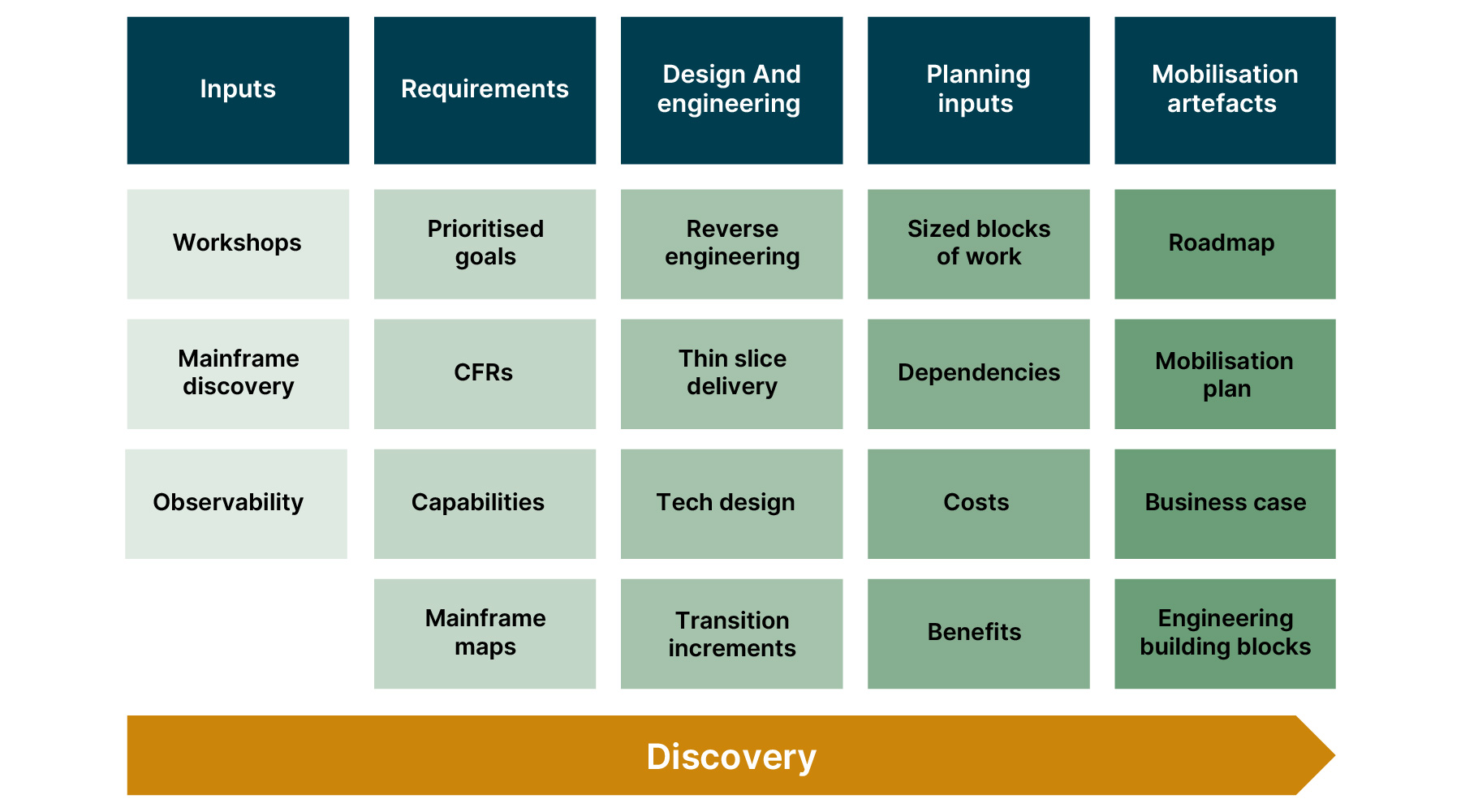

To shape the answers we run a multi-month discovery phase. This involves analysis of existing systems, design and understanding of benefits. Given the huge surface area we see with mainframes, we need to make assumptions, but with sufficient data we can validate those assumptions and build confidence.

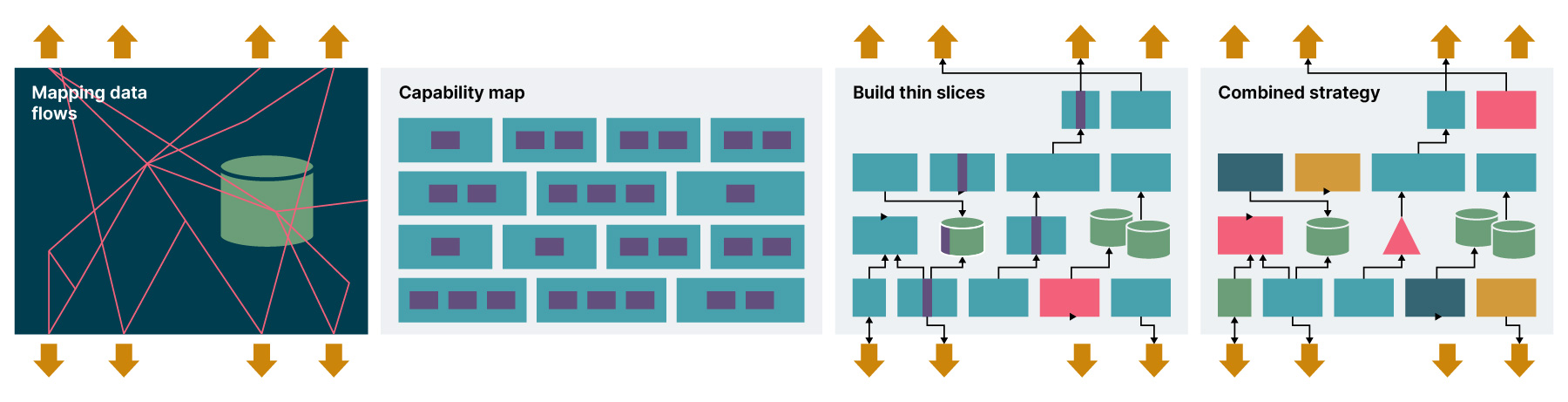

A risk-based prioritization of discovery activities leads us to unpack the most complex or unknown areas of the system first. We do this by mapping technical and business capabilities, creating tailored designs and building spikes and thin slices of the code.

The discovery phase addresses these questions at a high level, with quantified margins of uncertainty. We analyze the whole landscape. We dive into some areas in detail and extrapolate our understanding from there. The temptation is to do increasingly detailed analysis and design, with an expectation that this will lead to more certainty, but design has a shelf life: as time passes and the rest of the world moves on, it becomes less valid. For example, in the fast-moving world of cloud service providers, you may make a technology choice that is superseded by a new offering within 12 months. Or additional regulatory rules are announced. Or a new product on the horizon changes the required capabilities or prioritization. Or organizational change means technical boundaries must be redrawn. Too much analysis up front can lead to wasted effort and a false sense of certainty, so we need to find a balance of ‘just enough’ analysis.

Mapping what you have

What is on your mainframe?

If your mainframe is decades old it will have built up a lot of technical debt. We see issues such as undocumented logic, duplicated functionality, duplicated code, dead code, poor structure, unnecessary complexity and missing source code. We find multiple languages and technical frameworks implemented and approaches that have been layered on top of each other over many years.

Legacy complexity factor:

Mainframes can vary greatly in their difficulty to work with. The ‘legacy complexity’ factor answers the question ‘how hard will it be to work with?’. It is based on characteristics such as the age of the system, levels of tech debt, technologies used and availability of SMEs. The system may contain Assembler (no tools), or an uncommon language (e.g. REXX, for which skills are hard to find). It may have been poorly maintained (lots of duplication and dead code), keep logic in config (where static code analysis cannot be used), or simply be poorly designed.
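One way to make the factor usable when sizing is to turn these characteristics into a multiplier. The sketch below is illustrative only: the field names, thresholds and weights are assumptions made for this example, not a calibrated model, and would need tuning against your own estate.

```python
from dataclasses import dataclass


@dataclass
class LegacyArea:
    """Characteristics of one area of the mainframe estate (hypothetical fields)."""
    name: str
    age_years: int
    uses_assembler: bool
    uncommon_language: bool    # e.g. REXX, where skills are hard to find
    logic_in_config: bool      # static code analysis cannot see this logic
    duplicated_or_dead: float  # estimated share of the code, 0.0 to 1.0
    sme_available: bool


def legacy_complexity(area: LegacyArea) -> float:
    """Return a sizing multiplier of at least 1.0. All weights are illustrative."""
    factor = 1.0
    if area.age_years > 20:
        factor += 0.2
    if area.uses_assembler:
        factor += 0.5
    if area.uncommon_language:
        factor += 0.3
    if area.logic_in_config:
        factor += 0.3
    factor += 0.5 * area.duplicated_or_dead
    if not area.sme_available:
        factor += 0.4
    return round(factor, 2)


batch_billing = LegacyArea("batch billing", 30, True, False, False, 0.2, True)
print(batch_billing.name, legacy_complexity(batch_billing))
```

The absolute numbers matter less than applying the same scale to every area, so that estimates for different parts of the mainframe can be compared.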

Code analysis:

There are a number of tools that (depending on your technologies) may extract data from your source code, allowing you to build a map of your mainframe. “Code as data” techniques can be used alongside or independently of these.
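As a minimal sketch of the “code as data” idea, the snippet below treats COBOL source text as input and extracts static `CALL 'PROGRAM'` statements into a call graph. It assumes fixed-format COBOL (comment indicator in column 7) and hypothetical program names. It deliberately ignores dynamic calls through a variable, which is one reason static analysis alone is not enough.

```python
import re

# Matches static calls such as: CALL 'LEDGER' USING WS-REC.
CALL_PATTERN = re.compile(r"\bCALL\s+['\"]([A-Za-z0-9#@$-]+)['\"]", re.IGNORECASE)


def build_call_graph(sources: dict[str, str]) -> dict[str, set[str]]:
    """Map each program name to the set of programs it calls statically."""
    graph: dict[str, set[str]] = {}
    for program, text in sources.items():
        callees = graph.setdefault(program.upper(), set())
        for line in text.splitlines():
            # Fixed-format COBOL: '*' or '/' in column 7 marks a comment line.
            if len(line) > 6 and line[6] in "*/":
                continue
            for callee in CALL_PATTERN.findall(line):
                callees.add(callee.upper())
    return graph


# Hypothetical sources, for illustration only.
sources = {
    "PAYMENTS": (
        "           CALL 'LEDGER' USING WS-REC.\n"
        "      * CALL 'OLDPROG'\n"
        "           CALL WS-DYNAMIC-PROG.\n"
    ),
    "LEDGER": "           CALL 'AUDIT'.\n",
}

for program, callees in build_call_graph(sources).items():
    print(program, "->", sorted(callees))
```

The resulting graph can be queried or visualized like any other dataset, for example to find programs that nothing calls or clusters that suggest capability boundaries.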

Reverse engineers can work with business analysts to extract requirements from source code. In this discovery phase, this is not to directly reproduce the logic within the code, it is about understanding the structure and intent of the code.

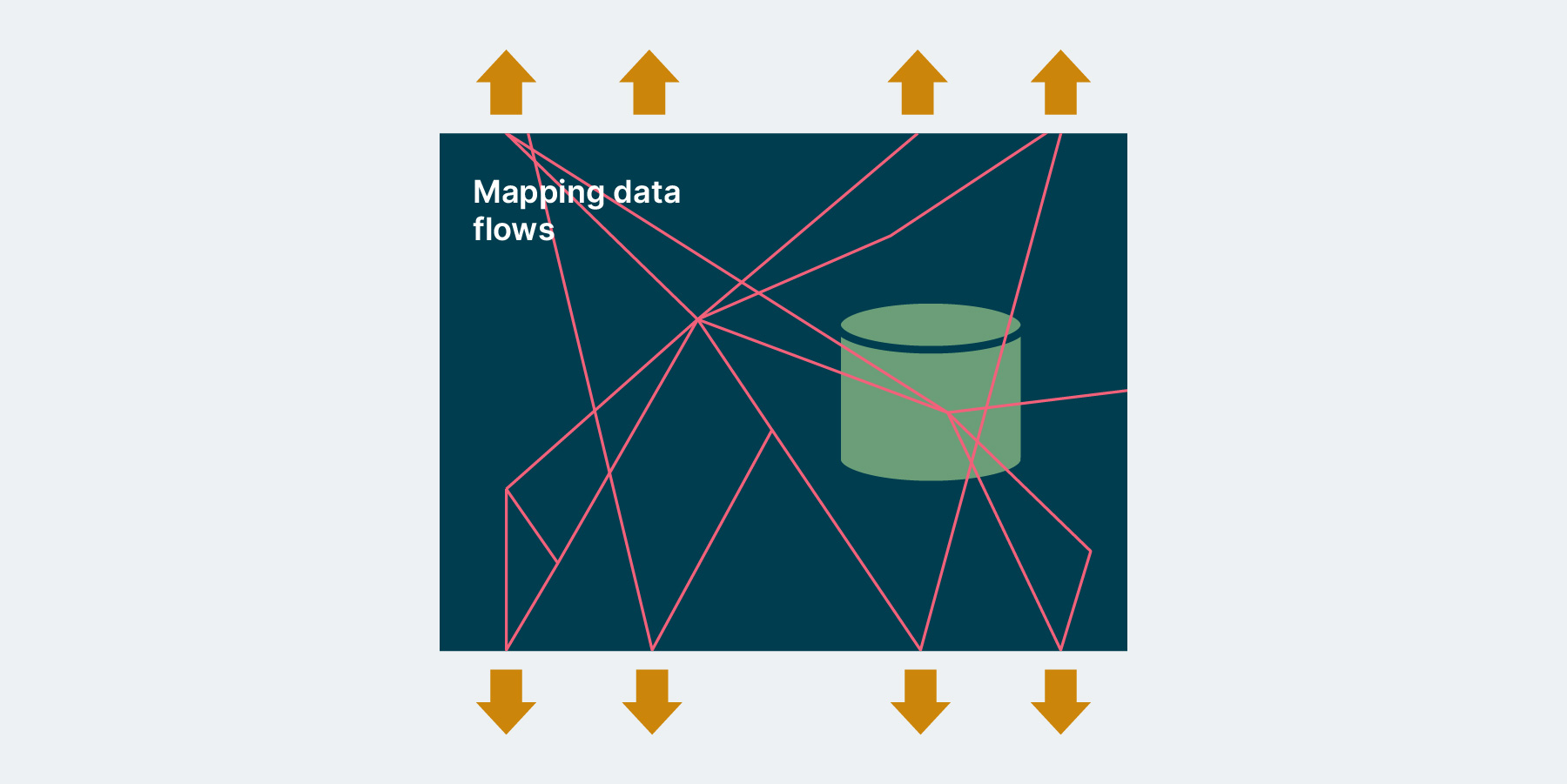

Observe mainframe in production:

Code analysis has limitations, such as when there is significant dynamic dispatch. So to determine what is actually being executed we need to observe the mainframe running in production. This can be used to minimize the reverse engineering scope. Mainframes were not built with modern levels of observability, and while it may be possible to add some retrospectively, the performance and cost impact of this may be prohibitive. You may have to implement logging around the edges of the mainframe, hooking into journals or events, or intercepting data flows.
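A rough illustration of how production observations can trim the reverse engineering scope: compare the inventory of programs with those seen executing in logs captured at the edges. The log format (`timestamp program`) and program names here are hypothetical.

```python
from collections import Counter


def execution_counts(log_lines: list[str]) -> Counter:
    """Count executions per program from lines shaped like '<timestamp> <program>'."""
    counts: Counter = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) >= 2:
            counts[parts[1].upper()] += 1
    return counts


def never_observed(inventory: set[str], counts: Counter) -> list[str]:
    """Programs in the inventory that did not appear in the observation window."""
    return sorted(p for p in inventory if counts[p] == 0)


# Hypothetical inventory and log extract, for illustration only.
inventory = {"PAYMENTS", "LEDGER", "AUDIT", "OLDPROG"}
log_lines = [
    "2024-01-05T09:00:01 PAYMENTS",
    "2024-01-05T09:00:02 LEDGER",
    "2024-01-05T09:00:02 AUDIT",
    "2024-01-05T09:00:07 PAYMENTS",
]

counts = execution_counts(log_lines)
print("candidates to descope:", never_observed(inventory, counts))
```

Programs that never appear are only candidates for descoping. The observation window needs to be long enough to cover infrequent work such as period-end batch runs before anything is ruled out.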

Mapping what you need

What do you need your new systems to do?

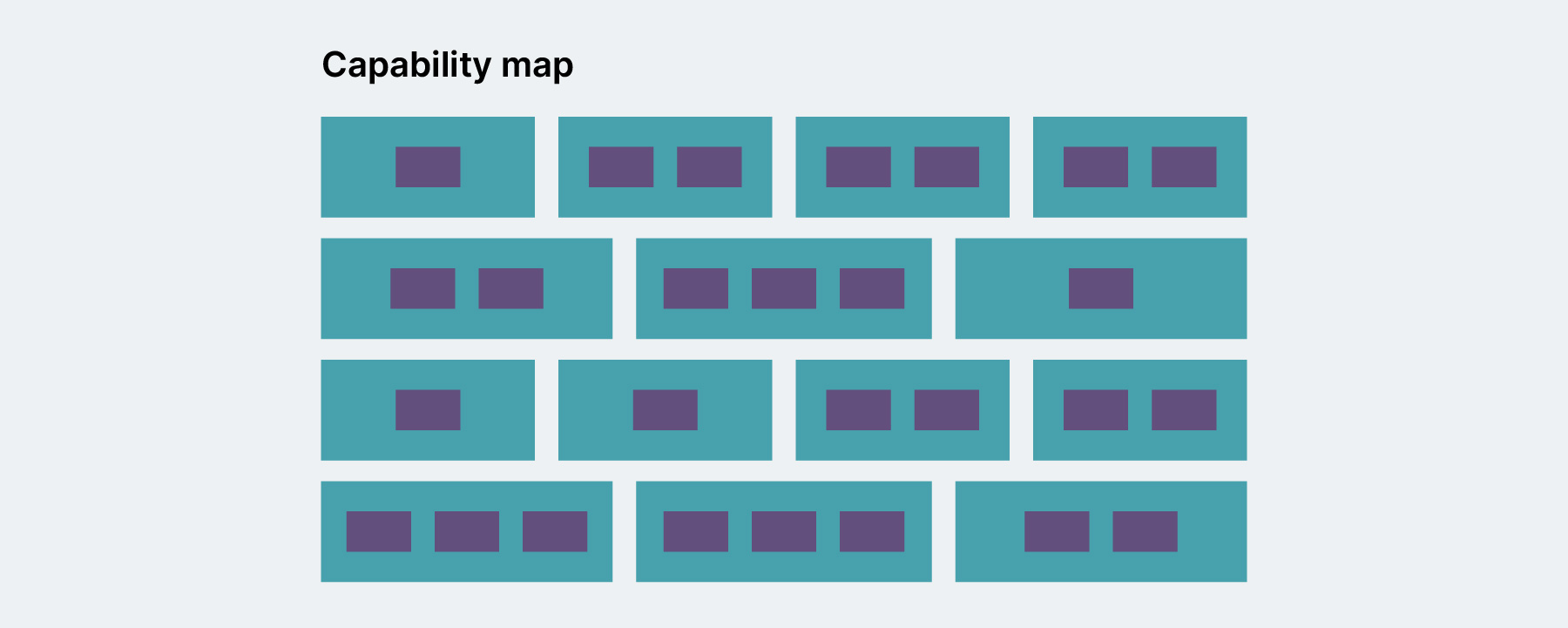

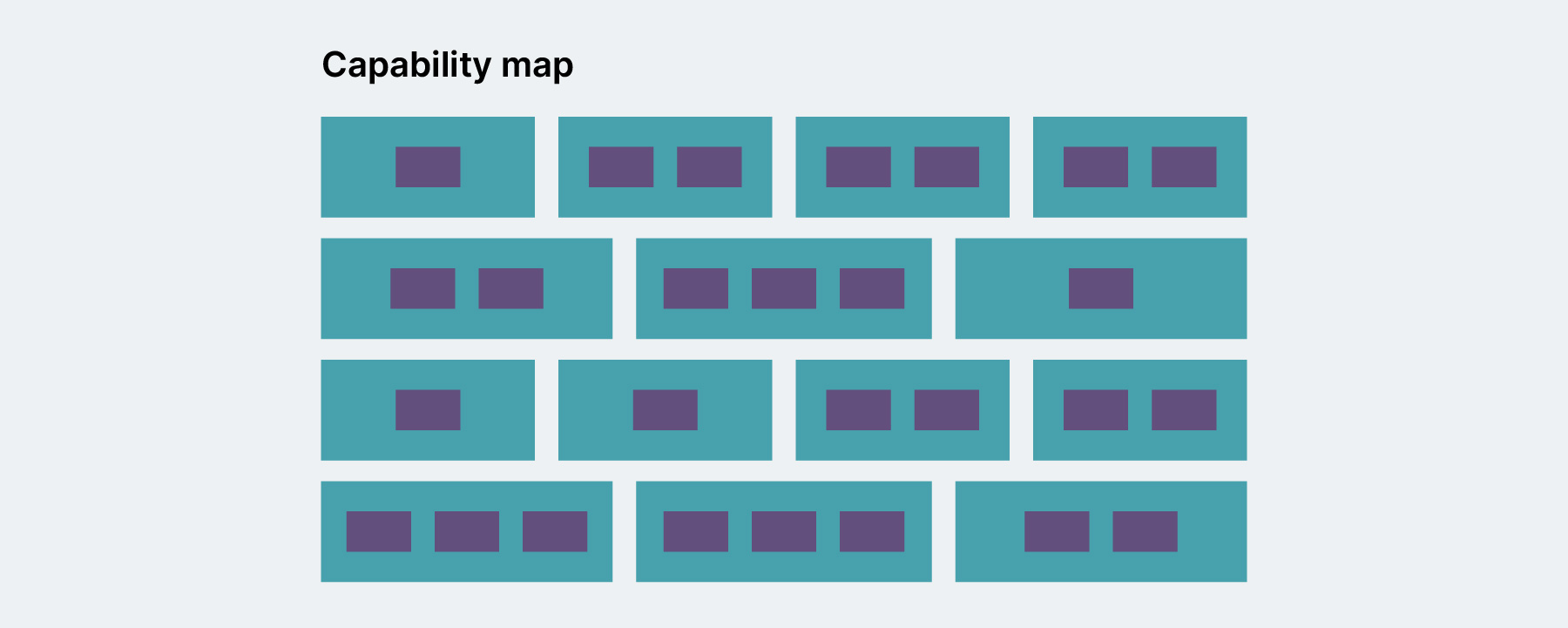

You need to understand the functionality provided today by your mainframe and surrounding systems. Much of this detail is likely to exist only in the heads of your SMEs and in the source code. You then need to understand which parts of this functionality need to be retained. We have built comprehensive business capability maps using workshops and reverse engineering discovery to map out what capabilities are needed for your new systems. These can then be used for building and sizing your roadmap and can be prioritized to meet your goals.

There will be details we do not uncover with design only, so we start engineering during the discovery phase.

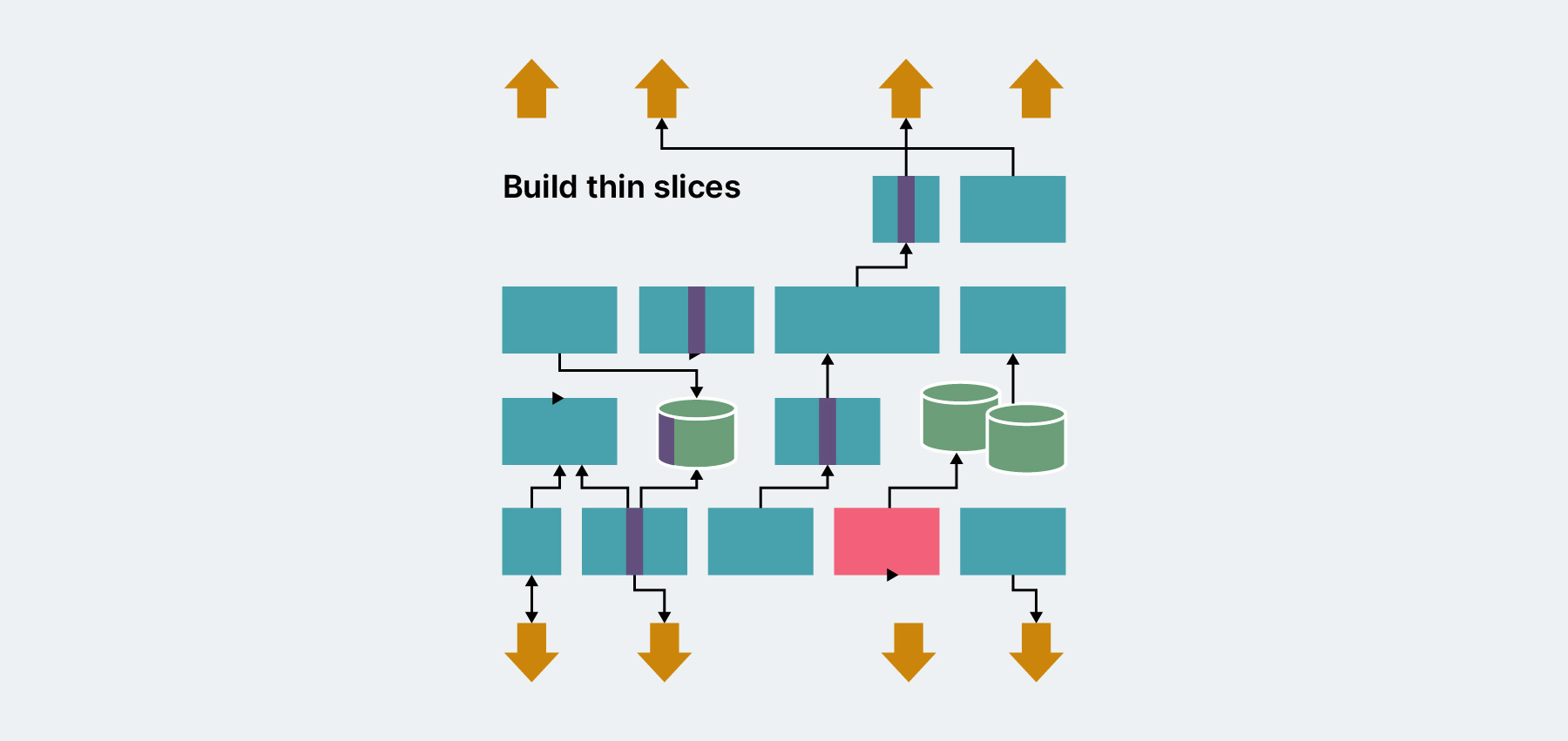

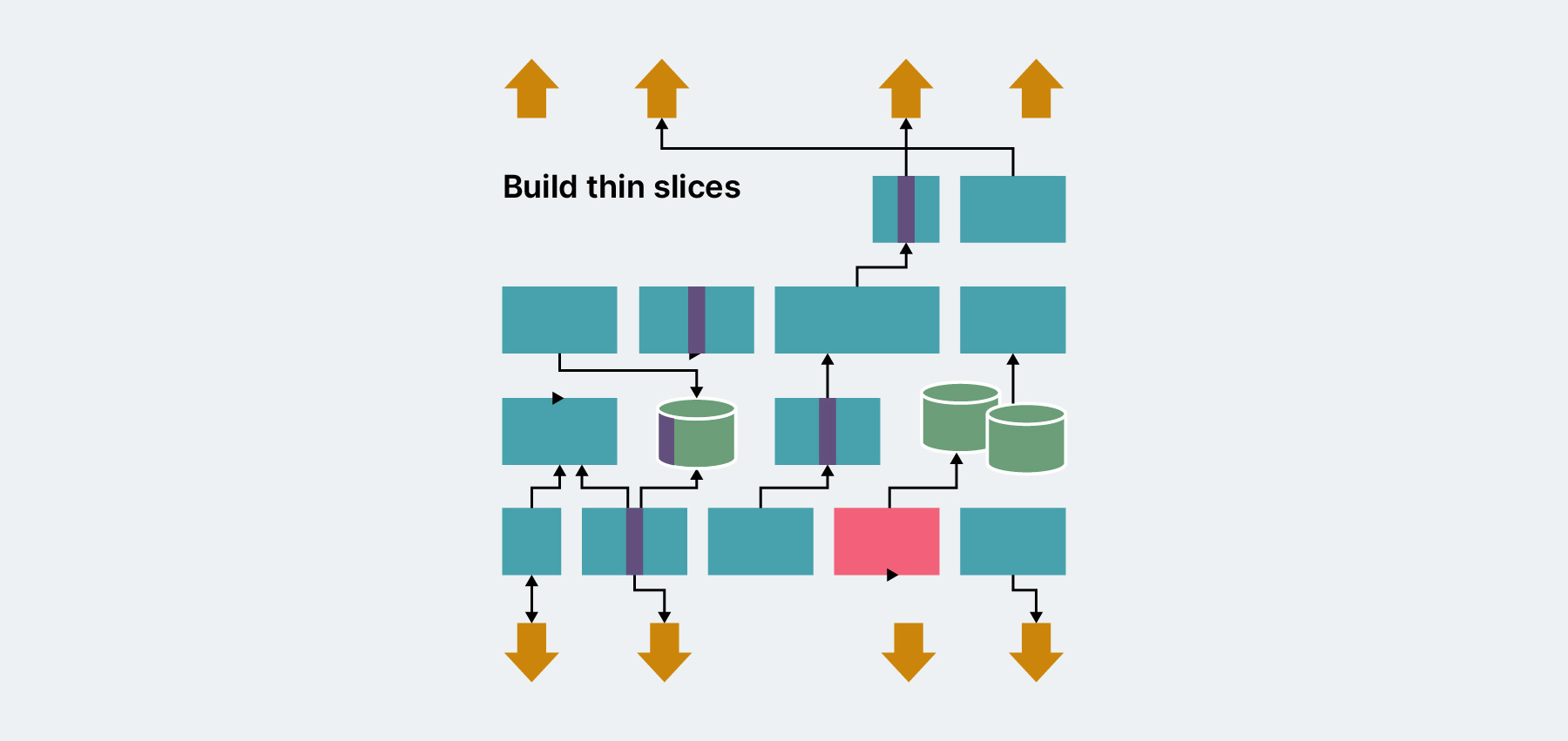

We start targeted reverse engineering in key and complex areas. We prioritize and build real thin slices of the new designs, to understand what would be needed to take this to production. We build spikes to understand how different tech choices work within the context. We look for patterns and themes across the capabilities: what is repeated, where a theme can be extracted, and whether we can use the same tech and the same techniques and build shared foundations.

There will be a question of how thin a thin slice is. When working with other types of legacy replacement (non-mainframe) we would find thin slices that can actually be released to customer traffic. However, with an established mainframe it is likely that the smallest set of functionality that can serve customers is a significant proportion of the whole. In this case, to learn fast, we should create thin slices that cannot serve customers without additional work in subsequent phases. We make these as ‘production-like’ as we can to maximize learning.

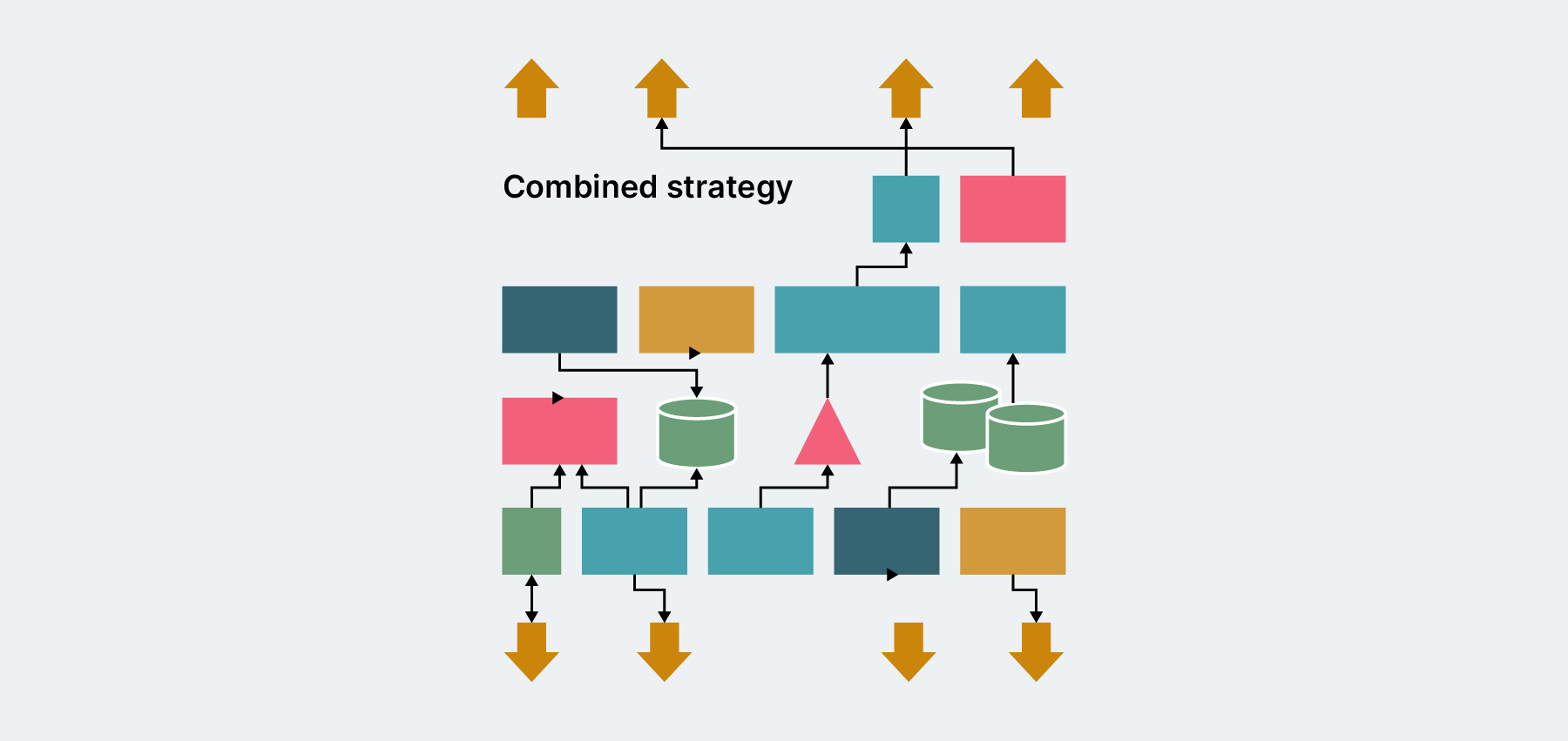

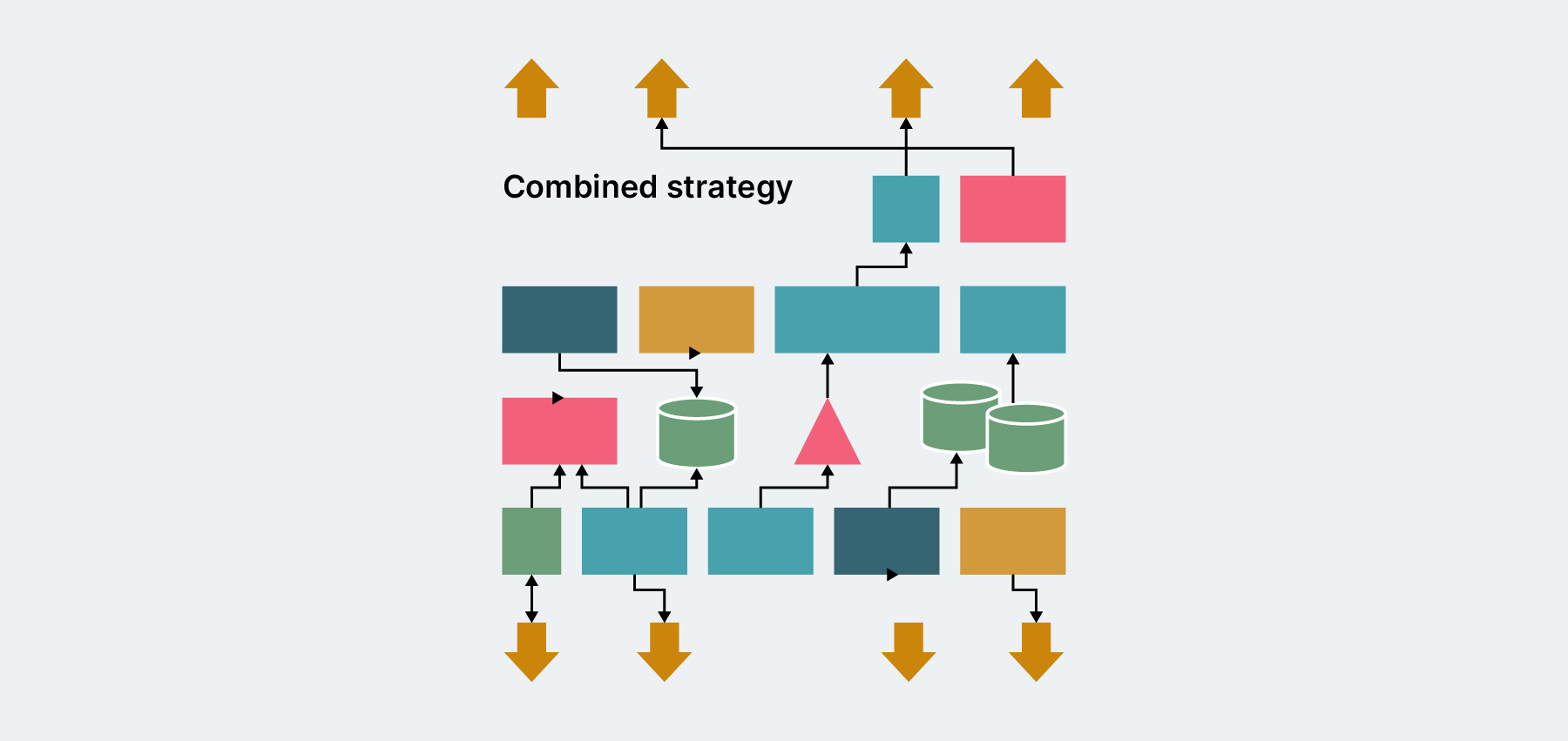

Combining approaches

Be prepared for a complex set of solutions, not a single one-size-fits-all

When choosing which of the mainframe modernization strategies (rehost, replatform, rearchitect) meets your needs, you must evaluate each against your goals and the value it will realize for each area of capability. It is tempting to believe there are magic bullets out there, technology solutions that take away the hard work. There are innovative products designed to emulate or refactor mainframe code, which can accelerate your migration, but don’t expect magic. These products may form a part of your toolkit when chosen selectively for appropriate problems, but they don’t take away the complexity and scale.

We have evaluated emulators, refactoring tools and accelerators. We have tried home-grown “code as data” visualizations and automated logic extraction. We have explored techniques such as Strangler Fig, Legacy Mimic, database replication as a seam (moving read MIPS off the mainframe), Event Interception, Application Modernization and adding observation around the mainframe. We have evaluated the performance and costs of technologies and transition approaches.

We find that a combination of approaches will be required when replacing a mainframe developed over many years, and we must understand the cost, benefit and risk of each part of the solution.

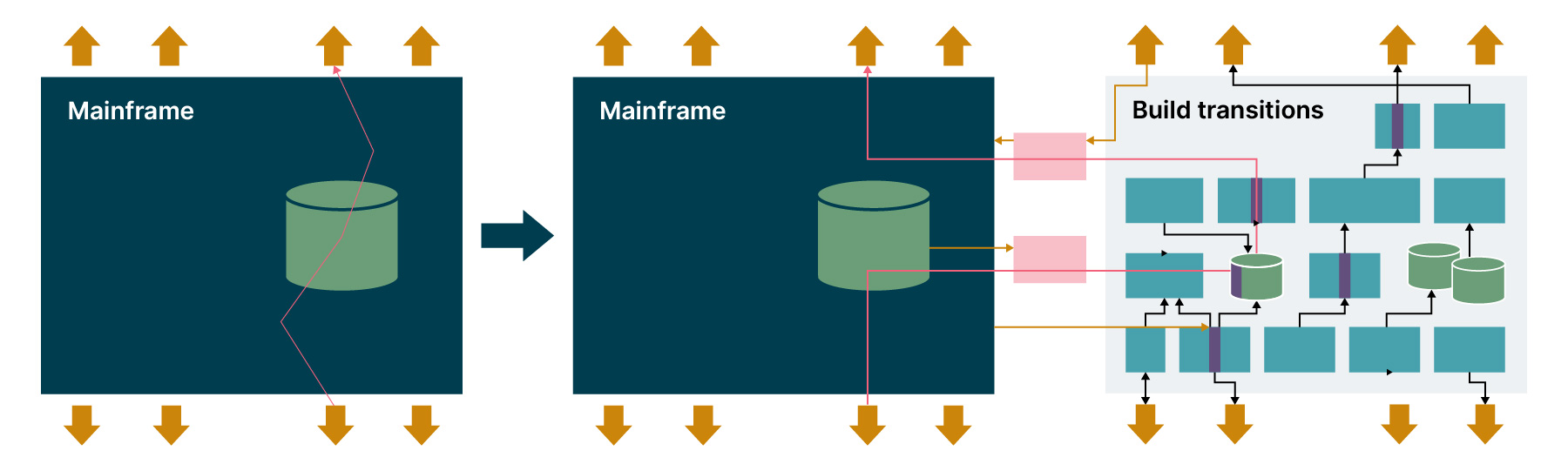

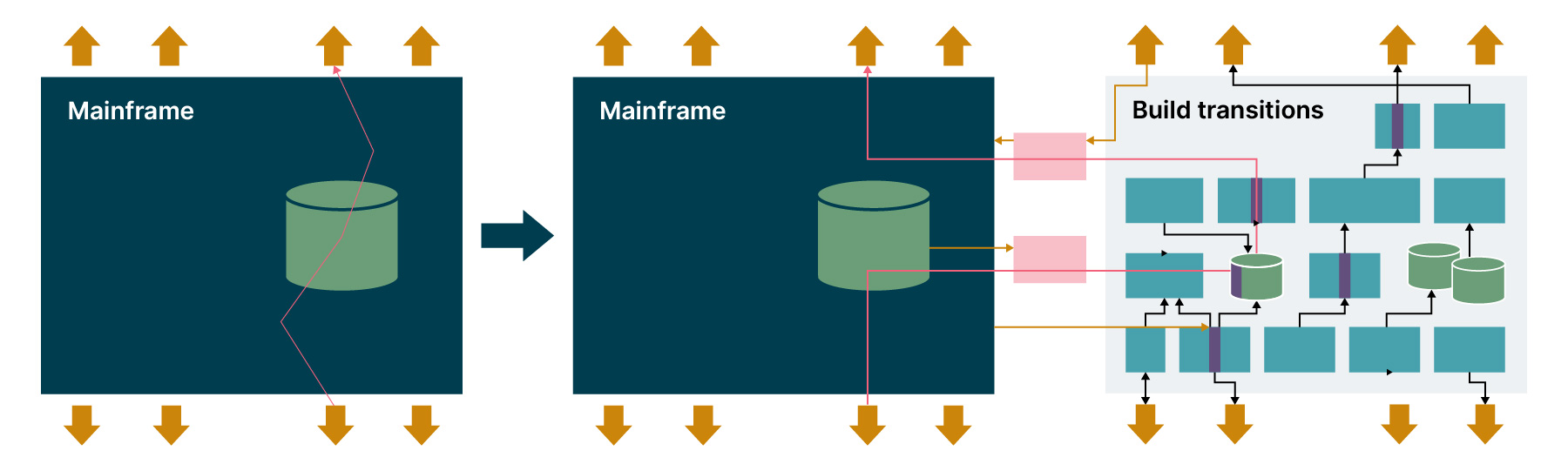

Finding transition increments

Identify incremental transition steps to move customer traffic from the mainframe to the new systems

Our key strategy to reduce transition risk and to release value early is to reduce the size of the increments going live to customers. To break up the mainframe into manageable bite-sized chunks we must identify functional and technical boundaries within the systems. The functional boundaries will be both capability boundaries and logical data flows. The technical boundaries will be seams where we can hook new systems into mainframe programs and databases. At these boundaries we can create handoffs between old and new, allowing new systems to work alongside the legacy one as we transition from one to the other.
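A handoff at a seam can be pictured as a small routing layer, in the spirit of the Strangler Fig technique: capabilities that have been migrated go to the new system and everything else still reaches the mainframe. This is a simplified sketch with hypothetical capability names and stand-in handlers, not a production integration.

```python
from typing import Callable

Handler = Callable[[dict], dict]


class SeamRouter:
    """Routes each request to the new system if its capability has been migrated."""

    def __init__(self, legacy: Handler) -> None:
        self._legacy = legacy
        self._migrated: dict[str, Handler] = {}

    def migrate(self, capability: str, handler: Handler) -> None:
        """Send traffic for this capability to the new system from now on."""
        self._migrated[capability] = handler

    def rollback(self, capability: str) -> None:
        """Return this capability's traffic to the mainframe."""
        self._migrated.pop(capability, None)

    def handle(self, capability: str, request: dict) -> dict:
        handler = self._migrated.get(capability, self._legacy)
        return handler(request)


# Stand-ins for real integrations (hypothetical).
def mainframe(request: dict) -> dict:
    return {"served_by": "mainframe", **request}


def new_balance_service(request: dict) -> dict:
    return {"served_by": "new", **request}


router = SeamRouter(legacy=mainframe)
router.migrate("balance-enquiry", new_balance_service)

print(router.handle("balance-enquiry", {"account": "123"}))
print(router.handle("payment", {"account": "123"}))
```

Keeping a rollback path per capability is one way to keep each increment small and reversible while both systems run in parallel.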

Transition increments are pieces of the new system that can be put live to release customer value. These will be larger than the discovery thin slices and include integrations with the mainframe. The set of transition increments (with understood dependencies) can be used to build roadmap options, using prioritization based on goals and benefit trade offs.
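To illustrate how a set of increments with known dependencies can yield a roadmap option, the sketch below orders increments so that dependencies always come first and, among those ready to start, picks the highest estimated benefit. The increment names and benefit scores are hypothetical. It uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9+).

```python
from graphlib import TopologicalSorter


def roadmap_order(depends_on: dict[str, set[str]], benefit: dict[str, float]) -> list[str]:
    """Order increments respecting dependencies, preferring higher benefit."""
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()  # raises graphlib.CycleError if dependencies are circular
    ready: list[str] = []
    order: list[str] = []
    while sorter.is_active():
        ready.extend(sorter.get_ready())
        # Among increments whose dependencies are done, take the most valuable.
        ready.sort(key=lambda name: benefit.get(name, 0.0), reverse=True)
        chosen = ready.pop(0)
        order.append(chosen)
        sorter.done(chosen)
    return order


# Hypothetical increments: each maps to the increments it depends on.
depends_on = {
    "balance-enquiry": set(),
    "payments": {"balance-enquiry"},
    "statements": {"balance-enquiry"},
    "ledger": {"payments", "statements"},
}
benefit = {"balance-enquiry": 3, "payments": 8, "statements": 5, "ledger": 10}

print(roadmap_order(depends_on, benefit))
```

Changing the benefit scores when priorities shift produces a different ordering from the same building blocks, without redoing the underlying analysis.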

Creating roadmap and mobilization plan

A modular and flexible roadmap that can respond to change

Bringing this all together, we take the transition increments and size them with our engineering teams, taking into account:

Complexity and repeatability of future architecture

Cross-functional requirement challenges in a non-mainframe world

The ‘legacy complexity’ of existing mainframe code

Technical transition strategy

Bringing in the goals, priorities and dependencies, we can build a roadmap and mobilization plan, using the benefits to understand the return of each increment. The roadmap can be reworked with the same underlying building blocks to meet your goals in the best way for your organization, according to the latest goal prioritization.

Summary

Enough discovery to build a roadmap, business case and mobilize

When legacy replacement is needed, delaying action can make the problem harder.

Jumping to solutions before we have enough data risks failure. But with just enough discovery, pilots and prioritization we can get started with confidence and not waste time. We can build a roadmap that can deal with change and bring risk forward.

We can eat the elephant one bite at a time. The challenge is great, but where the benefits are there, it is a valuable journey.