Nowadays every organization wants to be data-driven, since the technological constraints on taking advantage of data have all but disappeared. As a result, companies now have to figure out which problems they are solving with data and how to pick and integrate the tooling that's currently available. These questions are difficult to answer even in a company that has embraced modern ways of delivering software and is willing to make the organizational changes necessary for becoming data-driven. Most companies are not like that; they tend to have long release cycles coupled to an inflexible architecture, with teams that are siloed from each other and from business stakeholders.

Current approaches

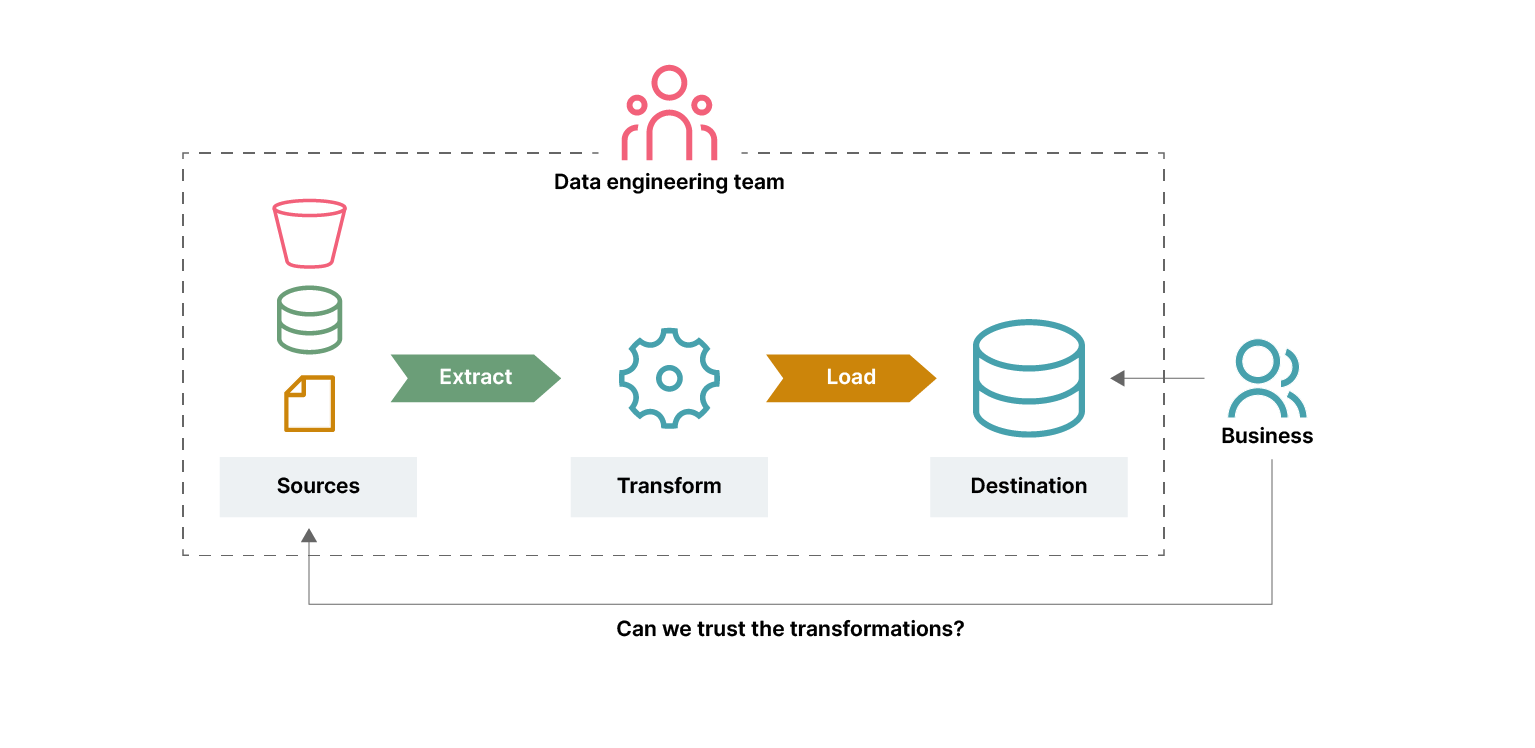

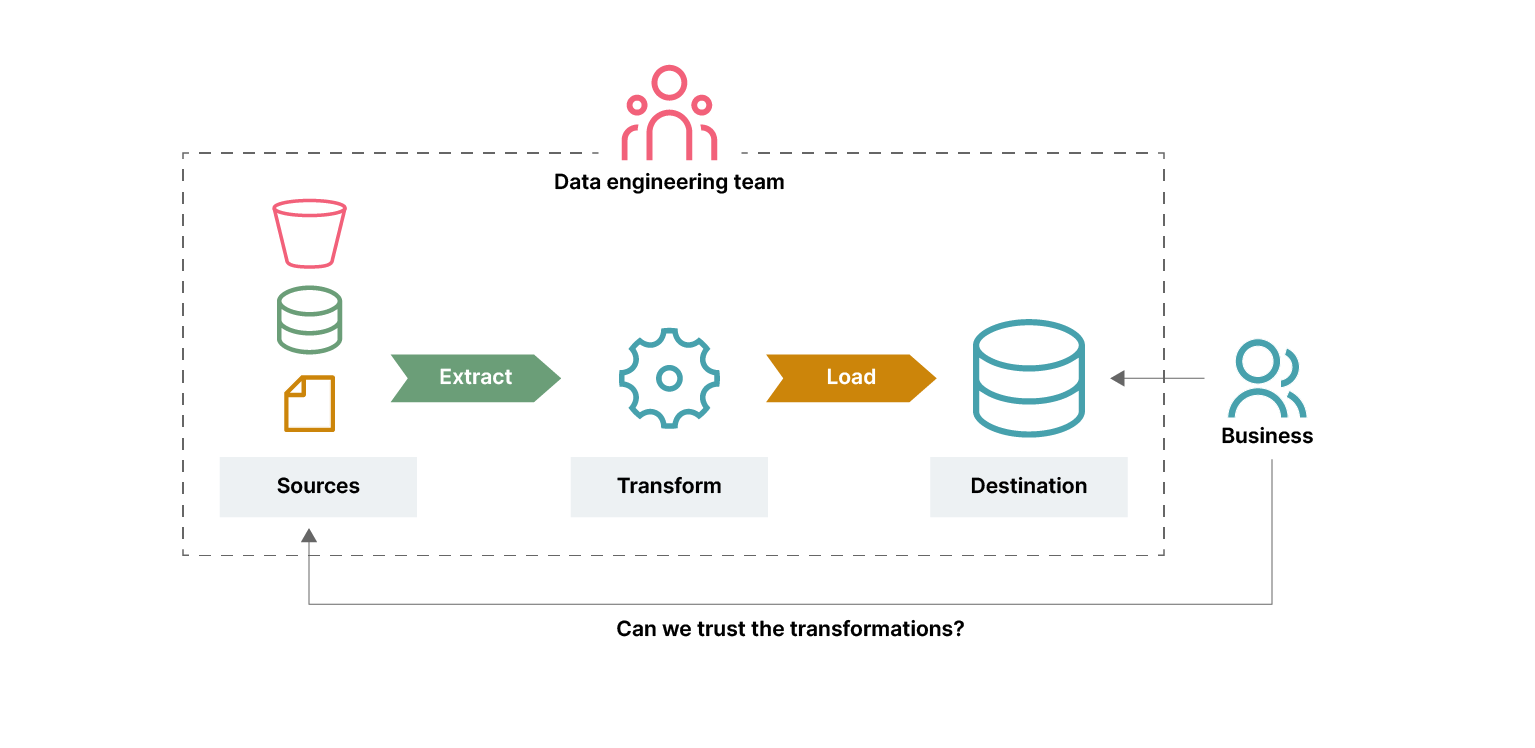

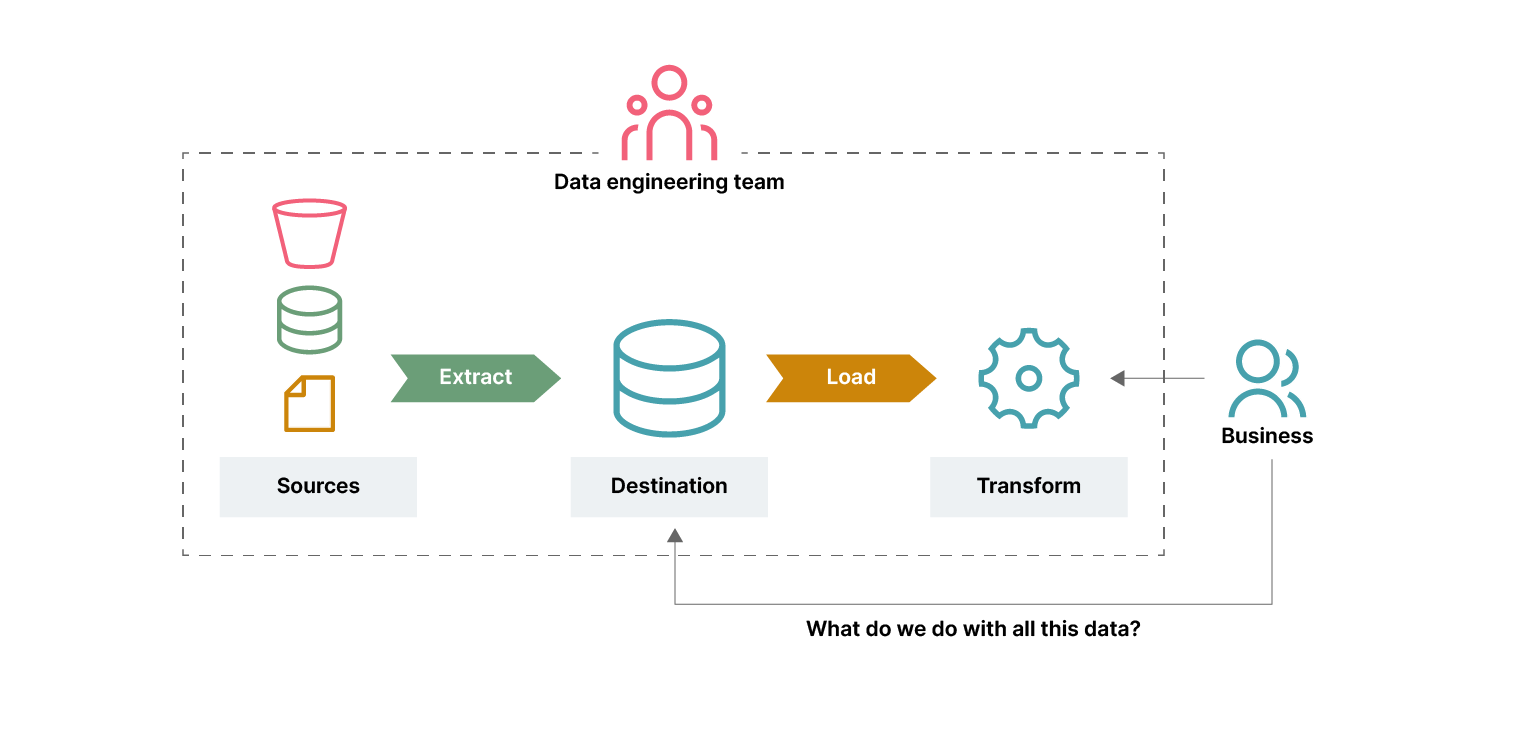

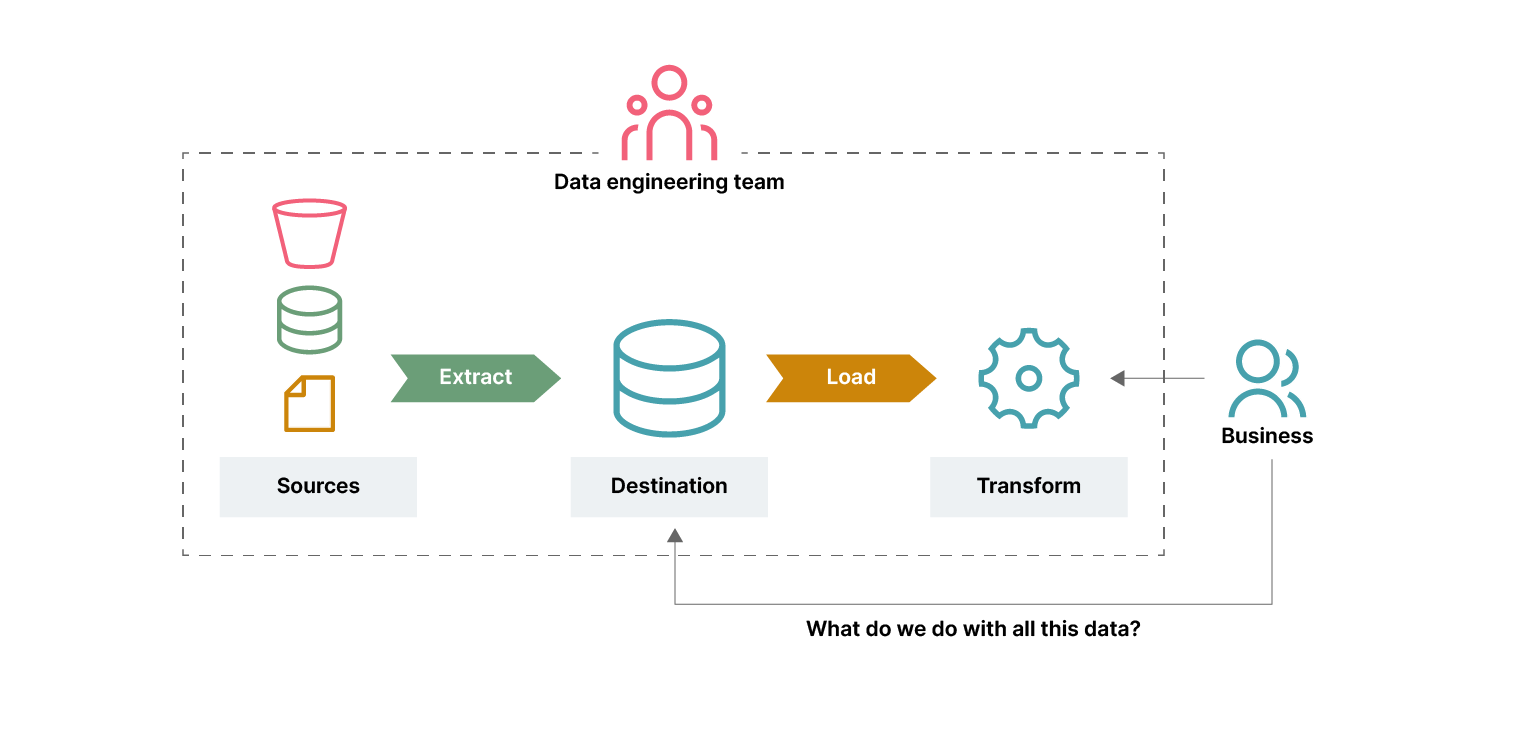

I see quite a lot of companies that attempt to become data-driven follow one of two approaches: building massive Extract-Transform-Load (ETL) pipelines or building massive Extract-Load-Transform (ELT) pipelines. Both rely heavily on tooling and postpone answering the question of what the company wants to accomplish with data.

Curated data warehouse

Suppose a company wants to understand how their products are utilized. They can follow these steps:

Build a team to implement an analytics platform consisting of pipelines taking source data, calculating some metrics, and storing the results in a warehouse.

Look at the data to see whether it helps them answer any business questions.

Spend a lot of time manually validating that the data is correct.

All of this places a lot of overhead on a centralized, siloed data team that doesn’t have enough domain knowledge to make adequate semantic assessments.

Data lake with data dumps

There's another approach that could be taken with the following steps:

Dump all of the available data in a raw form into a data lake.

Slap a query engine on top of it.

Ask your analytics engineers and data scientists to start providing insights.

Not only are these people expensive to hire, but you are again lacking focus on the business problems you are trying to solve.

A better way

In both of these examples, there's a heavy reliance on centralized teams, which doesn't scale well and doesn't work at all when the organization is siloed. In the past, we had the same problems with QA silos, which were solved by integrating QA into the development cycle with automated test suites and continuous delivery practices. These practices shortened the feedback cycles and pushed most of the delivery effort to the start of the development process, where it’s easier to course correct. I believe that we need more of this mindset in the analytics domain. One way of embracing it is to treat data as a product, as the Data Mesh paradigm suggests. To achieve this, engineers creating data products need to understand their domain or be closer to the source systems of the data.

Many issues with the two approaches outlined above stem from the operational system producing data that's not meant for analytical purposes. In a sense, data is simply thrown over the fence with no regard for how analytics will use it. Proponents of Data Mesh already advocate closing the divide between the operational and analytical systems. Closing it can also benefit companies that aren’t in a hurry to scale their analytical work.

I see two ways of closing the divide:

A technical approach: operational frameworks are improved to help model analytical data, thus bringing analytical requirements into the development of operational systems.

A socio-technical approach: analytical requirements are considered and implemented during the development of the operational system.

While the first approach is still far off, the second one is more realistic. For example, a microservice responsible for user management should also produce a snapshot of the user information with various legal and security constraints satisfied. Additionally, including metadata can help the consumers understand how the data relates to business processes. This snapshot can be used as reference information in analytical pipelines.
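As a rough illustration of the snapshot idea, here is a minimal Python sketch. Everything in it is an assumption made for the example rather than something prescribed by the article: the `User` fields, the consent flag standing in for a legal constraint, the salted hash standing in for a security constraint, and the metadata keys. A real service would derive these from its own legal and security requirements.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
import hashlib


@dataclass(frozen=True)
class User:
    """Operational record as a hypothetical user-management service might store it."""
    user_id: str
    email: str
    country: str
    consented_to_analytics: bool


def pseudonymize(value: str, salt: str) -> str:
    """Replace an identifier with a salted SHA-256 digest so records stay joinable."""
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()


def build_snapshot(users, salt, taken_at=None):
    """Build an analytics-facing snapshot from operational user records.

    Assumed constraints, for illustration only:
    - legal: users who did not consent to analytics are left out;
    - security: the email is dropped and the user id is pseudonymized.
    """
    taken_at = taken_at or datetime.now(timezone.utc)
    records = [
        {"user_key": pseudonymize(u.user_id, salt), "country": u.country}
        for u in users
        if u.consented_to_analytics
    ]
    return {
        # Metadata tells consumers how the data relates to the business process.
        "metadata": {
            "dataset": "user_snapshot",           # hypothetical dataset name
            "owner": "user-management-team",      # hypothetical owning team
            "taken_at": taken_at.isoformat(),
            "record_count": len(records),
            "description": "Consenting users at snapshot time, one row per user.",
        },
        "records": records,
    }


if __name__ == "__main__":
    users = [
        User("u-1", "ada@example.com", "LT", True),
        User("u-2", "bob@example.com", "DE", False),
    ]
    snapshot = build_snapshot(users, salt="demo-salt")
    print(snapshot["metadata"]["record_count"], snapshot["records"][0]["country"])
```

The point of the sketch is that the team owning the operational data decides what is safe and meaningful to publish, instead of leaving a downstream team to guess. Note that a salted hash is pseudonymization, not anonymization, so it may not be sufficient on its own for a given legal regime.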

Simply adding analytics requirements to a specialized microservice team might not bring the intended results. Cross-functional teams with data engineers close to the data sources would increase the data literacy of the whole organization. Additionally, there would still be a need for centralized data engineering teams that help the product teams deploy into production and consume data, just as platform teams support operational teams. This setup directly mirrors the implementation of the Data Mesh principles, making it easier to scale once the need arises.

Conclusion

Instead of scaling up a centralized data engineering team, I would invest in educating the engineering teams and product managers about how to work with data. This would allow the company to bring the effort of creating data products closer to the sources and be more intentional about the value that's being extracted from the data.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.