Healthcare is at an analytics scalability crossroads

When it comes to healthcare, data analytics isn’t just a ‘nice to have’; it can dramatically improve patient outcomes. Back in 2014, a data infrastructure solution called a health information exchange (HIE) was accessed only 2.4% of the time, yet patients whose providers examined their previous health data were 30% less likely to end up in the hospital. What’s more, the healthcare network in question saw annual savings of $357,000. Carried forward from 2014 to 2020, the future value of those savings is a remarkable $2.8M.
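The article does not show how the $2.8M figure was derived. One plausible reading is the future value of an ordinary annuity: equal yearly savings, each compounded forward to 2020. The sketch below assumes seven payments (2014 through 2020 inclusive) and a hypothetical 4% annual rate. Neither assumption comes from the source, and the function name is illustrative.

```python
def future_value_of_annuity(payment: float, rate: float, periods: int) -> float:
    """Future value of equal end-of-period payments compounded at `rate`.

    FV = payment * ((1 + rate) ** periods - 1) / rate, or payment * periods
    when the rate is zero (no compounding).
    """
    if rate == 0:
        return payment * periods
    return payment * ((1 + rate) ** periods - 1) / rate


# Assumptions, not stated in the article: seven annual savings of $357,000
# (2014 through 2020 inclusive) and a hypothetical 4% annual rate.
ANNUAL_SAVINGS = 357_000
ASSUMED_RATE = 0.04
YEARS = 7

fv = future_value_of_annuity(ANNUAL_SAVINGS, ASSUMED_RATE, YEARS)
undiscounted = ANNUAL_SAVINGS * YEARS
print(f"future value: ${fv:,.0f}; simple sum: ${undiscounted:,.0f}")
```

Under these assumptions, the future value comes to roughly $2.82M, compared with $2.5M for the plain sum. A different rate or period count changes the result, so treat the parameters as placeholders.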

Yet even with that financial ROI, fast forward six years to February 2020, and it still took the COVID-19 pandemic to triple HIE usage, as providers came to understand the importance of sharing patient data during the crisis.

There are other examples of technical success in data infrastructure projects, but it can be difficult to get people to use these data systems, or to build the infrastructure for the right business problem. Let’s say you are committed to data sharing, analytics, and deriving benefits from your health data gold mine. What challenges can occur, and how do you avoid them?

A division of one of our customers, one of the largest national commercial health insurance companies, has a mission to reimagine health insurance as a digital business. The insights it can gain from patient outcomes, clinic visits, treatment regimens, pharmaceutical courses and doses, and myriad other clinical data sources, though, depend on its data infrastructure. Unfortunately, like many other large businesses, it has big data headaches that keep it from achieving its vision. Recognize any?

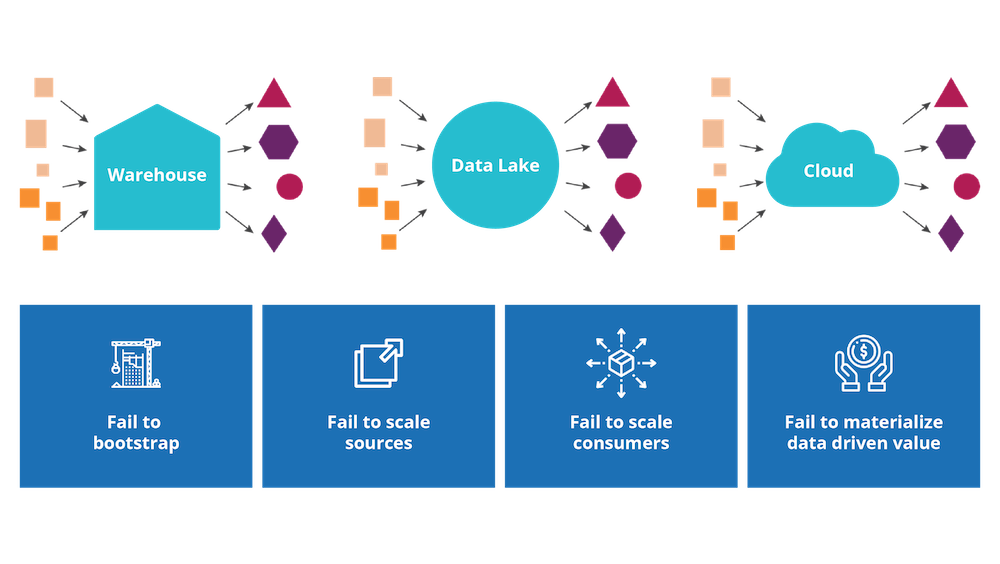

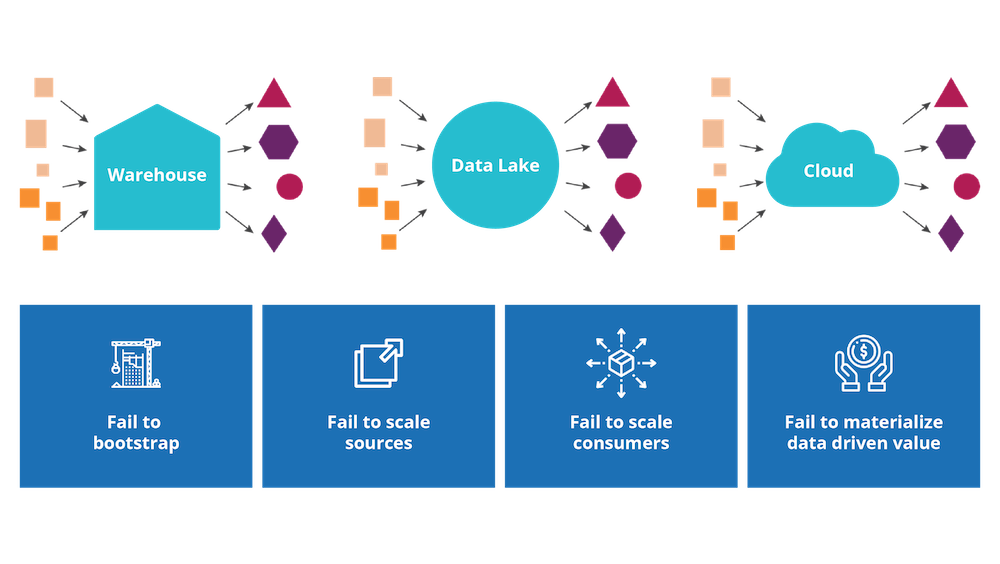

- Fail to Bootstrap - There were at least three failed attempts at building a data platform. Each took a centralized approach of ingesting, processing, and then serving data within a monolithic data solution (e.g. a data lake).

- Fail to Scale Sources and Consumers - Our client had a decades-old data warehouse that was aging and difficult to work with. Initially, they just wanted to move it to the cloud.

- Fail to Materialize Value - Our customer was exhausted by the sweeping goals of its digital vision to support and promote the health of every healthcare customer. This was a typical ‘boil the ocean’ requirement.

- Fail to Materialize Value - The IT analytics unit, like many others pursuing ‘big data projects’, spent an enormous amount of time on architecture. They were stuck on the how of the architecture rather than on what it should be, why it should be, or who it should be for.

Focusing on architecture in a top-down fashion while listening to a wide variety of users inevitably produced an architecture fit for no use case in particular. Additionally, over time, data analysis and application teams with different remits, working autonomously and in isolation, often encountered the same business and technical issues. This meant they kept relearning how to overcome recurring issues and never reused those learnings. For example, the same arduous effort to understand, correlate, and join data between systems was repeated over and over.

Another customer, for instance, has 28,000+ data warehouse scripts supporting its platform. These scripts are massively redundant because they are not implemented in a modular fashion. They point to the same source tables, perform the same joins, and replicate the same business rule transformations against those tables again and again. This "cut and paste" form of reuse makes adapting those business rules a massive undertaking, and that body of scripts represents significant technical debt.
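The alternative to "cut and paste" reuse is to define a join and its business rule once and have every consumer call that definition. The minimal sketch below illustrates this. The `Member` and `Claim` records, the "approved claims for active members" rule, and all function names are hypothetical and do not come from the customer's scripts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Member:
    member_id: str
    plan: str
    active: bool


@dataclass(frozen=True)
class Claim:
    claim_id: str
    member_id: str
    amount: float
    status: str


def approved_claims_for_active_members(claims, members):
    """The one shared definition of the join and its business rule.

    Hypothetical rule: keep only APPROVED claims that belong to ACTIVE members,
    paired with the member record. Changing the rule here changes it for
    every consumer at once.
    """
    active = {m.member_id: m for m in members if m.active}
    return [
        (claim, active[claim.member_id])
        for claim in claims
        if claim.status == "APPROVED" and claim.member_id in active
    ]


def total_paid_by_plan(claims, members):
    """Consumer 1: reuses the shared rule instead of re-implementing the join."""
    totals = {}
    for claim, member in approved_claims_for_active_members(claims, members):
        totals[member.plan] = totals.get(member.plan, 0.0) + claim.amount
    return totals


def claim_count_by_member(claims, members):
    """Consumer 2: a different report built on the same shared rule."""
    counts = {}
    for claim, _member in approved_claims_for_active_members(claims, members):
        counts[claim.member_id] = counts.get(claim.member_id, 0) + 1
    return counts


if __name__ == "__main__":
    members = [Member("m1", "gold", True), Member("m2", "silver", False)]
    claims = [
        Claim("c1", "m1", 100.0, "APPROVED"),
        Claim("c2", "m1", 50.0, "DENIED"),
        Claim("c3", "m2", 75.0, "APPROVED"),  # inactive member: excluded
    ]
    print(total_paid_by_plan(claims, members))
    print(claim_count_by_member(claims, members))
```

With 28,000 scripts, the equivalent change means editing every pasted copy of the rule. Here, it means editing one function.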

We have all seen typical data solutions fail publicly in the press or quietly die inside our own companies, and they tend to share familiar symptoms:

- Data lakes, on and off the cloud, and data warehouses seem particularly prone to a “build it and they will come” mentality. These catchall systems often lead to unplanned expansions in scope, dramatically extended time-to-market, and budget overruns, frequently resulting in something no one uses.

- Using multiple commercial off-the-shelf (COTS) point solutions to solve these challenges brings its own time-to-market, cost, and customization pain.

- When big data systems (data lakes, data warehouses) finally do go live, they suffer from user and data scalability issues. They also contain duplicates, missing items, and data outliers that lead to inaccurate computations.
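Duplicates, missing items, and outliers can be caught with simple automated checks before data reaches users. The sketch below profiles a list of records for all three. It uses Tukey's 1.5×IQR fences for outliers, which is a common convention, not a method the article prescribes. The function name and sample fields are hypothetical.

```python
import statistics
from collections import Counter


def profile_quality(records, key_field, value_field):
    """Flag duplicate keys, missing values, and IQR outliers in a list of dicts.

    Outliers use Tukey's fences: values below Q1 - 1.5*IQR or above
    Q3 + 1.5*IQR. The 1.5 multiplier is a common convention, not a rule
    taken from the article.
    """
    key_counts = Counter(r.get(key_field) for r in records)
    duplicate_keys = sorted(k for k, n in key_counts.items() if k is not None and n > 1)

    missing_rows = [i for i, r in enumerate(records) if r.get(value_field) is None]

    values = [r[value_field] for r in records if r.get(value_field) is not None]
    outliers = []
    if len(values) >= 4:  # too few points make quartiles meaningless
        q1, _median, q3 = statistics.quantiles(values, n=4, method="inclusive")
        iqr = q3 - q1
        low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
        outliers = [v for v in values if v < low or v > high]

    return {
        "duplicate_keys": duplicate_keys,
        "missing_rows": missing_rows,
        "outliers": outliers,
    }


if __name__ == "__main__":
    claims = [  # hypothetical sample rows
        {"claim_id": "c1", "amount": 100},
        {"claim_id": "c2", "amount": 110},
        {"claim_id": "c2", "amount": 110},    # duplicate key
        {"claim_id": "c3", "amount": None},   # missing value
        {"claim_id": "c4", "amount": 105},
        {"claim_id": "c5", "amount": 95},
        {"claim_id": "c6", "amount": 10000},  # likely outlier
    ]
    print(profile_quality(claims, "claim_id", "amount"))
```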

So, on top of the technical challenges, there are two broader issues. Either users don’t know a system exists or don’t use it enough, or IT has spent so much time trying to make the system useful to everyone that it becomes useful to no one. Given all these challenges, is it possible to connect these two groups, users and IT, and build an appropriate system in a timely manner? Is it possible to build a system that serves multiple, parallel uses and users without ‘cut and paste’ reuse? Is there truly a solution?

Yes.

We call it the Data Mesh.

The ROI of continuous data analytics and its benefits

Our example above showed a 30% lower likelihood of hospitalization when critical historical clinical information was linked and analyzed. While laudable, that result is only one instance of the broader benefits of our Data Mesh approach. It applies domain thinking to preserve the business meaning of data, and platform thinking to speed up delivery and serve data securely. As a result, our Data Mesh-driven projects have broken delivery time-to-market records at our customers.

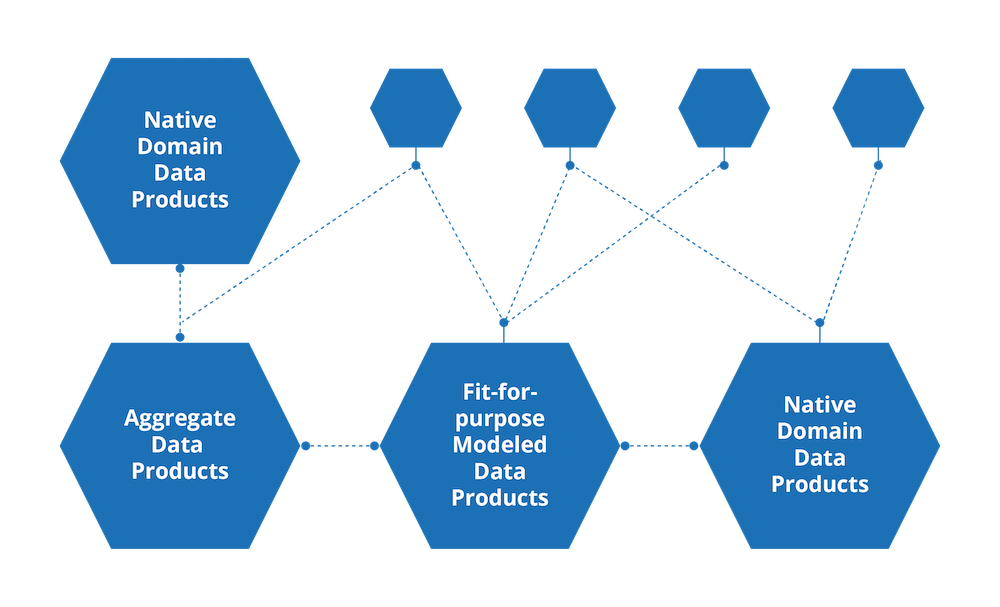

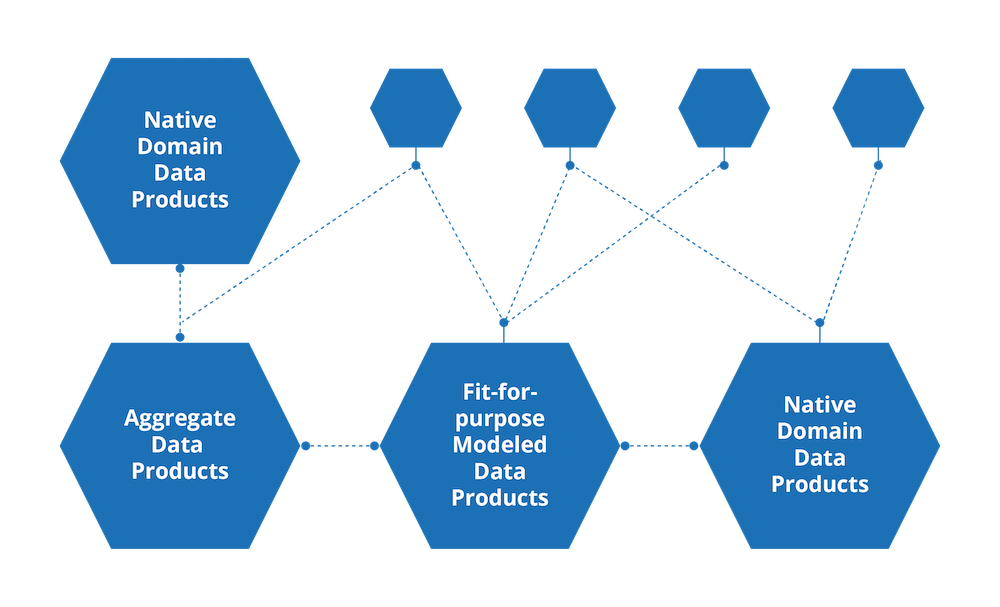

A vital output of our Data Mesh approach is called a ‘Data Product’. It serves a business community for one or more business use cases. A Data Product is essentially a topic- or domain-specific data set that is continuously and automatically updated, and it is built very quickly (in days to weeks). It is then typically accessed by analytical applications such as business intelligence, machine learning, and statistical modeling tools. Tools such as R, TensorFlow, PyTorch, Microsoft Power BI, or Tableau connect natively to the data product, because the Data Mesh tooling can generate connection types automatically. An important metric for measuring the Data Mesh’s time-to-market improvement is:

Metric: # of data products deployed live to customer group(s) per time period (i.e., the “time to market” to create a business-relevant Data Mesh data set).

This metric must be calculated with care so that it does not simply reward a massive proliferation of data products. It should count only data products that produce analytical ROI or business impact, have non-zero business users, and were delivered in a timely manner in the minds of those users.
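The guardrails above translate directly into a filter applied before counting. The sketch below assumes a hypothetical `DeployedDataProduct` record whose fields stand in for however an organization actually tracks go-live dates, user counts, business impact, and user-perceived timeliness. It counts qualifying products in a half-open date range.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class DeployedDataProduct:
    # Hypothetical record; field names are illustrative, not from any real tool.
    name: str
    live_on: date              # date it went live to a customer group
    business_users: int        # active business users of the product
    has_business_impact: bool  # produces analytical ROI or business impact
    timely_per_users: bool     # users judged it to be delivered on time


def counts_toward_metric(p: DeployedDataProduct) -> bool:
    """Apply the guardrails: impact, non-zero users, and timely delivery."""
    return p.has_business_impact and p.business_users > 0 and p.timely_per_users


def data_products_per_period(products, start: date, end: date) -> int:
    """Qualifying data products that went live in the half-open range [start, end)."""
    return sum(
        1 for p in products if start <= p.live_on < end and counts_toward_metric(p)
    )


if __name__ == "__main__":
    products = [  # hypothetical sample data
        DeployedDataProduct("covid-cases", date(2020, 4, 1), 120, True, True),
        DeployedDataProduct("unused-extract", date(2020, 4, 9), 0, True, True),
    ]
    print(data_products_per_period(products, date(2020, 4, 1), date(2020, 5, 1)))
```

The product with zero business users is deployed but excluded, which is exactly the proliferation the guardrails are meant to keep out of the count.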

The aforementioned healthcare company is nearly two years into its Data Mesh adoption. Early in the COVID-19 pandemic, the company tasked a data product development team with developing a set of COVID-care ‘data products’ for its members as quickly as possible. The team created eight data products within three weeks. On a separate project, another data product team built 50 data products that went live within two months, and 4,800 business users accessed them through Microsoft Power BI to improve healthcare. Overall, we have multiple, parallel data teams delivering with the Data Mesh approach. Previously, the architecture-driven, single-threaded data teams had been unable to produce a single useful data set in over a year.

A Mesh Solution

Data Mesh-oriented cloud development is much faster than standard data lake and warehouse solution development. By our reckoning, you can reduce the time it takes to get valuable insights from quarters and months to weeks.

The Data Mesh approach also provided our client with many operational improvements:

Lean process improvements

- Helped customer analysts produce insights, and data scientists train predictive models, with unprecedented speed to market.

- The customer was finally able to use its Microsoft Power BI analytics system, which had held no data until the Data Mesh approach quickly started populating it

- 108 dashboard reports were created in a few months; in the past they produced 30 in a year

Lean operational improvements

- Raised its government Medicare rating in the Stars system, because it now submits more data, of higher quality, to Medicare.

- Enabled healthcare executives to quickly determine the optimal service among a wide range of health service choices during the COVID-19 pandemic

- For their inbound call center, analytics from a new Data Product enabled staff to triage a patient’s health needs faster and more safely (e.g. send them to urgent care or have them rest longer at home)

- The quickly produced analytical data sets allowed them to rapidly build a business case for telemedicine, greatly expanding access to care for the rapidly growing COVID-19 patient population

- The rapidly available data sets enabled healthcare data scientists to identify member populations who have lapsed in care (e.g. stopped their medications, didn’t refill prescriptions, stopped measuring blood pressure), or who need care on a daily basis.
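Identifying members who have lapsed in care can start from pharmacy fill history. The sketch below flags a member when their most recent fill of any drug ran out more than a grace period ago. The `PharmacyFill` record, the 15-day default grace period, and the function name are hypothetical. They are not a clinical standard or the customer's actual logic.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class PharmacyFill:
    member_id: str
    drug: str
    fill_date: date
    days_supply: int


def lapsed_members(fills, as_of: date, grace_days: int = 15):
    """Members whose latest fill of some drug ran out more than `grace_days` ago.

    The 15-day grace period is a hypothetical default, not a clinical standard.
    """
    # Keep only the most recent fill per (member, drug) pair.
    latest = {}
    for f in fills:
        key = (f.member_id, f.drug)
        if key not in latest or f.fill_date > latest[key].fill_date:
            latest[key] = f

    # A member lapses if any of their latest fills ran out beyond the grace period.
    lapsed = set()
    for (member_id, _drug), f in latest.items():
        supply_ends = f.fill_date + timedelta(days=f.days_supply)
        if as_of > supply_ends + timedelta(days=grace_days):
            lapsed.add(member_id)
    return sorted(lapsed)
```

For example, a 30-day supply filled on 1 January 2020 runs out on 31 January. With the default grace period, that member is flagged on any date after 15 February.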

What is the Data Mesh?

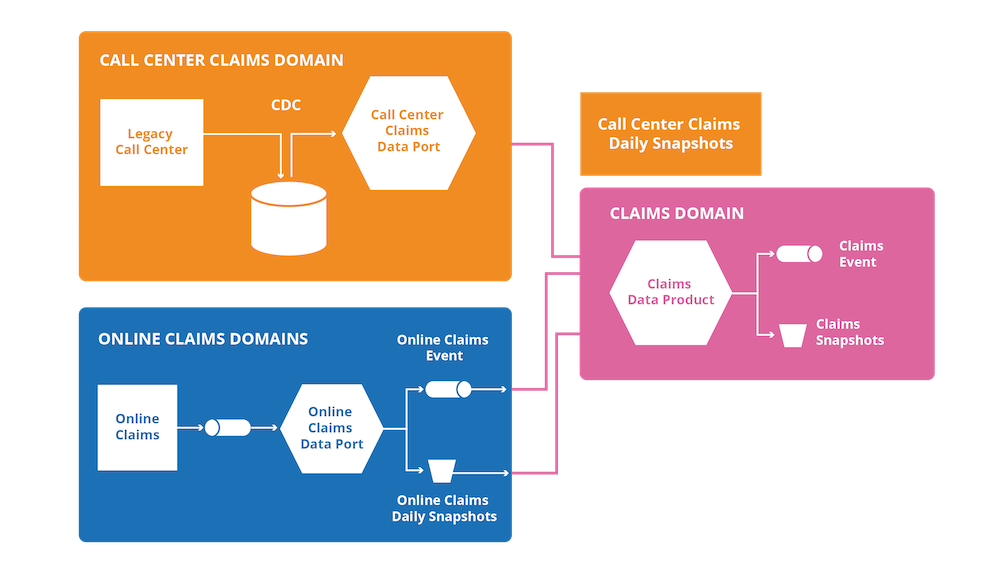

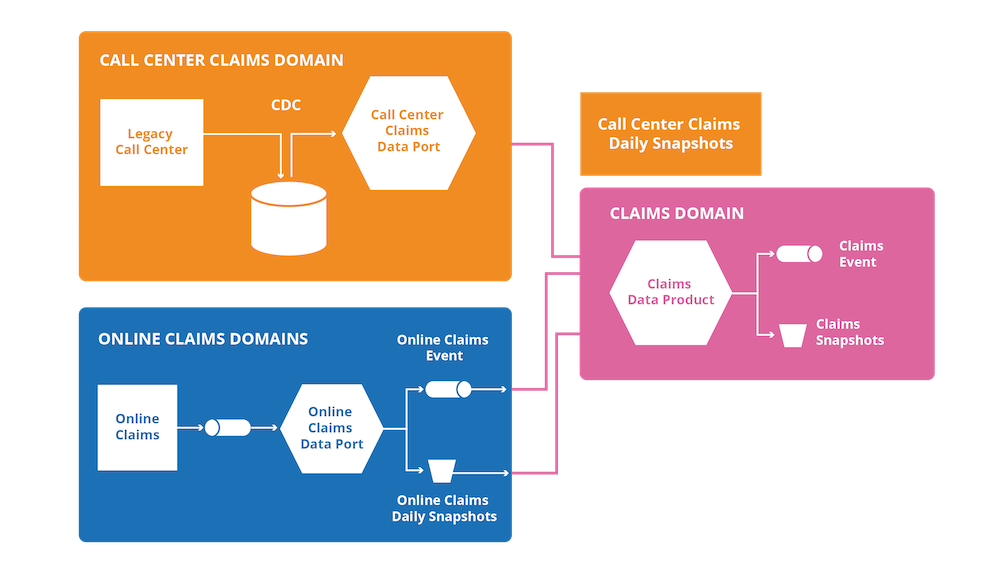

The Data Mesh is a next-generation data engineering approach and platform. It holds that data domains (e.g. business data or business objects) are the first thing to define and discover when quickly delivering a data system for analytics. One example of a data domain is a claims data product holding healthcare patient claims data.

The next important concept is that data should be treated as a product, produced and owned by independent cross-functional product teams with embedded data engineers. This encourages reuse across the organization, and it means the owning team shepherds the product’s maturity over time. Data products should be distributed across the enterprise instead of collected into one big data product, otherwise known as a data lake.

The Data Mesh implementation architecture takes federated governance, standards, and interoperability as its primary architectural guidelines. This architecture has a specific set of features:

- Discoverability

- Addressability

- Self-Describing

- Secure

- Trustworthy

- Interoperable

Together, these concepts push teams to become cross-functional and infrastructure to become interoperable and shared. They also create the opportunity to centralize compliance, security, team design, and development lifecycles.
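One way to make the six listed features concrete is to give each data product a metadata descriptor and register it in a shared catalog. The sketch below maps each feature to a field or method. `DataProductDescriptor`, `DataProductCatalog`, the `dataproduct://` address scheme, and the role check are all hypothetical. They stand in for whatever catalog, addressing, and access-control services a real platform provides.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataProductDescriptor:
    """Hypothetical metadata mapping each listed feature to a concrete field."""
    name: str
    domain: str                     # owning business domain, e.g. "claims"
    owner_team: str                 # cross-functional team that owns the product
    address: str                    # Addressable: stable, unique locator
    schema: dict                    # Self-Describing: column name -> type
    allowed_roles: frozenset        # Secure: roles permitted to read
    freshness_sla_hours: int        # Trustworthy: published freshness commitment
    output_format: str = "parquet"  # Interoperable: an open, shared format
    tags: tuple = ()


class DataProductCatalog:
    """Discoverable: a minimal in-memory registry standing in for a real catalog."""

    def __init__(self):
        self._by_address = {}

    def register(self, product: DataProductDescriptor) -> None:
        # Addresses must be unique so consumers can rely on them.
        if product.address in self._by_address:
            raise ValueError(f"address already registered: {product.address}")
        self._by_address[product.address] = product

    def search(self, term: str):
        """Case-insensitive match on name, domain, or tags."""
        term = term.lower()
        return [
            p for p in self._by_address.values()
            if term in p.name.lower()
            or term in p.domain.lower()
            or any(term in t.lower() for t in p.tags)
        ]

    def resolve(self, address: str, role: str) -> DataProductDescriptor:
        product = self._by_address[address]  # KeyError if the address is unknown
        if role not in product.allowed_roles:
            raise PermissionError(f"role {role!r} may not read {address}")
        return product
```

Keeping this metadata in one shared, federated catalog, while each domain team owns its own products, mirrors the split between centralized standards and distributed ownership described above.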

The Data Mesh implementation uses well-known, common data infrastructure tooling (e.g. Kubernetes and Terraform) as a platform to host, prepare, and serve the data assets. This shared and harmonized data infrastructure (for example, Azure or AWS data services) doesn’t have to come from a single software vendor. As long as the tools work together and are universally accessible to all data product teams, it aligns with the Data Mesh principles.

Data assets and not lakes of data

The prevailing wisdom holds that more data is better and centralization is better, otherwise known as ‘a central version of the truth’. That belief will continue to hinder the building of scalable and relevant analytical systems, because it leads us to assume that simply having more data will solve our problems and help us seize new opportunities. There’s no arguing that acquiring and owning healthcare data can lead to high ROI and critical care analytics. Even so, that data must consist of assets relevant to current use cases and to the data ‘customers,’ and it must be timely enough to capture market opportunities.

The data assets in your Data Mesh will benefit from, and owe their existence to, the intersection of techniques that were instrumental in building modern distributed software at scale. The tech industry at large has adopted these techniques at an accelerated rate, and they have created the massive economies of scale we see at the largest companies.