3 Pointers to Add Agility to Testing

On one of my first projects at Thoughtworks, I found myself confronted with a legacy codebase. The tech stack was Drupal, with lots of PHP to customize the CMS, and a Java-based Selenium 1.0 test suite on the side. The suite had not been built to test the app comprehensively, and its coverage had gaping holes. It consisted of a few UI-driven tests representing user interactions. They took 100 minutes to run and still did not discover critical failures.

Here is how I approached this problem and the lessons I learned along the way.

What’s wrong with the “old” way of testing?

My first focus was on analyzing the existing test suite. I dug deeper to see what functionality was actually covered. What made our suite so brittle? And why did it take more than an hour to run all the tests? Once developers had completed a story, we did a story handover with the Three Amigos (BA, developer, and QA). Then, because I could not fully trust the regression suite, I reviewed the automated tests that had been written and did feature and exploratory testing manually.

This approach caused three problems:

- Responsibility for quality shifted to the QA. I accepted that I, as the QA, was responsible for ensuring quality in the application. Once responsibility has shifted to the QA, other team members are more likely to rely on the QA to verify whether a feature actually works correctly. Soon the team empowered me to sign off stories before they could go live, and I was effectively promoted to the role of “Quality Gate”. While it feels good to be needed by the team, this behavior does not benefit the team. I ended up in this position by trying to do the right thing, which is why it is important to watch out for slipping into it.

- Shallow feedback. As in organizations where QAs are siloed by function and there are no cross-functional teams, I did not look outside of the QA bucket. Because I had little context on the origin and real purpose of a feature, I could only test against its functional requirements. This did little to uncover empty features that serve no real purpose, or errors that had been introduced during the analysis phase.

- Unnecessary waste. When I found significant bugs during the testing phase, I blocked the card by putting a sticky on it, and it stayed blocked until the bug was resolved. By then, the developer with the context of the story had usually moved on to another story while I was testing, so that context was lost. Blocking also kept the story from going live on time and creating business value.

Adding agility to testing

The following pointers helped us overcome the issues listed above and make our testing more agile and value-driven.

#1 Start with yourself - look outside the QA bucket

I started looking outside the “boundaries” of my QA role. I took part in the analysis process by attending meetings and helping to write and review stories. I gave feedback and set testing expectations during story kick-offs. Once I looked outside my QA column, I understood the real purpose of each story, which enabled me to ensure that the feature met that purpose.

Understanding the business value of a story not only helped me find more bugs beyond the pure functional requirements, it also kept many of them from arising at all, because we identified gaps and challenged the suggested solutions before and during development.

#2 Get to the technical core - reduce waste

These efforts significantly sped up stories going through QA, but they did not tackle the problem at its core. We had to change our tech stack, moving away from the CMS and its ice-cream cone test suite toward a proper testing pyramid. We chose Ruby as our new language because it has a mature, active community and has testing built into its DNA. Using the Strangler Pattern, we developed each new feature required by the business in the new tech stack, with testing in mind. This way we introduced valuable automated tests in the areas of change and slowly made our old CMS redundant. We evolved the testing framework along with the needs of the changing application. For functional tests we used Capybara with a headless, in-memory driver, which ran them in only a few seconds because it drives the application directly in memory instead of through a real browser. All tests reached trustworthy coverage and gave feedback within 30 seconds. This enabled me to push for the next step: getting the entire team to have a stake in quality.
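To make the Strangler Pattern more concrete, here is a minimal Ruby sketch of the idea, not our project's actual setup. `StranglerRouter`, `migrate`, and the example paths are hypothetical names chosen for illustration, and the two lambdas stand in for the real legacy CMS and the new application. Each time a feature is rebuilt (with its tests) in the new stack, its path is registered with the routing facade, so traffic moves over one feature at a time until the legacy system has nothing left to serve.

```ruby
# Hypothetical sketch of the Strangler Pattern: a thin routing facade sends
# requests for features that have been rebuilt to the new application and
# everything else to the legacy CMS. All names and paths are illustrative.
class StranglerRouter
  def initialize(legacy:, modern:)
    @legacy = legacy
    @modern = modern
    @migrated = []
  end

  # Register a path prefix whose feature now lives in the new stack.
  # Returns self so calls can be chained.
  def migrate(prefix)
    @migrated << prefix
    self
  end

  # Route a request path to the new app if it belongs to a migrated feature,
  # otherwise fall back to the legacy CMS.
  def call(path)
    handler = migrated?(path) ? @modern : @legacy
    handler.call(path)
  end

  private

  # Match the prefix exactly or as a whole path segment, so "/search" does
  # not accidentally capture "/searchable".
  def migrated?(path)
    @migrated.any? do |prefix|
      path == prefix || path.start_with?("#{prefix}/", "#{prefix}?")
    end
  end
end

# Stand-ins for the two systems; in reality these would be HTTP backends.
legacy_cms = ->(path) { "legacy CMS handled #{path}" }
new_app    = ->(path) { "new app handled #{path}" }

router = StranglerRouter.new(legacy: legacy_cms, modern: new_app)
router.migrate("/search").migrate("/checkout")

puts router.call("/search?q=shoes")  # routed to the new app
puts router.call("/about")           # still served by the legacy CMS
```

In practice this facade is often a reverse proxy or web-server routing rule rather than application code; the key idea is that the routing table grows one feature at a time, and each migrated feature arrives with its own fast automated tests.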

#3 Encourage the culture of quality - it is the team’s responsibility

The previous actions helped to improve the QA process, but they did not challenge my role as the “quality gate”. While I was away for two days, the QA column filled up with cards while everyone else worked on new features. That is a clear symptom to look out for if you are wondering whether you have also become the quality gate on your team. I needed to find a way for stories to move smoothly through QA while I was away.

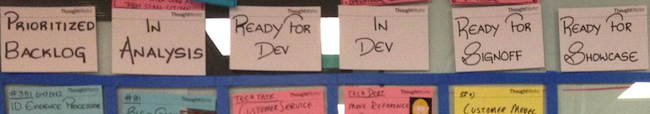

To solve this, I shifted from testing and signing off stories to building a team culture in which everyone is responsible for the quality of the software. I started advocating quality in many aspects of the project, but none of those efforts was as disruptive and effective as removing the QA column, as seen in the picture.

Removing the column removed the safety net of someone checking whether the code works functionally as it is supposed to. That uncertainty caused the developers to take responsibility for their own code. They started focusing on good coverage in the tests written as part of each story, since those tests had become their only safety net.

The Three Amigos meeting now became the final check before showing a story to the product owner for sign-off. Manual tests to prove the feature's functionality were done by the developer pair themselves. Since written code could now be assumed to work functionally, any further testing went toward improving quality even more rather than just checking whether the code worked.

As a QA, I made myself available to pair with developers and help them test their own code. I didn't expect them to find every single bug, but the expectation was set that the code had to work as defined in the acceptance criteria. If any bugs slipped through, we could always write a test for them and deploy a fix quickly.

This relieved me of the constant pressure to push stories through the QA column. I spent the time I gained on the active analysis and development of stories. I took part in the pairing on every story, especially when tests were being written.

Try it Out

Try this approach on your team. I have seen it work well on cross-functional teams; if your team is not cross-functional, making it so should be your first step. On less mature teams, removing the QA column may be too big a step. Instead of removing the column, you could shift its responsibility, for example by adding the rule that anybody on the team can pair with the anchor of a story to do the QA on it. Once this is part of the team culture, the column places the responsibility on the team without removing the safety net. Removing the column entirely could then be a later step in the project, once the team is ready for it.

Let me know about your experiences and thoughts by leaving a comment. Thanks!

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.