Part one of this series discussed how to achieve lower network latency using gRPC. This post expands on load balancing and Envoy Proxy.

Load balancing

Load balancing refers to the efficient distribution of incoming network traffic across a group of backend servers, also known as a server farm or server pool.

A load balancer performs the following functions:

Distributes client requests or network load efficiently across multiple servers

Ensures high availability and reliability by sending requests only to servers that are online

Provides the flexibility to add servers to, or remove them from, the pool as demand varies
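To make these three functions concrete, here is a minimal Python sketch of a round-robin load balancer. It assumes a hypothetical pool of server names and a health flag that the caller sets (a real load balancer would learn health from active checks or failed requests), so treat it as an illustration of the idea rather than how any particular product implements it.

```python
from itertools import count


class RoundRobinBalancer:
    """Round-robin load balancer over a mutable, health-aware server pool.

    Server names are hypothetical strings; health is a flag set by the
    caller instead of an active health check.
    """

    def __init__(self, servers):
        self._servers = list(servers)
        self._healthy = set(self._servers)
        self._counter = count()

    def add(self, server):
        # Scale out: a new server joins the rotation immediately.
        if server not in self._servers:
            self._servers.append(server)
            self._healthy.add(server)

    def remove(self, server):
        # Scale in: drop the server from the pool entirely.
        self._servers.remove(server)
        self._healthy.discard(server)

    def mark_down(self, server):
        self._healthy.discard(server)

    def mark_up(self, server):
        if server in self._servers:
            self._healthy.add(server)

    def pick(self):
        # Only servers that are currently online are eligible.
        candidates = [s for s in self._servers if s in self._healthy]
        if not candidates:
            raise RuntimeError("no healthy servers available")
        return candidates[next(self._counter) % len(candidates)]


if __name__ == "__main__":
    lb = RoundRobinBalancer(["app-1", "app-2", "app-3"])
    print([lb.pick() for _ in range(3)])  # ['app-1', 'app-2', 'app-3']
    lb.mark_down("app-2")
    print([lb.pick() for _ in range(4)])  # ['app-3', 'app-1', 'app-3', 'app-1']
```

Keeping health separate from pool membership lets a server that is temporarily offline rejoin the rotation with `mark_up` without being re-registered.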

Load balancing can be done either by the client (client-side load balancing) or by a dedicated server that load-balances the incoming requests (server-side or proxy load balancing).

Netflix Ribbon is an example of client-side load balancing; examples of proxy load balancing include Nginx, HAProxy, Consul, DNS and Envoy Proxy.

The pros and cons of both proxy and client-side load balancing are listed in Table.1.

| | Proxy load balancing | Client-side load balancing |
|---|---|---|
| Pros | Clients are not aware of backend instances<br>Helps you work with clients whose incoming load cannot be trusted | Provides high performance, because the extra hop through the proxy is eliminated |
| Cons | Since the load balancer is in the data path, higher latency is incurred<br>Load balancer throughput may limit scalability | Clients require complex logic to implement the load balancing algorithm<br>Clients need to keep track of server load and health<br>A separate implementation must be maintained for each client language<br>Clients cannot be trusted to balance load, or the trust boundary must be handled by a look-aside load balancer |

Table.1 Comparison of Proxy and Client-side Load Balancing

Because of the downsides of client-side load balancing, many systems built on a microservices architecture use proxy load balancing.

On one client engagement, we used HAProxy extensively as a load balancer, including for a gRPC service.

Eventually we moved to Envoy Proxy, which at the time was the only load balancer we knew of that supported gRPC.

The following sections explain what Envoy Proxy is and the reasons for our move from HAProxy to Envoy Proxy.

What is Envoy Proxy?

Envoy Proxy is an L7 (Layer 7) proxy and communication bus designed for large modern service-oriented architectures. Layer 7 load balancers operate at the highest level in the OSI model, the application layer (on the Internet, HTTP is the dominant protocol at this layer).

Layer 7 load balancers base their routing decisions on various characteristics of the HTTP header and on the actual contents of the message, such as the URL, the type of data (text, video, graphics), or information in a cookie.
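As a rough illustration of what an L7 routing decision involves, the Python sketch below matches requests on a URL path prefix and on exact header values. Routes are checked in order and the first match wins, which is also how Envoy evaluates the routes in a virtual host. The `Request` and `Route` classes, the header names and the cluster names are all hypothetical; a cookie or content type could be matched the same way.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    path: str
    headers: dict = field(default_factory=dict)


@dataclass
class Route:
    prefix: str
    cluster: str
    # Required header values (exact match); empty means headers are not checked.
    headers: dict = field(default_factory=dict)

    def matches(self, req: Request) -> bool:
        if not req.path.startswith(self.prefix):
            return False
        # HTTP header names are case-insensitive, so compare them lowercased.
        sent = {name.lower(): value for name, value in req.headers.items()}
        return all(sent.get(name.lower()) == value
                   for name, value in self.headers.items())


def pick_cluster(routes, req):
    # Routes are evaluated in order; the first matching route wins.
    for r in routes:
        if r.matches(req):
            return r.cluster
    return None  # no matching route (Envoy answers such requests with a 404)


# Hypothetical route table: the header-specific route must come before the
# catch-all /api route, otherwise it would never be reached.
routes = [
    Route("/video", "video-cluster"),
    Route("/api", "api-beta", headers={"X-Beta": "1"}),
    Route("/api", "api-cluster"),
]
print(pick_cluster(routes, Request("/api/users", {"x-beta": "1"})))  # api-beta
print(pick_cluster(routes, Request("/api/users")))  # api-cluster
```

A Layer 4 balancer sees only addresses and ports, so none of these path or header checks would be available to it.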

Why Envoy Proxy?

On one project I was part of, our system started scaling (we acquired more customers, which meant higher load). My team and I saw a few servers degrade in performance: some were receiving more requests than others and therefore reported higher response times.

Further investigation pointed to HAProxy’s inability to deal with HTTP/2 multiplexing. HAProxy was built to load balance HTTP traffic but, at the time, did not support HTTP/2 traffic.

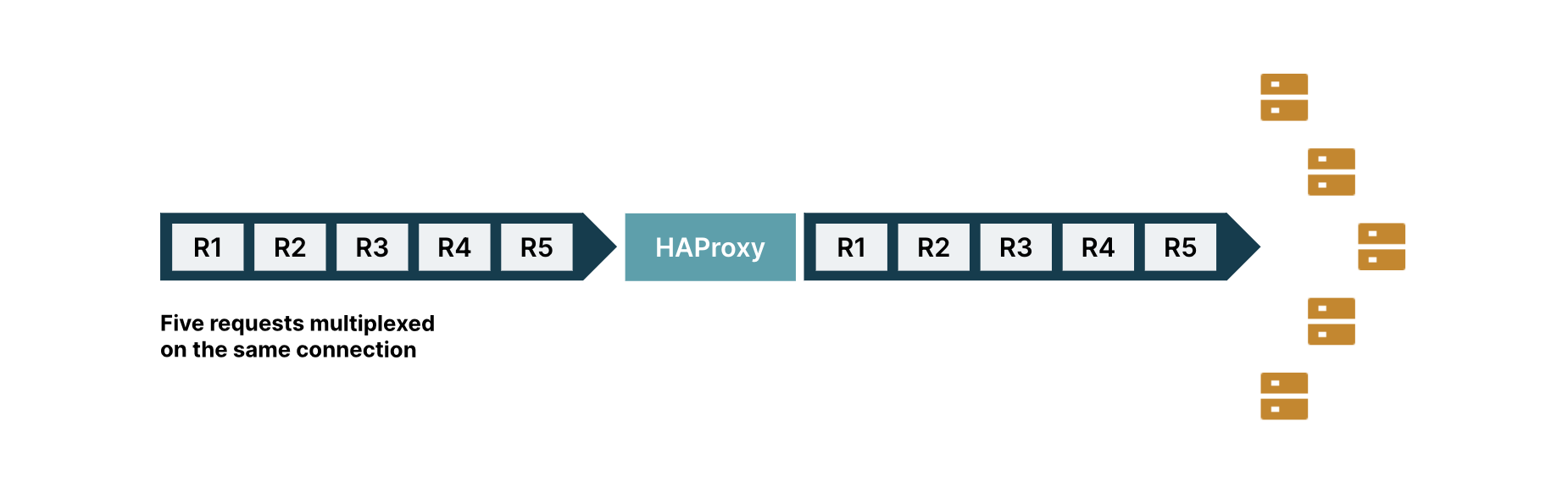

Consider a single connection from a client to the HAProxy server, as shown in Fig.1, with five requests multiplexed on the same connection.

HAProxy, built for HTTP/1.x-style load balancing, expects a client to create a new HTTP connection for every request. For each such incoming connection, HAProxy creates a corresponding outgoing connection to a backend server.

Even though there are five requests, HAProxy sees only one connection, because the requests are multiplexed over it. It therefore forwards all of them to the same backend server, which now has to serve five requests instead of one.

Fig.1 Load Balancing using HAProxy Server

Envoy solved this problem by supporting HTTP/2-based load balancing, that is, balancing each request rather than each connection.

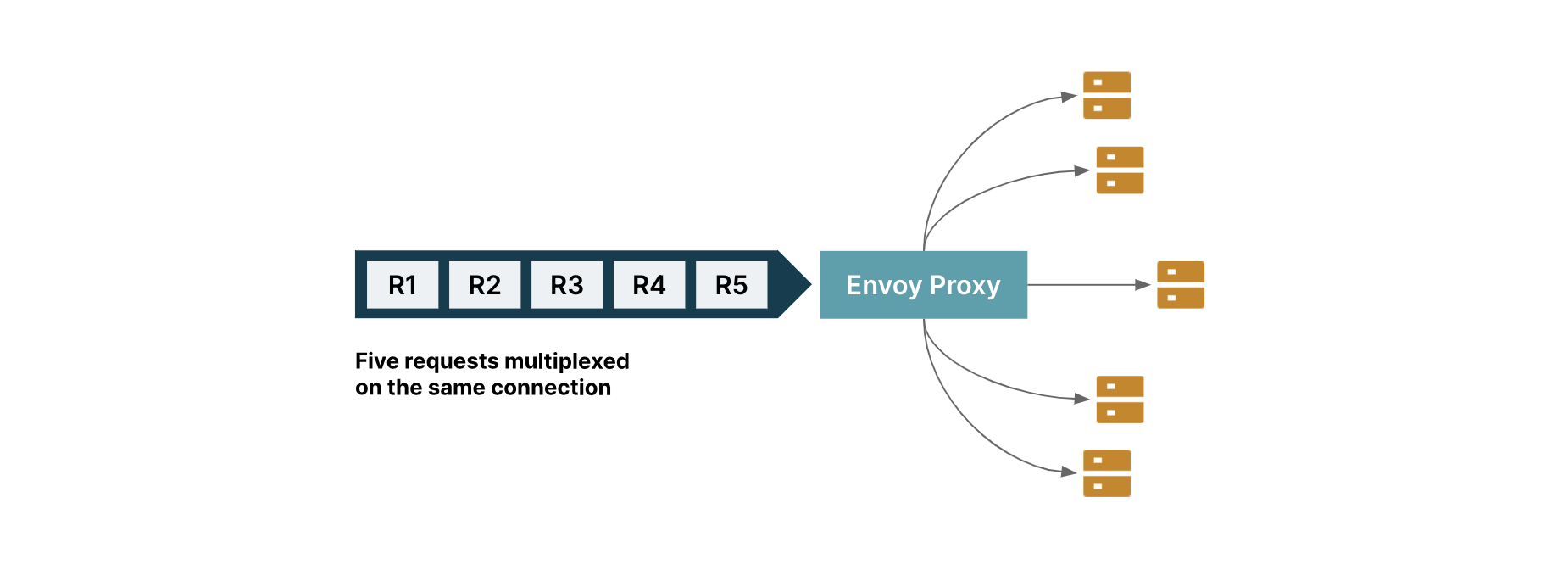

Consider the same example as above: a single connection from a client to the Envoy Proxy server, as shown in Fig.2, with five requests multiplexed on it.

Unlike HAProxy, Envoy recognizes the five multiplexed requests and load-balances each one individually, dispatching them to different backend servers over its own HTTP/2 connections to those servers.

Fig.2 Load Balancing using Envoy Proxy
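The difference between Fig.1 and Fig.2 can be reproduced with a small Python simulation. It assumes round-robin selection and five hypothetical backends, and it ignores Envoy's upstream connection pooling; the only thing it models is whether the balancing decision is made once per connection (the HAProxy behaviour described above) or once per request.

```python
from collections import Counter
from itertools import cycle


def connection_level(connections, backends):
    """Pick one backend per connection; every request on it follows."""
    rr = cycle(backends)
    load = Counter()
    for requests in connections:
        backend = next(rr)
        load[backend] += len(requests)
    return load


def request_level(connections, backends):
    """Pick a backend for every request (HTTP/2 stream) independently."""
    rr = cycle(backends)
    load = Counter()
    for requests in connections:
        for _ in requests:
            load[next(rr)] += 1
    return load


backends = ["backend-1", "backend-2", "backend-3", "backend-4", "backend-5"]
# One client connection carrying five multiplexed requests, as in Fig.1 and Fig.2.
connections = [["req-1", "req-2", "req-3", "req-4", "req-5"]]

print(dict(connection_level(connections, backends)))  # {'backend-1': 5}
print(dict(request_level(connections, backends)))
# {'backend-1': 1, 'backend-2': 1, 'backend-3': 1, 'backend-4': 1, 'backend-5': 1}
```

With long-lived gRPC connections the gap grows over time: a connection-level balancer keeps sending every new request on an existing connection to the backend chosen when that connection was opened.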

Deep dive into Envoy Proxy

The Envoy Proxy configuration primarily consists of listeners, filters and clusters. Let's look at how to configure them in order to achieve the desired load balancing.

Listeners

A listener is a named network location (for example, a port or a Unix domain socket) on which Envoy listens and to which downstream clients connect. Envoy can expose one or more listeners, as shown in Fig.3.

"listeners": [{
  "address": {
    "socket_address": {
      "address": "127.0.0.1",
      "port_value": 7777
    }
  }
}]

Fig.3 Envoy listener configuration to listen on port 7777 on localhost

Filters

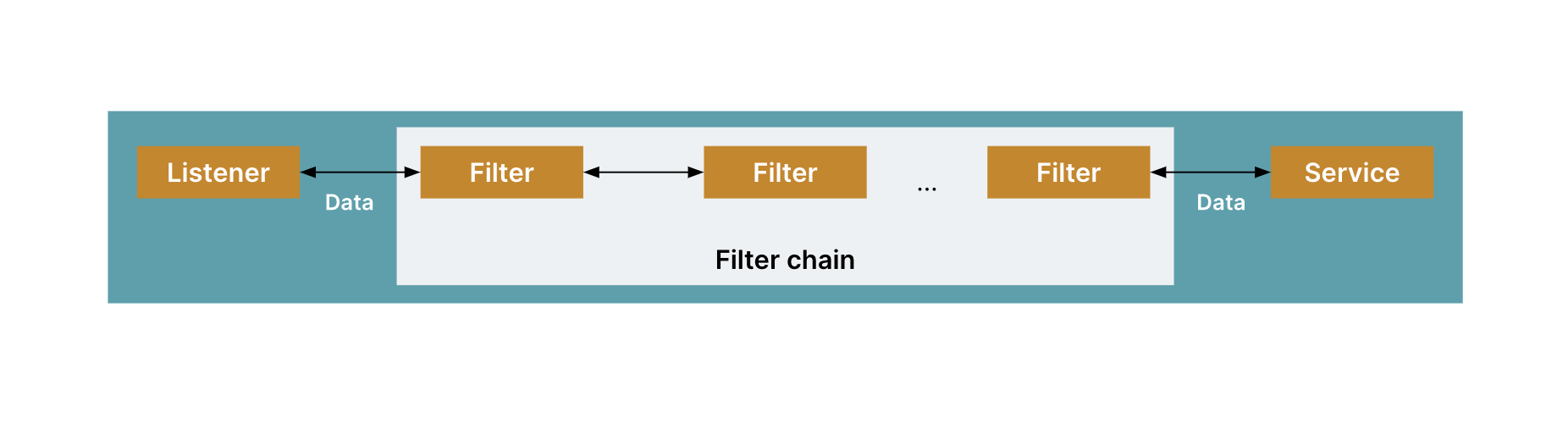

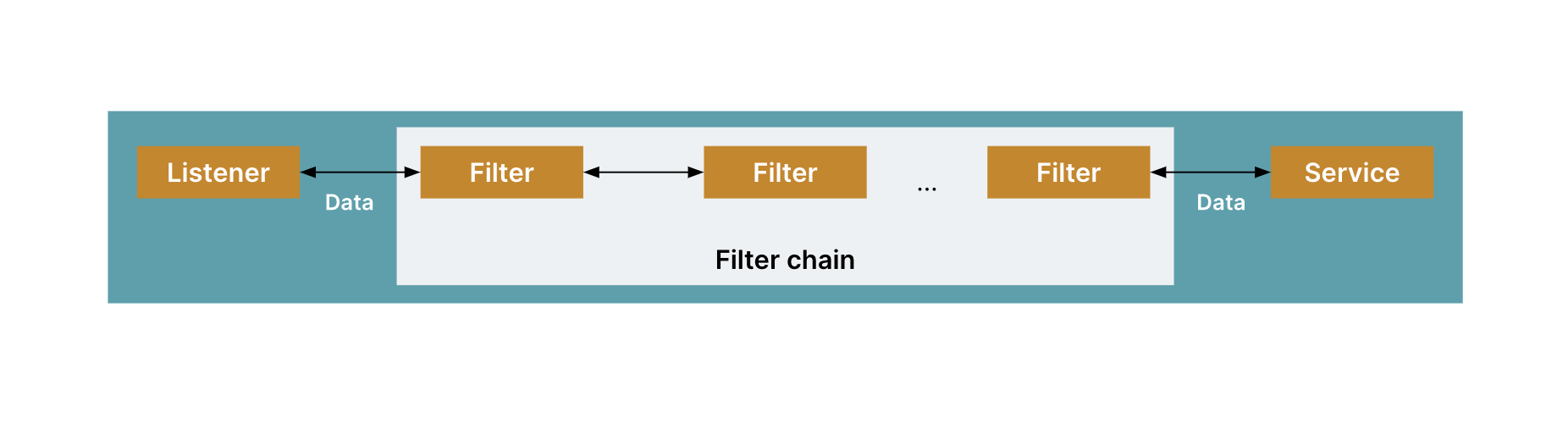

A set of filters tells Envoy how it should process the messages it receives. Envoy supports Listener filters, Network (L3/L4) filters and HTTP filters.

Fig.4 The flow of traffic through Envoy Proxy, with the data flow enhanced by filters

Listener filters are processed before the network-level filters and have the opportunity to manipulate the connection metadata, usually to influence how the connection is processed by later filters or clusters.

Network (L3/L4) filters form the core of Envoy connection handling. There are three types of network filters:

Read filters are invoked when Envoy receives data from a downstream connection

Write filters are invoked when Envoy is about to send data to a downstream connection

Read/Write filters are invoked both when Envoy receives data from a downstream connection and when it is about to send data to it

HTTP filters can operate on HTTP level messages without any knowledge of the underlying physical protocol (HTTP/1.1, HTTP/2, etc.) or multiplexing capabilities.

"filters": [{
  "name": "envoy.http_connection_manager",
  "config": {
    "stat_prefix": "ingress_http",
    "route_config": {
      "name": "local_route",
      "virtual_hosts": [{
        "name": "backend-1",
        "domains": ["*"],
        "routes": [{
          "match": {
            "prefix": "/booking"
          },
          "route": {
            "cluster": "BookingOMS"
          }
        }]
      }]
    },
    "http_filters": [{
      "name": "envoy.router",
      "config": {}
    }]
  }
}]

Fig.5 An example of Envoy filters
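The filter chain can be pictured as an ordered list of functions that each either pass the request on or stop the chain. The Python sketch below is a simplified model, not Envoy's C++ filter API: Envoy's filters return statuses such as Continue and StopIteration, and a stopped filter can later resume iteration, whereas here STOP simply means the filter has produced the final response. The two filters before the router are hypothetical, and `router` stands in for `envoy.router` from Fig.5.

```python
from enum import Enum


class Status(Enum):
    CONTINUE = "continue"  # hand the request to the next filter
    STOP = "stop"          # in this sketch: the filter produced the response


def add_request_id(req):
    # Hypothetical decorating filter: annotate the request, then continue.
    req["headers"].setdefault("x-request-id", "generated-id")
    return Status.CONTINUE, None


def require_token(req):
    # Hypothetical auth filter: short-circuit with a 401 if no token is sent.
    if "authorization" not in req["headers"]:
        return Status.STOP, (401, "missing token")
    return Status.CONTINUE, None


def router(req):
    # Terminal filter, playing the role of envoy.router in Fig.5.
    if req["path"].startswith("/booking"):
        return Status.STOP, (200, "forwarded to BookingOMS")
    return Status.STOP, (404, "no route")


def run_chain(filters, req):
    # Call each filter in order until one stops the chain.
    for f in filters:
        status, response = f(req)
        if status is Status.STOP:
            return response
    return (500, "chain ended without a terminal filter")


chain = [add_request_id, require_token, router]
print(run_chain(chain, {"path": "/booking/42", "headers": {"authorization": "t"}}))
# (200, 'forwarded to BookingOMS')
print(run_chain(chain, {"path": "/booking/42", "headers": {}}))
# (401, 'missing token')
```

Because filters run in order, placing an authorization filter before the router means rejected requests never reach an upstream cluster.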

Clusters

A cluster represents one or more logically similar upstream hosts, to which Envoy can proxy incoming requests. Envoy discovers the members of a cluster via service discovery.

It optionally determines the health of cluster members via active health checking. The cluster member that Envoy routes a request to is determined by the load balancing policy.

Envoy's documentation describes the load balancing policies it supports, such as round robin, least request and ring hash.
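To give a feel for one of these policies, the sketch below models least-request selection with "power of two choices": sample two hosts at random and send the request to whichever has fewer active requests. This is what Envoy's least request policy does by default when all host weights are equal. The host names and in-flight counts are hypothetical inputs; Envoy tracks active requests itself.

```python
import random


def least_request_p2c(active_requests, rng=random, choices=2):
    """Pick a host using 'power of two choices' least-request selection.

    active_requests maps host name -> number of in-flight requests.
    Samples `choices` distinct hosts at random and returns the least loaded.
    """
    hosts = list(active_requests)
    if not hosts:
        raise ValueError("no hosts available")
    sample = rng.sample(hosts, min(choices, len(hosts)))
    return min(sample, key=lambda h: active_requests[h])


# Simulate 300 requests that never complete, so load only accumulates.
rng = random.Random(7)
load = {"host-a": 0, "host-b": 0, "host-c": 0}
for _ in range(300):
    load[least_request_p2c(load, rng)] += 1
print(load)
```

Sampling two hosts instead of scanning all of them keeps each decision O(1) while still steering traffic away from overloaded hosts.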

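"clusters": [{
  "name": "BookingOMS",
  "type": "STRICT_DNS",
  "connect_timeout": "1s",
  "http2_protocol_options": {},
  "hosts": [{
    "socket_address": {
      "address": "BookingService",
      "port_value": 8080
    }
  }]
}]

Fig.6 Envoy cluster configuration

Fig.6 shows an example of an Envoy cluster configuration, where you configure a cluster named BookingOMS. Any request routed to this cluster is sent to BookingService on port 8080. The type field selects how Envoy discovers the cluster's members, for example STATIC, STRICT_DNS, LOGICAL_DNS or EDS; STRICT_DNS is used here. Because the backend serves gRPC, http2_protocol_options tells Envoy to use HTTP/2 when talking to the upstream hosts.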

Dynamic routing with Envoy Proxy

After my team and I had successfully solved the load balancing of incoming gRPC traffic with Envoy, we were confronted with another business problem.

The system was to receive a read request on every app launch, i.e., from the Home Page. This meant handling an additional ~30k read requests per minute (roughly 500 requests per second).

To avoid facing the same response time issues as before, we decided to deploy a dedicated read-only cluster for the additional read traffic.

We introduced a request header to identify requests made from the Home Page. Routing these requests to the read-only cluster then needed only two changes in Envoy:

Cluster configuration for the read-only cluster

Filter configuration to route the requests from the Home Page to the read-only cluster

The team and I didn’t want to route all the traffic from the Home Page to the read-only cluster at once. Instead, we wanted to roll out the new read-only endpoints incrementally.

Envoy Proxy helped us solve this problem with ease, because it supports weighted clusters: a single route can split its traffic across several upstream clusters in proportion to configured weights.

As the configuration in Fig.7 shows, we began by routing 5% of the Home Page traffic to the read-only cluster and gradually increased that share until 100% of it went to the read-only cluster.

{
  "match": {
    "prefix": "/api/v1",
    "headers": [{
      "name": "Read-only",
      "exact_match": "true"
    }]
  },
  "route": {
    "weighted_clusters": {
      "runtime_key_prefix": "routing.traffic_split.app_home",
      "clusters": [
        { "name": "service-readonly", "weight": 5 },
        { "name": "service", "weight": 95 }
      ]
    }
  }
}

Fig.7 Envoy incremental deploys
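The 5/95 split in Fig.7 can be illustrated with a short Python sketch of weighted selection: draw a number in [0, total weight) and walk the cumulative weights until it is covered. This models the idea only and is not Envoy's code; the sample size of 10,000 requests is arbitrary.

```python
import random


def pick_weighted_cluster(clusters, rng=random):
    """Choose a cluster name from (name, weight) pairs in proportion to weight."""
    total = sum(weight for _, weight in clusters)
    point = rng.randrange(total)  # uniform in [0, total)
    running = 0
    for name, weight in clusters:
        running += weight
        if point < running:
            return name
    raise RuntimeError("unreachable when all weights are non-negative")


rollout = [("service-readonly", 5), ("service", 95)]
rng = random.Random(42)
hits = sum(pick_weighted_cluster(rollout, rng) == "service-readonly"
           for _ in range(10_000))
print(f"read-only share: {hits / 10_000:.1%}")  # close to 5%
```

Increasing the rollout is then a matter of moving weight from service to service-readonly. In Envoy, the runtime_key_prefix in Fig.7 exists for this purpose: the router looks up each cluster's weight under a runtime key made of that prefix, a dot and the cluster name, falling back to the configured weight, so the split can be adjusted through the runtime rather than by editing the route.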

Here is a summary of what I’ve discussed over this two-part series on scaling microservices:

gRPC, built on HTTP/2, provides us with a high-speed communication protocol that can take advantage of bi-directional streaming, multiplexing and more.

Protocol Buffers have library implementations in many languages. Their binary format gives a much smaller footprint than JSON or XML payloads.

When targeting the lowest response times, opt for gRPC as your communication protocol.

Envoy is an L7 proxy and communication bus designed for large-scale modern service-oriented architectures. It supports load balancing of both HTTP and gRPC requests.

Envoy provides a rich set of features through its built-in filters, which can be enabled quickly through listener configuration.

The filter chain paradigm is a powerful mechanism, and Envoy lets users implement their own filters by extending its API. The dynamic routing feature helps one roll out endpoints that are still under pilot or experimentation.

gRPC and Envoy offer powerful ways to improve network latency and scale one’s backend microservices.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.