The software development ecosystem is constantly changing, with a consistent stream of innovation in tools, frameworks and techniques alongside an unpredictable market that requires businesses to constantly rethink and revise their priorities. Fortunately, evolutionary architecture principles offer an effective way to keep pace with a rapidly changing environment, supporting incremental evolution through “fitness functions.” Typically, fitness functions are codified as architecture, unit, integration and other tests, alongside monitoring, alerting systems and metrics. Together, these functions make it possible to assess the integrity of the architecture's cross-functional requirements as it evolves.
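As an illustration of a fitness function codified as a test, here is a minimal Python sketch of an architecture test that enforces a layering rule. The module names, the hand-written dependency map and the rule itself are hypothetical; in a real codebase the map would be extracted from the source by a dependency-analysis tool rather than written by hand.

```python
from typing import Dict, List, Set

# Hypothetical dependency map: module -> modules it imports.
# In practice this would be extracted from the codebase, not hand-written.
MODULE_IMPORTS: Dict[str, Set[str]] = {
    "web.controllers": {"domain.orders", "domain.customers"},
    "domain.orders": {"domain.customers"},
    "domain.customers": set(),
}

# Hypothetical layering rule: code in the "domain" layer must never
# import from the "web" layer.
FORBIDDEN = [("domain.", "web.")]


def layering_violations(imports: Dict[str, Set[str]]) -> List[str]:
    """Return a description of every import that breaks a forbidden rule."""
    violations = []
    for module, deps in sorted(imports.items()):
        for source_prefix, target_prefix in FORBIDDEN:
            if module.startswith(source_prefix):
                for dep in sorted(deps):
                    if dep.startswith(target_prefix):
                        violations.append(f"{module} -> {dep}")
    return violations


def test_domain_does_not_depend_on_web() -> None:
    # The fitness function: fails the build as soon as the rule is broken.
    assert layering_violations(MODULE_IMPORTS) == []


if __name__ == "__main__":
    test_domain_does_not_depend_on_web()
    print("fitness function passed")
```

Run as part of the regular test suite, a check like this turns an architectural intent into something the pipeline verifies on every change.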

We believe the same concept can, and should, be applied to the supporting testing strategy, an approach we call evolutionary testing strategy. It exposes issues early in the project lifecycle and helps close gaps not only in the product but also in processes and organizational structures. In this article, we present a high-level approach to evolutionary testing strategy by applying lessons from the architecture domain to the testing domain.

Let’s begin with some concrete examples of why the test strategy needs to evolve.

Why you need an evolutionary test strategy: Six exemplary scenarios

Keeping your test strategy static is like locking one wheel of a tricycle while the other two (the business requirements and the software architecture) keep moving! The result will not be harmonious.

Yet, as an industry, we have lagged behind in evolving the processes, roles and responsibilities behind our testing strategies. For example, Architecture Decision Records (ADRs) do not detail how a decision might affect the associated testing process or test architecture. Enterprises rarely hire a test architect, and rarer still is a process that brings a test architect and a software architect together to discuss how a design pivot affects the test architecture.

This cultural misalignment of the testing strategy is why enterprises often end up with products of insufficient or degrading quality. Let’s explore how these challenges manifest themselves in practice.

#1 Five-year legacy modernization

Imagine an enterprise setting out to modernize its legacy technology, the core of which was built 30 to 40 years ago. A common approach is to create a five-year roadmap for feature delivery, supported by a futuristic high-level technical architecture, a set of popular and best-fit tool choices, and a testing strategy (written mainly to secure budget). Inevitably, the understanding of the domain, the complexity of the features and the development process will evolve over five years of execution. So why shouldn’t the testing strategy?

The testing strategy created to support such a five-year roadmap is likely to offer only a very high-level view, consisting of whatever is known at that moment about the hundreds (possibly thousands) of features inside the legacy systems, gathered from interviews with subject-matter experts because documentation may be missing or out of date. It will almost certainly need to evolve: the test architecture in step with the software architecture, the testing processes in step with changes to team structure, and the technical choices as innovation reshapes the technical landscape.

#2 Changes in business priorities

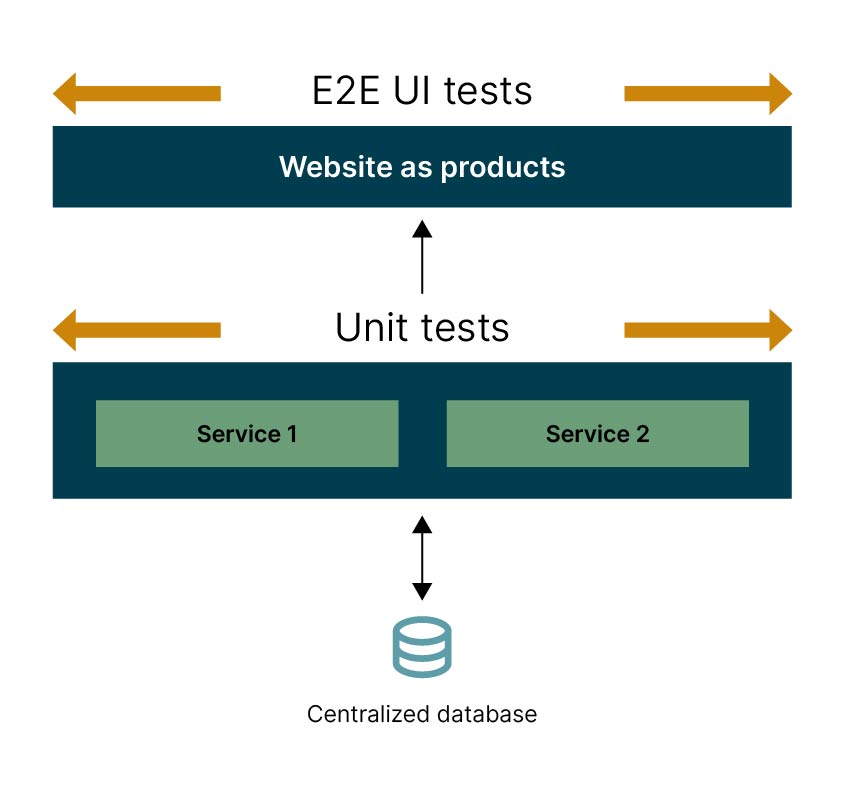

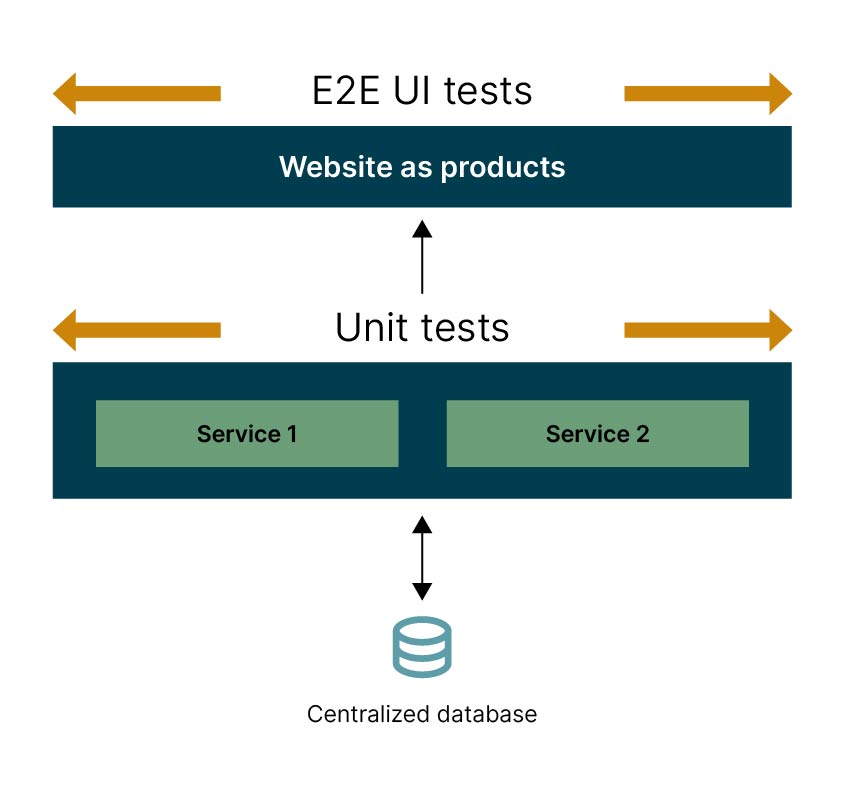

Let’s say your team is building a website backed by a couple of services. In the initial plan, you probably proposed a test architecture that prescribed unit tests and UI-driven functional tests. Midway through implementation, you learn that a competitor has launched a similar product that is API-focused, with no web UI. Customers, too, have lost interest in the UI and bought into an integration vision built on APIs. To regain ground, the business no longer wants to sell the website as a product; it has pivoted its go-to-market strategy to sell the underlying APIs as the product instead!

Figure 1. Business priority changes must affect your test strategy

This may seem like a no-cost pivot for development, but for testing it is substantial: the whole user experience and value proposition are different. The test strategy has to evolve with it, which means altering the test architecture, re-examining testing outcomes and redefining tests for the API layer.

#3 Software architecture change

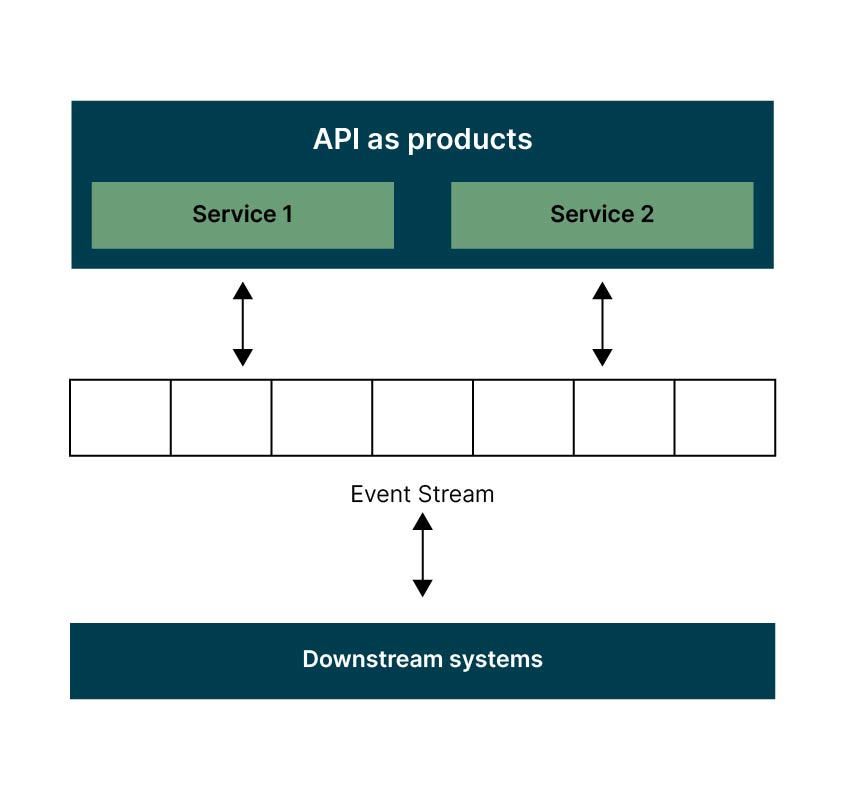

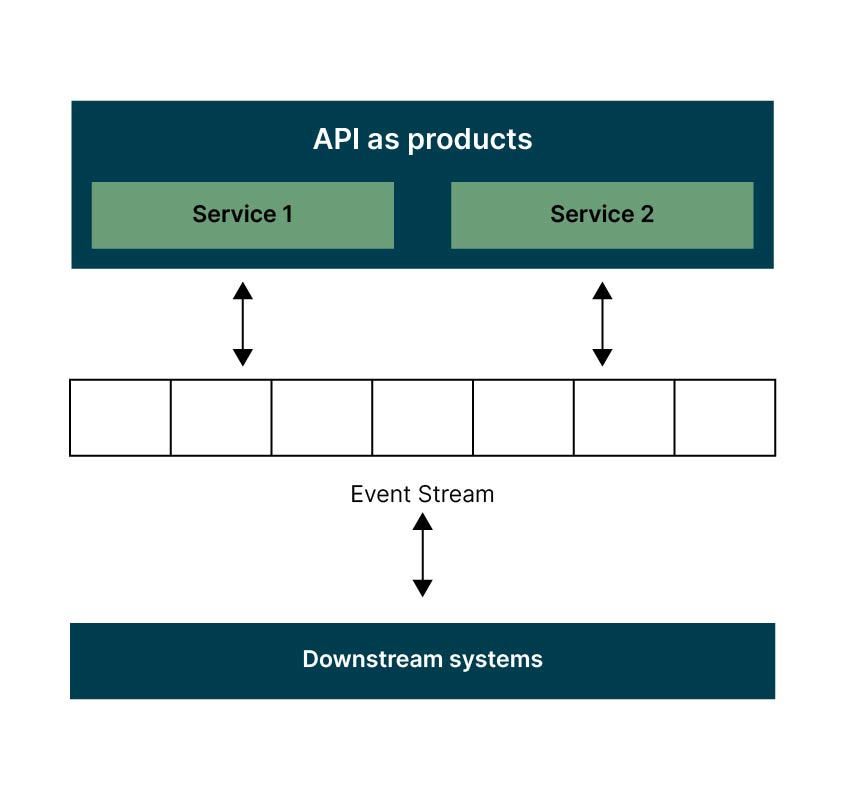

Extending the example above, suppose the two services communicate via a shared database. As the software architecture evolves, the database is replaced by an event stream to support loosely coupled downstream communication.

Even if you had already changed the test strategy to add API tests, those tests would not cover this architecture change. It needs new test cases for the asynchronous behavior and failure modes that the eventing technology introduces, such as delayed, duplicated or out-of-order events. Unless you think holistically about your testing strategy, these nuances are easy to overlook.

Figure 2. Software architecture changes must affect your test strategy

To successfully manage this pivot, you need an evolutionary approach that invites the team to pause and understand the new technology and system behaviors. Doing this will allow the team to add sufficient observability and revise the test architecture proactively.
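Tests written for the shared-database design often read data back immediately after a write. With an event stream in between, such a test becomes flaky, because the downstream view is only eventually consistent. Below is a minimal sketch of one common remedy, polling with a deadline. It uses an in-memory `queue.Queue` and a thread as a stand-in for the real event stream and consumer; `OrderProjection`, `wait_until` and the event fields are hypothetical names chosen for illustration.

```python
import queue
import threading
import time
from typing import Callable, Dict, Optional


def wait_until(condition: Callable[[], bool], timeout: float = 2.0, interval: float = 0.05) -> bool:
    """Poll `condition` until it is true or `timeout` seconds have elapsed."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if condition():
            return True
        time.sleep(interval)
    return condition()  # one last check at the deadline


class OrderProjection:
    """Hypothetical downstream read model built from order events."""

    def __init__(self) -> None:
        self._orders: Dict[str, str] = {}
        self._lock = threading.Lock()

    def handle(self, event: Dict[str, str]) -> None:
        with self._lock:
            self._orders[event["order_id"]] = event["status"]

    def status_of(self, order_id: str) -> Optional[str]:
        with self._lock:
            return self._orders.get(order_id)


def run_consumer(stream: "queue.Queue[Optional[Dict[str, str]]]", projection: OrderProjection) -> None:
    """Stand-in for a real event consumer: apply events until a None sentinel arrives."""
    while True:
        event = stream.get()
        if event is None:
            break
        time.sleep(0.1)  # simulated broker/processing delay
        projection.handle(event)


def test_order_eventually_visible_downstream() -> None:
    stream: "queue.Queue[Optional[Dict[str, str]]]" = queue.Queue()
    projection = OrderProjection()
    consumer = threading.Thread(target=run_consumer, args=(stream, projection), daemon=True)
    consumer.start()

    stream.put({"order_id": "42", "status": "PLACED"})
    # Asserting immediately here would be flaky because delivery is asynchronous;
    # instead, wait (up to a deadline) for the downstream view to catch up.
    assert wait_until(lambda: projection.status_of("42") == "PLACED")

    stream.put(None)
    consumer.join(timeout=1.0)
```

The timeout and polling interval are placeholders; in practice they should reflect the latency you actually expect from the eventing platform. Duplicated or out-of-order delivery would need cases of their own, for example publishing the same event twice and asserting that the projection is unchanged.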

#4 Cross-functional testing

Another critical factor affected by this pivot is application performance. Unless you revise the test strategy to add appropriate performance tests at the API layer (or at least ensure you have the capacity to add them later), you may not be able to go live in the stipulated time without significant risk.
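A performance test at the API layer can itself act as a fitness function. The minimal sketch below assumes latency samples have already been collected by a load-testing tool run against one endpoint, and checks the 95th-percentile latency (nearest-rank method) against a budget; the 300 ms value and the function names are hypothetical.

```python
import math
from typing import Sequence

# Hypothetical latency budget for one API endpoint, in milliseconds.
P95_BUDGET_MS = 300.0


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty sample list (0 < pct <= 100)."""
    if not samples:
        raise ValueError("at least one sample is required")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def p95_within_budget(latencies_ms: Sequence[float], budget_ms: float = P95_BUDGET_MS) -> bool:
    """Performance fitness function: the 95th-percentile latency must not exceed the budget."""
    return percentile(latencies_ms, 95) <= budget_ms


if __name__ == "__main__":
    # In practice these samples would come from a load-test run against the API.
    samples = [120.0, 140.0, 95.0, 310.0, 180.0, 150.0, 130.0, 160.0, 170.0, 110.0]
    print("p95:", percentile(samples, 95), "ms; within budget:", p95_within_budget(samples))
```

A pipeline step could fail the build whenever `p95_within_budget` returns `False`, so performance regressions surface long before the go-live date.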

#5 Team structure changes

Let’s say that, having listened to your concerns on the aforementioned risks, a considerate business team agrees to fund one extra team to accelerate development. Now, each team will focus on a single service’s development independently. This brings its own complexities.

Until now, quality engineers knew the features of both services and ensured that changes to one did not affect the overall domain. After the reorganization, however, they will be only partially aware (or, worse, completely unaware) of the features the other team develops.

As a result, you will need to evolve your testing processes: embed more collaboration ceremonies in day-to-day testing practice, and define how the teams will collaborate on and own the system integration tests. Unfortunately, these changes are often overlooked until someone discovers integration defects during the release cycle and the process is fixed reactively.
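One technique that may help make cross-team ownership explicit is consumer-driven contract testing: the consuming team publishes the fields it relies on, and the providing team checks its responses against that contract in its own pipeline. Dedicated tools such as Pact exist for this; the sketch below only illustrates the idea with a hand-rolled check, and the contract and field names are hypothetical.

```python
from typing import Any, Dict, List

# Hypothetical contract published by the consuming team: the fields of an
# Orders API response it relies on, and the Python type it expects for each.
ORDERS_CONTRACT: Dict[str, type] = {
    "order_id": str,
    "status": str,
    "total_cents": int,
}


def contract_violations(response: Dict[str, Any], contract: Dict[str, type]) -> List[str]:
    """List every way a provider response breaks the consumer's contract.

    Extra fields are allowed, so the provider can add data without breaking consumers.
    """
    problems = []
    for field, expected in contract.items():
        if field not in response:
            problems.append(f"missing field: {field}")
        elif not isinstance(response[field], expected):
            actual = type(response[field]).__name__
            problems.append(f"{field}: expected {expected.__name__}, got {actual}")
    return problems
```

Because the consumer owns the contract and the provider runs the check, a breaking change shows up in the provider team's build rather than as an integration defect late in the release cycle.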

#6 Tech industry advancements

Technologies evolve rapidly. If you don’t keep up, you might discover that you've become "legacy" while you're still developing! Take the recent Appium 2.0 release, for example: if you’ve built your test suite on Appium 1.x, that version is no longer supported, so you must evolve your test strategy either to adopt Appium 2.0 or to move to other tools. The same applies to newer techniques such as test containers, AI/ML-based reporting and so on.

The scenarios above show that treating testing tactically, as a check on whatever has already been developed, can cause significant problems for the quality of the delivered product. An evolutionary testing strategy helps not only test engineers but also development teams and the wider business, because it builds quality into the software proactively instead of leaving it to a casual, reactive approach.

How to adopt an evolutionary testing strategy

While an evolutionary testing strategy might look overwhelming if you haven’t used it before, it doesn’t have to be. We’ve identified some key actions to help you get started and adopt an evolutionary testing strategy.

Version everything

At a minimum, test suites must be version controlled. This applies to every type of test, not just the unit and integration tests written by developers. Many teams still have end-to-end or API tests that live on a test engineer's local machine and run only when that engineer chooses to run them. This needs to change: those tests should be version controlled as well.

Document your versioning strategy

Tie your test suites to a versioned test strategy, and tie the test strategy's versions to your architecture versions. The benefit is that as the architecture moves from one version to the next, your test strategy evolves with it.

Capture test evolution impacts in your ADRs

In Architecture Decision Records (ADRs) and/or Business Decision Records (BDRs), make it mandatory to record how each decision affects the test strategy. Even if a decision requires no change to the testing strategy, record that too; it should always be an explicit consideration.
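One way to make this consideration hard to skip is to check it automatically wherever your ADRs are stored. The sketch below assumes ADRs are Markdown files that use a hypothetical "Test strategy impact" heading; the heading name and both functions are illustrative, not part of any ADR standard.

```python
import re
from typing import Dict, List, Optional

# Hypothetical ADR convention: every record has a Markdown heading named
# "Test strategy impact" followed by at least one non-blank line
# (which may simply say "No change").
SECTION = re.compile(r"^#+[ \t]*Test strategy impact[ \t]*$", re.IGNORECASE | re.MULTILINE)


def impact_section(adr_text: str) -> Optional[str]:
    """Return the body of the 'Test strategy impact' section, or None if it is missing or empty."""
    match = SECTION.search(adr_text)
    if match is None:
        return None
    body_lines = []
    for line in adr_text[match.end():].splitlines():
        if line.startswith("#"):
            break  # the next heading ends the section
        body_lines.append(line)
    body = "\n".join(body_lines).strip()
    return body or None


def adrs_missing_impact(adrs: Dict[str, str]) -> List[str]:
    """Names of ADRs (file name -> contents) that do not record a test strategy impact."""
    return sorted(name for name, text in adrs.items() if impact_section(text) is None)
```

Failing the documentation build when `adrs_missing_impact` returns a non-empty list keeps "no change" an explicit, recorded decision rather than a silent omission.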

Create clear lines of accountability

Create separate roles such as a Test Architect or Head of QA that have the capacity to think beyond day-to-day tasks to drive this initiative. If that isn't possible in your current environment, at least ensure all responsibilities and accountabilities are documented and understood.

Include testers in your teams

It’s important to follow agile practices and include testers in solution design and business discussions. The evolution of the test strategy should be discussed alongside any solution/business changes. It is also useful to ensure your plans include the tools and data the test strategy will need.

Plan with contingency

Development efforts have a habit of consuming any buffers put in place during planning. That’s why it’s particularly important to allocate a testing contingency in your estimates. Track where you spend it; doing so makes it much easier to perform a cost-benefit analysis and mitigate risk when changes actually are required.

Evangelize and communicate

Along with the practices above, raise awareness in your teams of how changing business requirements can have unintended implications for architecture and testing. For instance, discuss such misses in team retrospectives, bring testing strategy discussions into tech huddles, and evangelize similar practices suited to your team's size and culture, so that everyone is on board with the importance of evolving the testing strategy!

In summary, an evolutionary testing strategy should produce better business outcomes by creating a harmonious ecosystem in which the testing strategy is proactively aligned with business requirements and architecture changes. It ensures that fitness functions are not simply ivory-tower artifacts but achievable, business-driven guideposts. It also empowers test engineers to add demonstrable strategic value by aligning testing with the architecture, all the way through to business outcomes.