AI is reshaping enterprise operations. IT leaders see the opportunity to reduce toil, resolve issues faster, and bring intelligence into everyday workflows. Yet the term AIOps often blurs two fundamentally different concepts.

To cut through the noise, we need a clear distinction: AI for Ops versus Ops for AI. They may sound alike, but their goals, practices and toolchains are worlds apart. Grasping this difference is critical for any organization facing the operational paradigm shift driven by AI.

AI for Ops: Infusing intelligence into IT

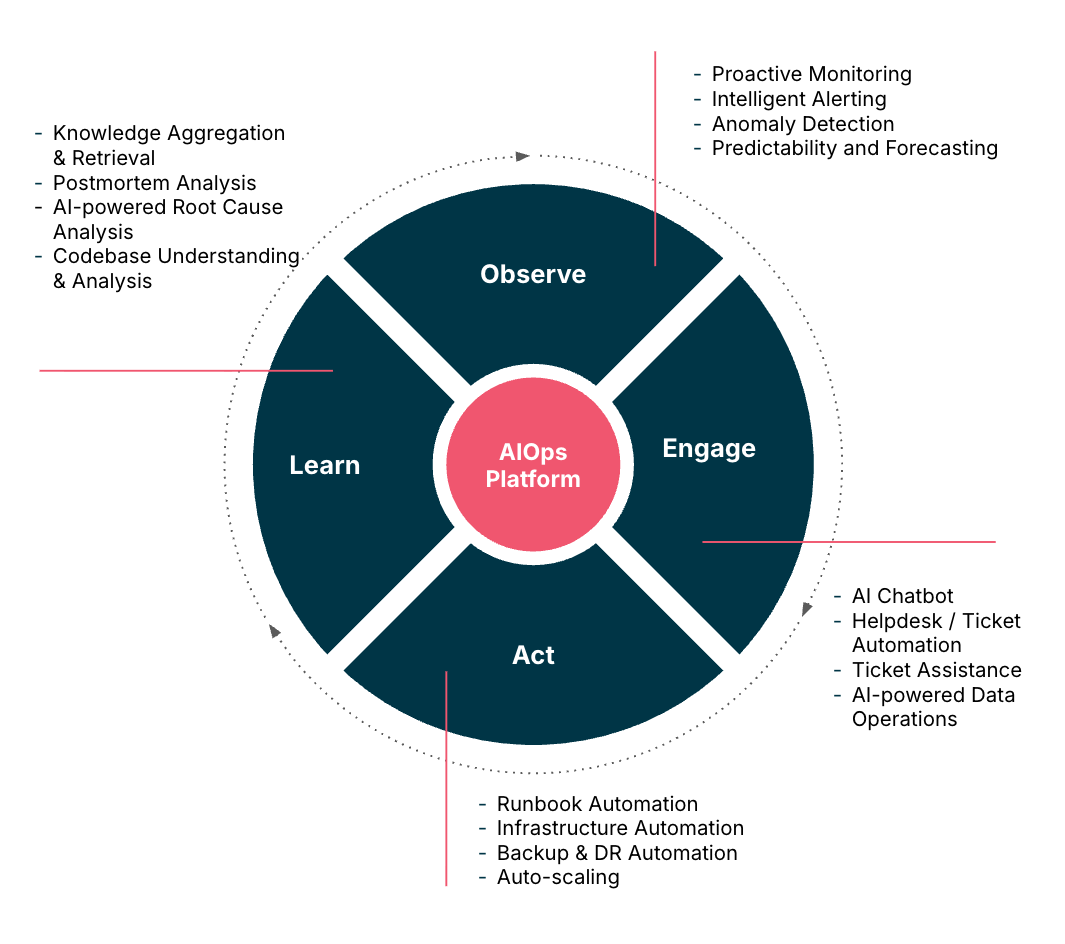

AI for Ops, or AIOps, is the use of AI to augment IT operations across applications, infrastructure and data. It combines deterministic and non-deterministic models to spot anomalies, correlate incidents, summarize tickets, review PRs and automate routine tasks such as runbooks or postmortems. It can even anticipate scaling needs in dynamic environments (Figure 1).

Figure 1. AIOps use cases
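To make the deterministic end of this spectrum concrete, here is a minimal sketch of metric anomaly detection using a rolling z-score. It is not drawn from any particular AIOps product: the function name `detect_anomalies`, the 20-sample window, the 3-sigma threshold and the latency data are all illustrative assumptions.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(values, window=20, threshold=3.0):
    """Return indices whose value is more than `threshold` standard
    deviations away from the mean of the preceding `window` values."""
    history = deque(maxlen=window)
    anomalies = []
    for i, value in enumerate(values):
        # Only score once the window is full, so the baseline is meaningful.
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            if sigma == 0:
                # A perfectly flat baseline: any change at all is unusual.
                if value != mu:
                    anomalies.append(i)
            elif abs(value - mu) / sigma > threshold:
                anomalies.append(i)
        # Every point, anomalous or not, becomes part of the next baseline.
        history.append(value)
    return anomalies


# Hypothetical p95 latency samples (ms): a steady pattern, then one spike.
latencies = [100, 102, 98, 101, 99] * 6 + [250, 100, 101]
print(detect_anomalies(latencies))  # prints [30]
```

Because the spike stays in the trailing window, it widens the baseline for the next 20 samples; excluding flagged points from `history` is a reasonable variation when incidents tend to cluster.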

The purpose is clear: cut manual work, accelerate resolution and free engineers to focus on higher-value problems. In this role, AI becomes a multiplier for operations, driving reliability, resilience and efficiency across the enterprise.

Ops for AI: Running AI as its own system

Ops for AI flips things. The question shifts from “How can AI improve IT?” to “How do we operate AI itself?” It means treating models, pipelines, agents and datasets as production-grade assets that demand disciplined management.

This scope spans factual accuracy, reliability, performance, cost control, model drift and risks such as hallucinations or data leakage. In short, Ops for AI means running AI workloads with the same rigor as any mission-critical system, but with practices tailored to AI’s distinct characteristics and failure modes.
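Model drift is one of the few items on this list that a simple statistic can quantify. The sketch below assumes drift is measured by comparing a baseline sample of a numeric signal (for example, model confidence scores) with a recent production sample using the Population Stability Index (PSI). The function name, the ten equal-width bins, the sample data and the 0.2 alert level (a commonly quoted rule of thumb) are assumptions, not a prescribed method.

```python
import math


def population_stability_index(baseline, current, bins=10):
    """PSI between two samples, using equal-width bins over the baseline range."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to width 1 for a constant baseline

    def proportions(sample):
        counts = [0] * bins
        for x in sample:
            # Values outside the baseline range are clamped into the edge bins.
            idx = min(max(int((x - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at a tiny proportion so the log term stays finite.
        return [max(c / len(sample), 1e-6) for c in counts]

    expected = proportions(baseline)
    actual = proportions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# Hypothetical confidence scores from a reference week vs. this week.
baseline_scores = [i / 100 for i in range(100)]
shifted_scores = [min(s + 0.3, 0.99) for s in baseline_scores]

DRIFT_THRESHOLD = 0.2  # common rule of thumb, an assumption here
print(population_stability_index(baseline_scores, baseline_scores))  # 0.0
print(population_stability_index(baseline_scores, shifted_scores) > DRIFT_THRESHOLD)  # True
```

PSI only flags that a distribution moved; deciding whether the move hurts accuracy still requires the evaluation practices described next.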

Different goals, scopes, skillsets and toolchains

This is where the real confusion often lies. Both practices are operational, but they serve different purposes. AI for Ops is about optimizing IT operations. Ops for AI is about making AI systems trustworthy, safe, reliable and cost-effective.

The scopes demand distinct skillsets. AI for Ops covers the core of enterprise IT operations and requires traditional tech ops expertise augmented with AI fluency. Picture an SRE who knows observability and incident response but can also use tools like Google Cloud’s Gemini Cloud Assist, applying prompt engineering techniques to drive more accurate root cause analysis.

Ops for AI, by contrast, requires deep grounding in AI evaluation. Engineers must design offline and online evals, prepare test data, trace AI behaviors and assess metrics that have no parallel in traditional IT. In practice, it looks like AI evaluation specialists who also understand IT operations.
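An offline eval can be as simple as running a model over a fixed, labeled test set and scoring each answer. This minimal sketch assumes the model is any Python callable from prompt to text and uses normalized exact match as the metric; `EvalCase`, `run_offline_eval` and `stub_model` are hypothetical names, and real evals typically add semantic or model-graded checks.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class EvalCase:
    prompt: str
    expected: str


def run_offline_eval(model: Callable[[str], str], cases: List[EvalCase]) -> dict:
    """Run `model` over a labeled test set and score it by normalized exact match."""
    failures = []
    for case in cases:
        output = model(case.prompt)
        # Ignore case and surrounding whitespace so formatting noise is not a failure.
        if output.strip().lower() != case.expected.strip().lower():
            failures.append((case, output))
    passed = len(cases) - len(failures)
    return {
        "pass_rate": passed / len(cases) if cases else 0.0,
        "failures": failures,
    }


# A stand-in for a real model endpoint; answers come from a fixed lookup table.
def stub_model(prompt: str) -> str:
    answers = {"Capital of France?": "Paris", "2 + 2?": "4"}
    return answers.get(prompt, "I don't know")


cases = [
    EvalCase("Capital of France?", "paris"),
    EvalCase("2 + 2?", "4"),
    EvalCase("Largest planet?", "Jupiter"),
]
report = run_offline_eval(stub_model, cases)
print(f"pass rate: {report['pass_rate']:.2f}")  # pass rate: 0.67
for case, output in report["failures"]:
    print(f"FAIL {case.prompt!r}: expected {case.expected!r}, got {output!r}")
```

Keeping the failing cases, not just the score, is what lets an engineer trace why the model regressed.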

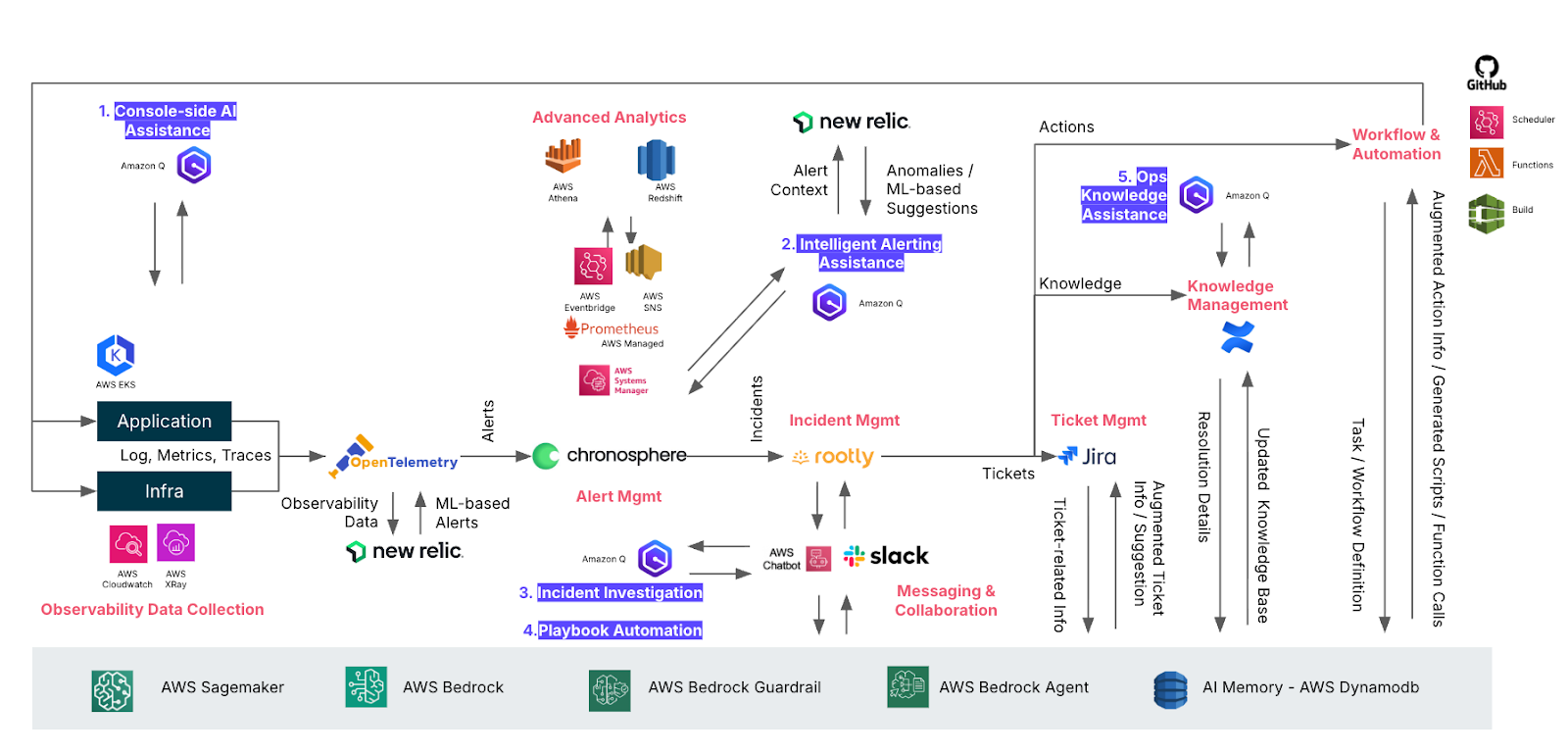

The toolchains diverge as well. AI for Ops leans on observability platforms, ITSM systems, incident response tooling, and cloud monitoring (Figure 2).

Figure 2. A typical shift-left SRE AIOps toolchain built on Amazon Bedrock.

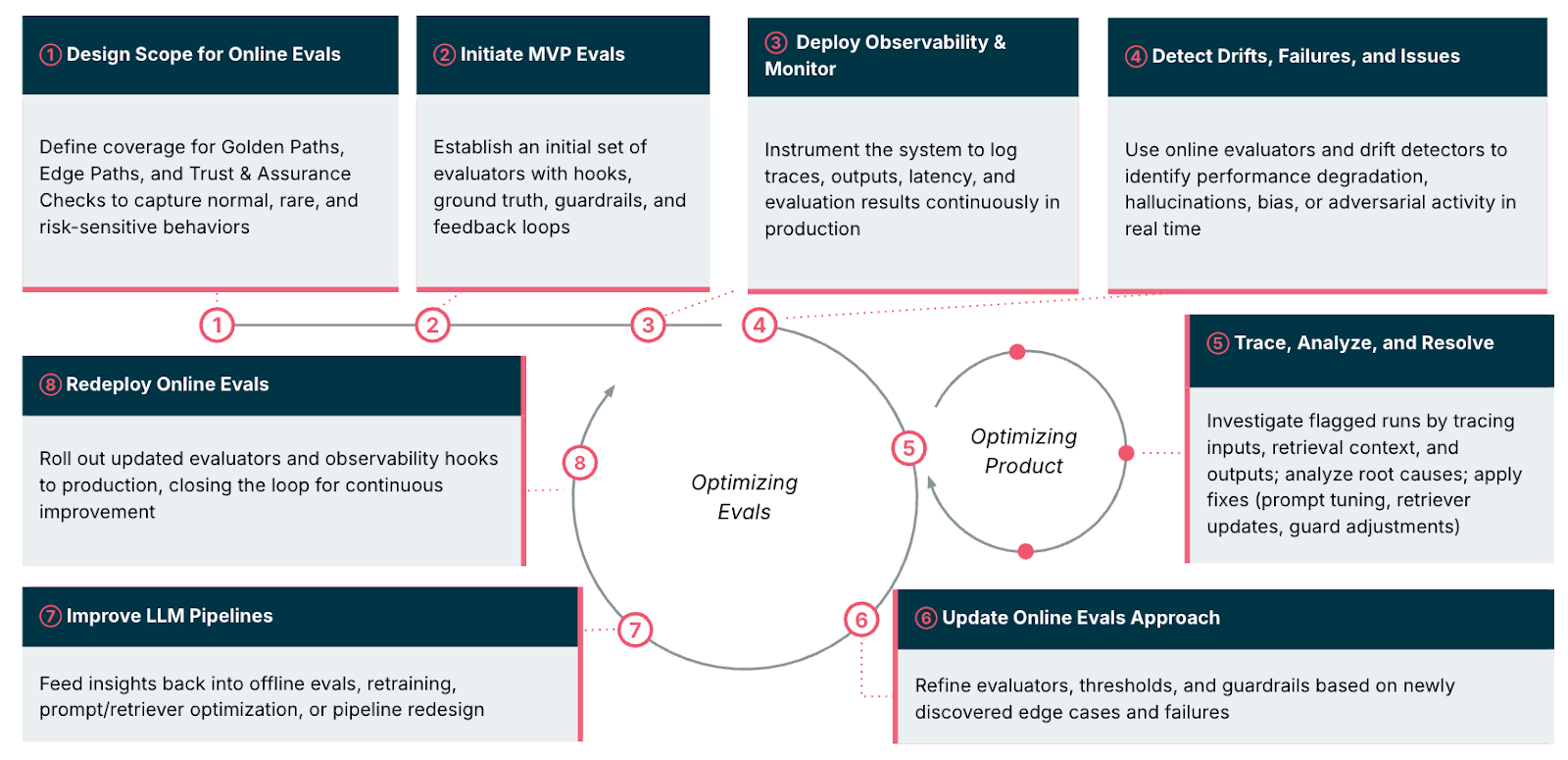

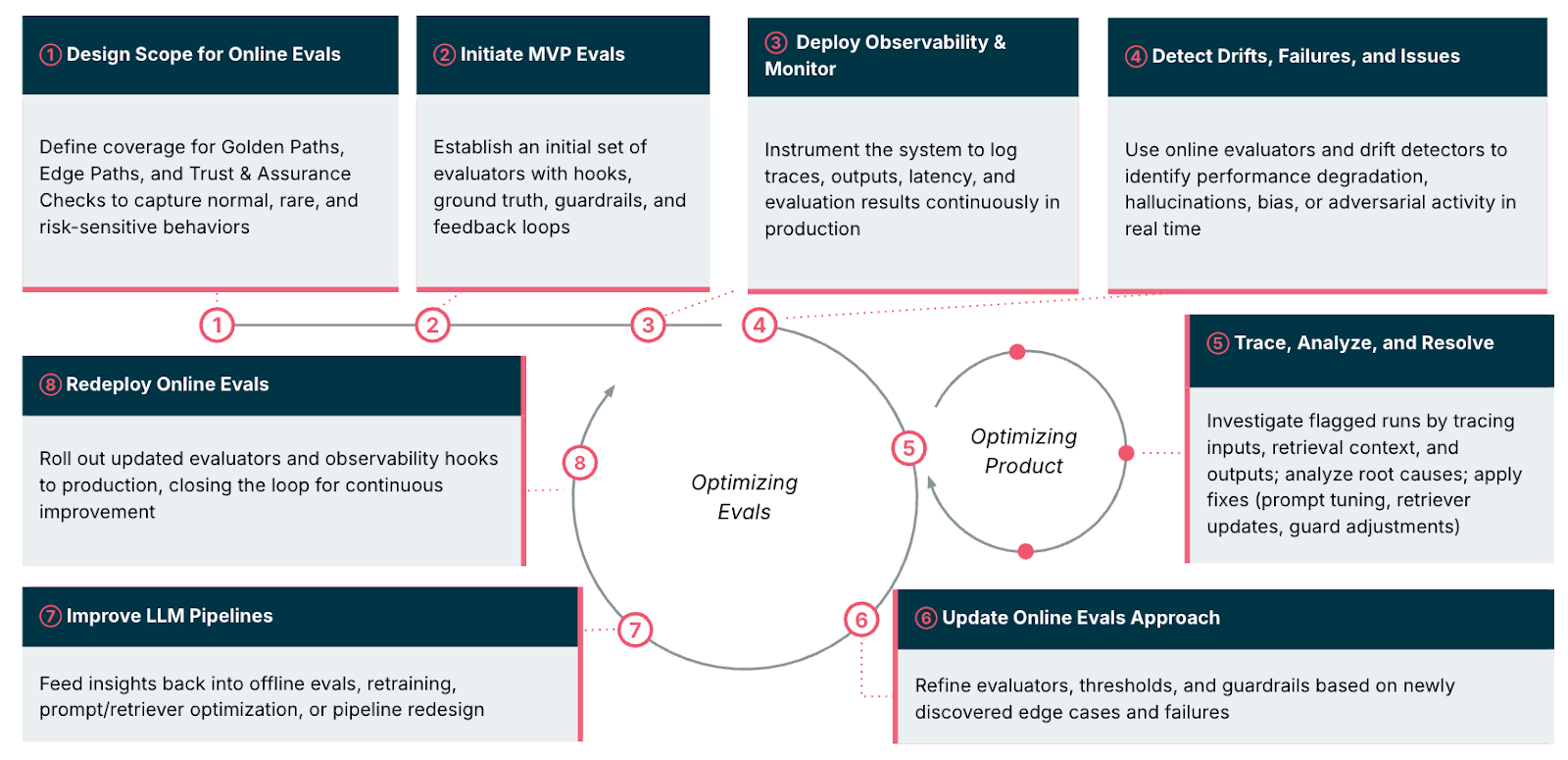

Ops for AI, on the other hand, relies on MLOps, LLMOps, evaluation pipelines (Figure 3), and AI observability stacks.

Figure 3. A representative online evaluation pipeline for monitoring and optimizing AI product performance
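An online pipeline differs mainly in that it scores a sample of live traffic continuously rather than a fixed test set. The sketch below assumes a per-response check function, a sampling rate to bound evaluation cost and a sliding-window pass rate that triggers an alert. The class `OnlineEvaluator`, the regex-based `no_email_leak` check and all thresholds are hypothetical and are not taken from Figure 3.

```python
import random
import re
from collections import deque

# Crude data-leakage heuristic (hypothetical): flag responses containing an email address.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")


def no_email_leak(prompt, response):
    return EMAIL_PATTERN.search(response) is None


class OnlineEvaluator:
    """Scores a random sample of live responses and tracks a sliding-window pass rate."""

    def __init__(self, check, sample_rate=0.1, window=100, min_pass_rate=0.9, seed=None):
        self.check = check
        self.sample_rate = sample_rate
        self.min_pass_rate = min_pass_rate
        self.results = deque(maxlen=window)  # only the most recent verdicts count
        self.rng = random.Random(seed)

    def observe(self, prompt, response):
        # Evaluate only a fraction of traffic to bound evaluation cost.
        if self.rng.random() < self.sample_rate:
            self.results.append(bool(self.check(prompt, response)))

    def pass_rate(self):
        return sum(self.results) / len(self.results) if self.results else None

    def should_alert(self):
        rate = self.pass_rate()
        return rate is not None and rate < self.min_pass_rate


# sample_rate=1.0 scores every response, which keeps this demo deterministic.
evaluator = OnlineEvaluator(no_email_leak, sample_rate=1.0, window=10)
for _ in range(10):
    evaluator.observe("status?", "Your ticket has been resolved.")
print(evaluator.pass_rate(), evaluator.should_alert())  # 1.0 False
for _ in range(5):
    evaluator.observe("who owns this?", "Contact alice@example.com for details.")
print(evaluator.pass_rate(), evaluator.should_alert())  # 0.5 True
```

A sliding window makes the alert reflect recent behavior rather than lifetime averages, which is what matters when a prompt or model change ships.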

Collapsing these two disciplines into a single definition erases the nuance — and risks applying the wrong playbook to the wrong problem.

The convergence ahead

The hype is real, but so is the trajectory. Within the next 12 months, AI applications and workloads will grow into a visible share of enterprise IT portfolios. That’s when the two scopes, AI for Ops and Ops for AI, will inevitably converge. Ops for AI will stop being a niche and become a core operational backbone inside AI for Ops, just as DevOps once erased the boundary between development and operations.

This convergence will demand new skills, new metrics and new governance models. Operations leaders must act early to prepare, because running IT and running AI are becoming inseparable. Those who hesitate risk gaps in reliability, cost control, and trust — exactly when AI is moving to the center of business.

When the ops paradigm shifts and scope expands, be prepared

The distinction is clear. AI for Ops means applying AI to make IT operations smarter and more efficient. Ops for AI means running AI itself as a reliable, trustworthy workload. Both are critical, but they are not interchangeable.

The future lies in convergence. As enterprises scale AI adoption, Ops for AI will fold into AI for Ops as a dedicated practice. The operational paradigm is shifting, and leaders who master both will define how enterprises run in the AI era.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.