In the first part of this series, we focused on ELK. This part focuses on EFK.

EFK

Deploy ElasticSearch

Use the same ElasticSearch deployment manifest as in the ELK setup.

Deploy Kibana

Use the same Kibana deployment manifest as in the ELK setup.

Deploy fluentd

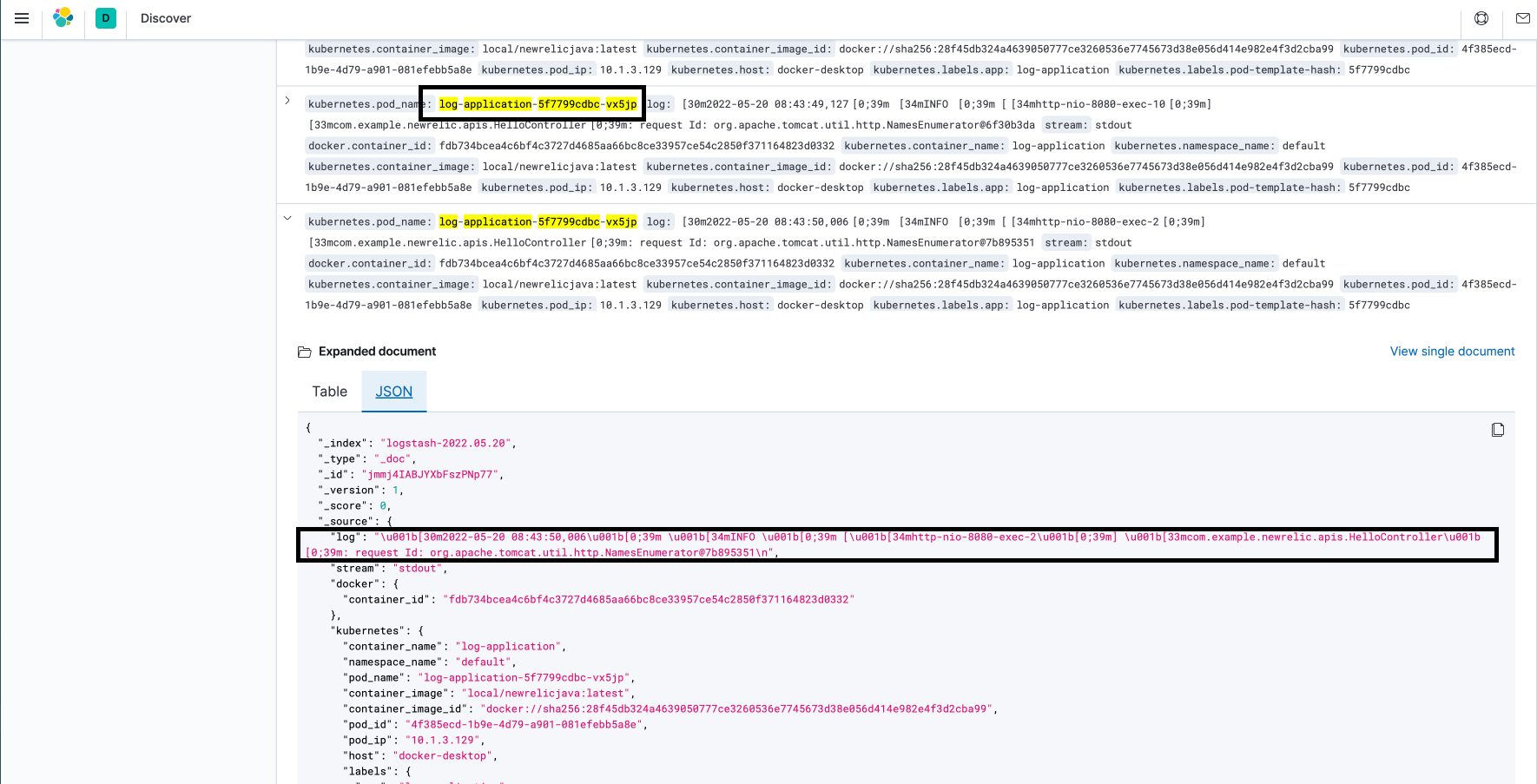

Here, we define a DaemonSet manifest to deploy fluentd, which runs a Pod on each node to collect the Pod logs and Docker container logs. If everything is set up correctly, you will see the following after entering the KQL query kubernetes.namespace_name : "default".

k8s container log

Here is how the official Kubernetes website describes the DaemonSet resource:

A DaemonSet ensures that all (or some) Nodes run a copy of a Pod. As nodes are added to the cluster, Pods are added to them. As nodes are removed from the cluster, those Pods are garbage collected. Deleting a DaemonSet will clean up the Pods it created.
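As a rough illustration of what such a DaemonSet can look like, here is a minimal sketch. It is not the author's manifest: the namespace, the `fluentd` ServiceAccount name, the `elasticsearch` Service host, and the image tag are all assumptions that you would adapt to your own cluster.

```yaml
# Minimal fluentd DaemonSet sketch (assumed names, not the article's manifest).
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd
  namespace: kube-system            # assumed namespace
  labels:
    app: fluentd
spec:
  selector:
    matchLabels:
      app: fluentd
  template:
    metadata:
      labels:
        app: fluentd
    spec:
      serviceAccountName: fluentd   # assumed ServiceAccount that can read Pod metadata
      containers:
        - name: fluentd
          # Assumed image and tag; pick one that matches your ElasticSearch version.
          image: fluent/fluentd-kubernetes-daemonset:v1-debian-elasticsearch
          env:
            - name: FLUENT_ELASTICSEARCH_HOST
              value: "elasticsearch"   # assumed Service name of ElasticSearch
            - name: FLUENT_ELASTICSEARCH_PORT
              value: "9200"
          volumeMounts:
            # Node log directory, where the kubelet exposes container logs.
            - name: varlog
              mountPath: /var/log
            # Docker's own container log files, mounted read-only.
            - name: dockercontainers
              mountPath: /var/lib/docker/containers
              readOnly: true
      volumes:
        - name: varlog
          hostPath:
            path: /var/log
        - name: dockercontainers
          hostPath:
            path: /var/lib/docker/containers
```

Because the Pod template is scheduled once per node, each fluentd Pod only needs to read the log files of its own node through the hostPath volumes.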

Deploy business service application

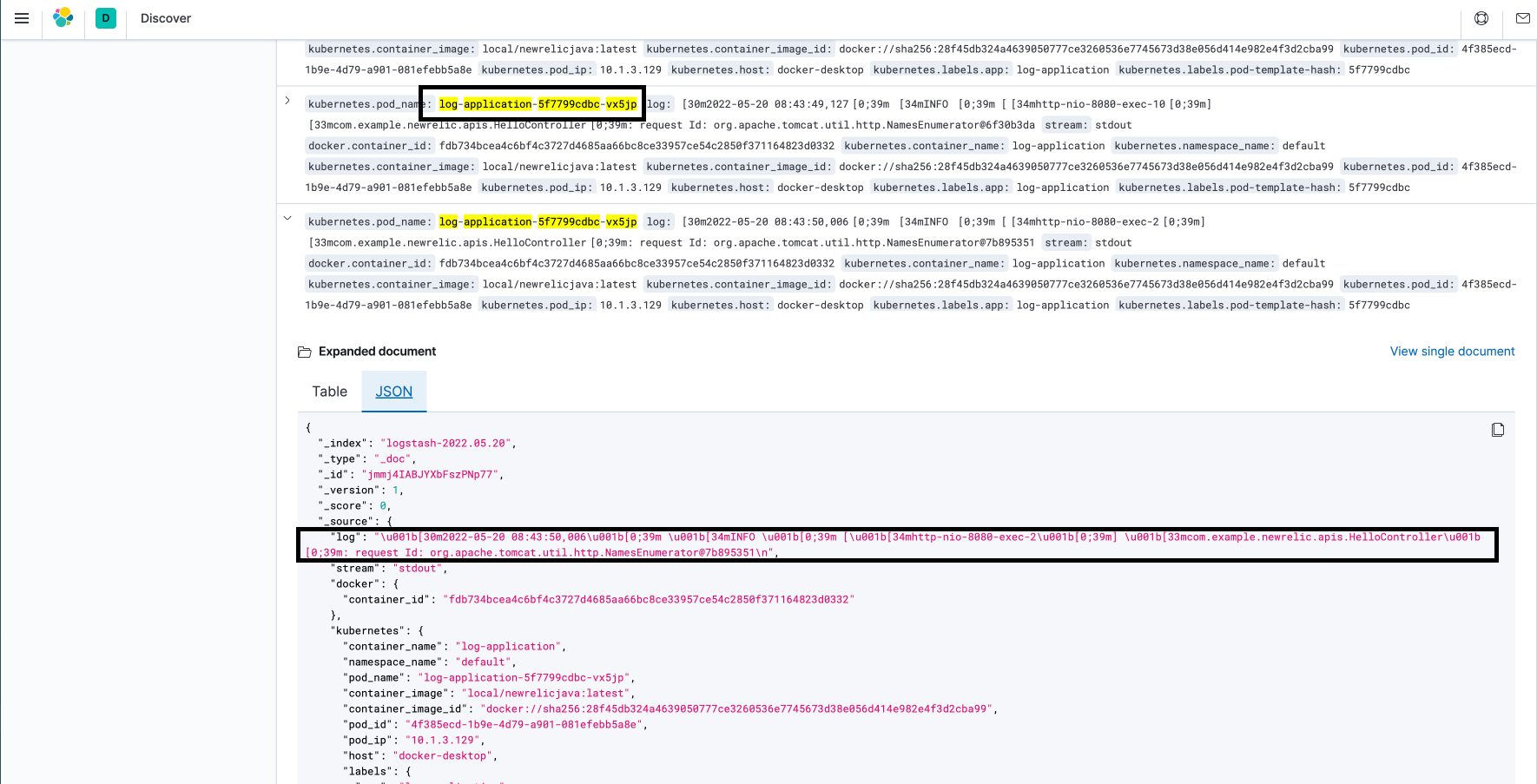

In our daily delivery, we always deploy our applications on k8s. Taking log-application.yaml as an example, the application uses slf4j.Logger to print logs to the console. When a request hits log-application, it writes its log to stdout, which fluentd scans and forwards to ElasticSearch. The result is shown in the following picture.

Successful query application for log information

Differences between ELK and EFK

Components

ELK is composed of ElasticSearch, Logstash, Filebeat and Kibana, while EFK only includes ElasticSearch, fluentd and Kibana.

Mechanisms

In the ELK framework, Logstash is the core component. It acts like a log transportation center that can reformat, filter and enrich logs. However, it is very memory-consuming, so we can't deploy a Logstash instance alongside every application that produces logs. Therefore Filebeat, a lightweight log collector, works better as the log agent that sends logs to Logstash.
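To make the Filebeat-to-Logstash hand-off concrete, a minimal filebeat.yml could look like the sketch below. The log path and the `logstash:5044` endpoint are assumptions (5044 is the port conventionally used by the Logstash Beats input), not values taken from this article's manifests.

```yaml
# filebeat.yml sketch: read container logs and ship them to Logstash.
filebeat.inputs:
  - type: container
    paths:
      - /var/log/containers/*.log   # assumed location of container logs on the node

output.logstash:
  hosts: ["logstash:5044"]          # assumed Logstash Service name and Beats port
```

Filebeat only tails and forwards here. Parsing, filtering and enrichment stay in the Logstash pipeline, which is why the agent can remain lightweight.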

In the EFK framework, fluentd is the core component. fluentd is deployed on the k8s cluster as a DaemonSet resource, which can collect node metrics and logs. It doesn't need another log transportation agent. As long as fluentd is granted sufficient permissions, it can collect container and node logs directly.
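One common way to grant those permissions is a ServiceAccount bound to a read-only ClusterRole, sketched below. The `fluentd` names and the `kube-system` namespace are assumptions, and the fluentd DaemonSet would have to reference the account through `serviceAccountName`.

```yaml
# RBAC sketch: lets fluentd read Pod and namespace metadata from the API server.
apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentd
  namespace: kube-system   # assumed namespace
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: fluentd
rules:
  - apiGroups: [""]
    resources: ["pods", "namespaces"]
    verbs: ["get", "list", "watch"]   # read-only access
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: fluentd
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: fluentd
subjects:
  - kind: ServiceAccount
    name: fluentd
    namespace: kube-system
```

These rules only cover API access, which is typically used to enrich log records with fields such as kubernetes.namespace_name. Reading the log files themselves depends on mounting the node's log directories into the fluentd Pod.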

Log agent performance

Logstash is written in JRuby and runs on the JVM.

fluentd is written in CRuby and consumes less memory than Logstash.

Configuration

logstash

You can add your own manifest to set up input and output rules, log format, etc.

fluentd

fluentd provides a browser-based UI for installing, uninstalling, and upgrading fluentd plugins.

Usage scenarios

ELK needs more memory. Therefore, logstash might not be suitable for low-memory machines. By contrast, EFK consumes less memory. In my opinion, this gives EFK a wider range of applications.

More information

For more information, you can take a look at the following references:

Conclusion

This article introduced two popular log frameworks, ELK and EFK, which come with several advantages:

Centralized logs: logs from Docker containers and cluster nodes are gathered in one place, which makes debugging easier.

Real-time: the log agents, Logstash and fluentd, send system logs to ES in real time. Because they continuously watch file content and listen on ports, we get real-time log feedback for troubleshooting online issues.

Visualization & analysis: KQL lets us query data and draw charts, which helps us with analysis.

Open source: both have active communities that can help with unknown issues.

You can find all the manifests on my GitHub.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.