Test Driven Development is the best thing that has happened to software design

In short, TDD shapes an idea into an implementation through a cyclical ‘fail-pass-refactor’ approach. This means that when writing software using TDD, we don’t know up front what the final production code will look like, what the classes and methods will be called, or even the implementation details. Our focus stays on the ‘what’, and the ‘how’ is postponed.

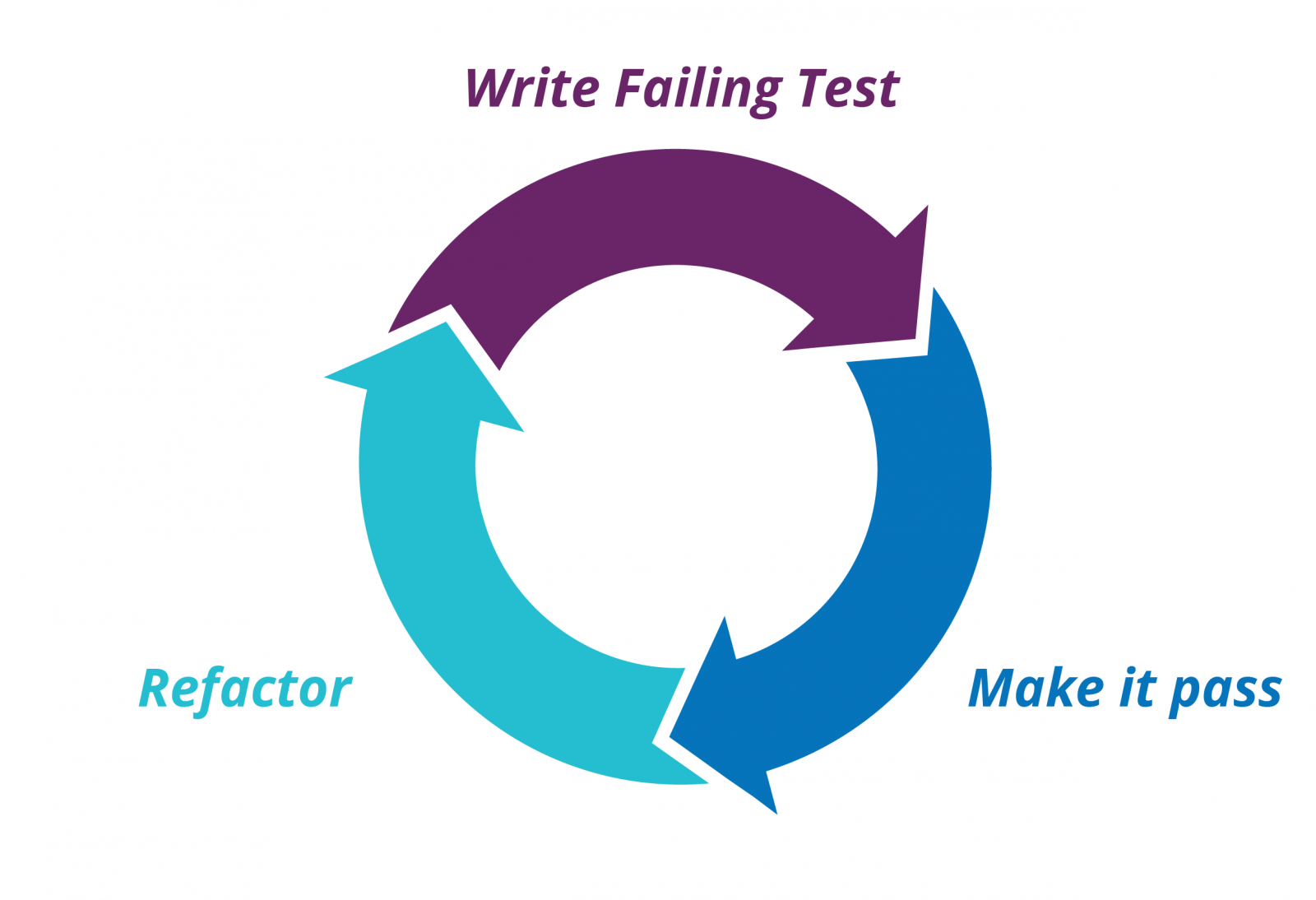

The cycles of TDD

TDD’s iterative approach allows software to evolve into a working, elegant solution, which fortunately doesn’t take millions of years like natural evolution! Each ‘purple’ (fail) - ‘blue’ (pass) - ‘refactor’ cycle (pictured above) gets us closer to what we need. Simply put: in the purple phase, we write a failing test; in the blue phase, we write the minimum code to pass that test; and in the refactor phase, we clean up the implementation while keeping the test passing.
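To make the cycle concrete, here is a minimal sketch of where a single cycle might end up. The `SortFunction` and `SortFunctionTest` classes are hypothetical, and plain Java checks stand in for a test framework so the example runs on its own:

```java
import java.util.Arrays;

// Purple: the test is written first. Until SortFunction.sort exists it does not
// even compile, which counts as a failing test.
class SortFunctionTest {
    static void shouldReturnArrayInAscendingOrder() {
        int[] result = SortFunction.sort(new int[] {3, 1, 2});
        if (!Arrays.equals(result, new int[] {1, 2, 3})) {
            throw new AssertionError("Expected [1, 2, 3] but got " + Arrays.toString(result));
        }
    }
}

// Blue: just enough code to make the test pass.
// Refactor: with the test green we can tidy up safely; here the method sorts
// a copy, so the caller's array is left unchanged.
class SortFunction {
    static int[] sort(int[] input) {
        int[] sorted = Arrays.copyOf(input, input.length);
        Arrays.sort(sorted);
        return sorted;
    }
}
```

The next requirement (say, how duplicates or an empty array should be handled) would start a new cycle with another failing test.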

You may be asking what this has to do with good software design. To answer this, imagine you want to add a feature to your solution. You may write the code first and then a test, or the other way round: write a test first and then the code. At first glance, there seems to be no difference between the two approaches, but they instill very different mindsets in the developer and lead to very different attitudes to quality.

1. When tests are driven by code

You've written code, and now it’s time to test it. Maybe you test it method by method, covering some corner cases, happy-path scenarios or failure scenarios. Is it enough? Possibly, because your production code is working. You have just completed the implementation, and it passes all of your tests. You can mark it as done.

Great job! However, it may not be the right approach, as it gives you little feedback about the implementation and design. In my view, the most challenging part of skipping the test-first approach is answering the following questions:

- When should I stop adding new tests?

- Am I sure the implementation is finished?

2. When code is driven by tests

In this approach, we start by defining the 'what'. The benefits of the test-first approach are numerous. Firstly, tests verify behaviour, not implementation details. Secondly, your tests become living, always up-to-date documentation: if you have any questions about how a unit, a module or a component works, or how it is designed, start from the tests. Thirdly, writing the test before the actual implementation forces you to ask the question: what do I expect from the code?

The requirements may be something like:

- “Any array passed to the sort function should be returned as ascending”, or

- “Elements added to the cache should not be available after eviction”, or even

- “Admin should be able to block a user so that they will not be able to log in.”

You should know the expected outcome of each of these tests before the feature is implemented.
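As an illustration, the cache requirement above could be captured as a test before any cache exists. This sketch assumes a hypothetical `SimpleCache` with `put`, `get` and `evict` methods (our names, not a real library's API) and uses plain Java checks rather than a test framework:

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Written first: states *what* the cache must do, not *how*.
class SimpleCacheTest {
    static void elementShouldNotBeAvailableAfterEviction() {
        SimpleCache<String, String> cache = new SimpleCache<>();
        cache.put("user-42", "Alice");

        cache.evict("user-42");

        if (cache.get("user-42").isPresent()) {
            throw new AssertionError("Evicted element is still available");
        }
    }
}

// Written second: the simplest implementation that satisfies the test.
class SimpleCache<K, V> {
    private final Map<K, V> entries = new HashMap<>();

    void put(K key, V value) {
        entries.put(key, value);
    }

    Optional<V> get(K key) {
        return Optional.ofNullable(entries.get(key));
    }

    void evict(K key) {
        entries.remove(key);
    }
}
```

The test says nothing about capacity or expiry, so neither is implemented yet; each would arrive with its own failing test.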

Additionally, when code is driven by tests, you know when to stop. TDD is really helpful here, because every new requirement is introduced by adding a new failing test; when there are no requirements left to express as failing tests, the implementation is done. The evolution of your implementation is also more natural.

Using TDD for fast feedback

I've described two of the key reasons to practise TDD, but perhaps the most important is its ability to provide fast feedback about software design. Let's look at a scenario where we add some new functionality to an existing code base. Say you want to add a new test, but realise that something is not right: perhaps the requirement is difficult to test. Is something wrong with the feature, or perhaps with the design of the system under test? We can’t be sure.

When a feature is hard to test, the cause is almost always poor design, and that same design makes the code difficult to extend, refactor, or even understand for whoever works on it in the future.

How can we use TDD on code that is impossible to test?

Let's look at some symptoms of code that is impossible to test, the fast feedback they give us, and how TDD can help requirements meet implementation.

1. Spying or Mocking

What are the symptoms of code that is impossible to test? Whichever mechanism you use, spying or mocking [3], there is no way to write a proper test. This tends to happen when code is written first and writing tests is postponed to the distant future.

For example, consider the following snippet, which is responsible for sending a daily newsletter:

```java
class NewsletterNotifier {

    ...

    void sendDailyNewsletter() {
        LocalDateTime today = LocalDateTime.now();
        NewsLetter newsletter = newsletterRepository.findByDate(today);
        List ...
    }
}
```

The main problem in the example above is the creation of the EmailEngine object using the new operator. You cannot inject it and change behaviour in the tests. Assuming that EmailEngine calls external services with side effects, there is no safe way to write a unit test that verifies the behaviour of NewsletterNotifier as-is.
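One way out, sketched here under our own assumptions, is to pass the `EmailEngine` in through the constructor instead of creating it with `new`. The `EmailEngine` and `UserRepository` interfaces, their method signatures and the `RecordingEmailEngine` test double are hypothetical simplifications, not the article's actual types:

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical abstraction over the side effect of sending an email.
interface EmailEngine {
    void send(String recipient, String content);
}

// Hypothetical source of subscribers' email addresses.
interface UserRepository {
    List<String> findSubscriberEmails();
}

class NewsletterNotifier {
    private final UserRepository userRepository;
    private final EmailEngine emailEngine;

    // The engine is injected, so production code can pass a real SMTP-backed
    // engine while tests pass a harmless double.
    NewsletterNotifier(UserRepository userRepository, EmailEngine emailEngine) {
        this.userRepository = userRepository;
        this.emailEngine = emailEngine;
    }

    void sendNewsletter(String content) {
        for (String recipient : userRepository.findSubscriberEmails()) {
            emailEngine.send(recipient, content);
        }
    }
}

// Test double that records recipients instead of calling external services.
class RecordingEmailEngine implements EmailEngine {
    final List<String> recipients = new ArrayList<>();

    @Override
    public void send(String recipient, String content) {
        recipients.add(recipient);
    }
}
```

A test can now construct the notifier with a lambda as the repository and a `RecordingEmailEngine`, then check `recipients`, without touching an SMTP server.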

2. Variable Test Data

Another challenge in the previous example is the use of the static method LocalDateTime.now(). Because the test data depends on the current time, the test is not repeatable and may fail because of external factors. For example, a test may always fail on a Friday.

This can be fixed by extracting time into an injected object, such as java.time.Clock. In production code you might inject a clock based on UTC, Clock.systemUTC(); in test code, a clock that always returns the same instant, Clock.fixed(instant, zone).

```java
class NewsletterNotifier {

    private final Clock clock;

    void sendDailyNewsletter() {
        LocalDateTime today = LocalDateTime.now(clock);
        ...
    }
}
```
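Here is a minimal runnable sketch of the idea using only `java.time`. The `NewsletterRepository` interface with a `findTitleByDate` method is a hypothetical simplification of the article's repository; `Clock.fixed` and `LocalDate.now(Clock)` are standard JDK methods:

```java
import java.time.Clock;
import java.time.LocalDate;

// Hypothetical, simplified repository: returns the newsletter title for a date.
interface NewsletterRepository {
    String findTitleByDate(LocalDate date);
}

class NewsletterNotifier {
    private final NewsletterRepository newsletterRepository;
    private final Clock clock;

    // Production code passes Clock.systemUTC(); tests pass Clock.fixed(...).
    NewsletterNotifier(NewsletterRepository newsletterRepository, Clock clock) {
        this.newsletterRepository = newsletterRepository;
        this.clock = clock;
    }

    String todaysNewsletterTitle() {
        // "Today" comes from the injected clock, not from the system time.
        LocalDate today = LocalDate.now(clock);
        return newsletterRepository.findTitleByDate(today);
    }
}
```

With a clock pinned to 2024-01-05T08:00:00Z (a Friday) in UTC, `todaysNewsletterTitle()` looks up the same date on every run, whatever day the test actually runs on.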

3. Bloated Setup

The third symptom of difficult testing is bloated setup: the test preparation phase is too long and complicated. Bloated setup implies that an object either has too many dependencies or breaks the Single Responsibility Principle [4]. Both cases suggest rethinking your design.

```java
class NewsletterNotifier {

    ...

    public NewsletterNotifier(NewsletterRepository newsletterRepository,
                              UserRepository userRepository,
                              SmtpConfig smtpConfig,
                              EmailEngineFactory emailEngineFactory) throws CertificateException {
        Certificate cert = null;
        if (isProduction()) {
            cert = CertificateFactory
                    .getInstance("X.509")
                    .generateCertificate(getClass().getResourceAsStream("email.crt"));
        }
        this.emailEngine = emailEngineFactory.create(smtpConfig, cert);
        this.userRepository = userRepository;
        ...
    }

    ...
}
```

How about the snippet above, responsible for encrypting messages? Every test case requires at least two setup steps: one for NewsletterNotifier, and one for the test itself. Moreover, instantiating NewsletterNotifier is not straightforward: you need to take care of the environment, the certificate and, finally, the EmailEngine created by a factory. Wouldn’t extracting the creation of EmailEngine into a separate component, and passing it to NewsletterNotifier as a dependency, simplify the test setup?

```java
// used in production
class SecuredEmailEngineFactory {

    private final EmailEngineFactory emailEngineFactory;

    ...

    public EmailEngine create(SmtpConfig smtpConfig, String certName) throws CertificateException {
        Certificate cert = CertificateFactory
                .getInstance("X.509")
                .generateCertificate(getClass().getResourceAsStream(certName));
        return emailEngineFactory.create(smtpConfig, cert);
    }
}

class NewsletterNotifier {

    public NewsletterNotifier(NewsletterRepository newsletterRepository,
                              UserRepository userRepository,
                              EmailEngine emailEngine) {
        ...
    }
}
```

4. Mocking Hell

This is another red flag. How many times have you seen a mock returning a mock that returns a mock that ... (ad infinitum)? The main cause of Mocking Hell is breaking the Law of Demeter [5], a sign that information is leaking from one component to another. A small change then causes a butterfly effect across both the production code and the corresponding tests.

This may lead you to ask:

- Have I changed a method signature and now need to update 101 places in my code?

- Have I introduced a small change in the business logic, only for a test belonging to an unrelated object to start failing?

- Is the design clean, and are the components loosely coupled?

```java
class NewsletterNotifier {

    private final Clock clock;

    boolean canSendDailyNewsletter(String userName) {
        return userRepo
                .findActiveUser(userName).newsletterPreferences()
                .isWeekDay(LocalDateTime.now(clock).getDayOfWeek());
    }
}
```

The above example illustrates a changed requirement for the email subscription class. There are two types of newsletters: weekday and weekend. Users are able to choose when they want to receive it.

If you are using a mocking library, it is tempting to mock each call in the chain, method by method:

- findActiveUser(),

- newsletterPreferences(),

- isWeekDay().

You will end up with a test that is tightly coupled to the implementation, where even the order of the method calls mirrors the production code. How about the following version of the method instead?

```java
boolean canSendDailyNewsletter(String userName) {
    return userRepo.isEligibleForNewsletter(userName, LocalDateTime.now(clock).getDayOfWeek());
}
```

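Below are two versions of the same test: the first targets the original, chained implementation of canSendDailyNewsletter, and the second targets the version above: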

```java
// Version 1: tests the chained implementation
@Test
public void shouldVerifyIfUserHasSubscriptionInSelectedDay() {
    String userName = UUID.randomUUID().toString();
    User user = Mockito.mock(User.class);
    NewsletterPreferences newsletterPreferences = Mockito.mock(NewsletterPreferences.class);
    when(user.newsletterPreferences()).thenReturn(newsletterPreferences);
    when(newsletterPreferences.isWeekDay(MONDAY)).thenReturn(true);
    when(userRepo.findActiveUser(userName)).thenReturn(user);
    // a fixed clock set to a Monday
    NewsletterNotifier newsletterNotifier = new NewsletterNotifier(userRepo, Clock.fixed(...));

    boolean hasMondaySubscription = newsletterNotifier.canSendDailyNewsletter(userName);

    assertThat(hasMondaySubscription).isTrue();
}
```

```java
// Version 2: tests the encapsulated implementation
@Test
public void shouldVerifyIfUserHasSubscriptionInSelectedDay() {
    String userName = UUID.randomUUID().toString();
    when(userRepo.isEligibleForNewsletter(userName, MONDAY)).thenReturn(true);
    // a fixed clock set to a Monday
    NewsletterNotifier newsletterNotifier = new NewsletterNotifier(userRepo, Clock.fixed(...));

    boolean hasMondaySubscription = newsletterNotifier.canSendDailyNewsletter(userName);

    assertThat(hasMondaySubscription).isTrue();
}
```

You may have noticed that there is actually a bug in the first version of canSendDailyNewsletter: the repository returns a null reference when the user cannot be found, so the chained call throws a NullPointerException. An improved version of userRepo could return an Optional instead. This sounds good, but now you need to change the first test as well, and the same applies to any change related to email subscription behaviour. The second version of the method does not have this problem because it encapsulates the daily newsletter subscription logic.
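A sketch of what the encapsulated version might look like, assuming an in-memory repository whose `findActiveUser` returns `Optional<User>`. `User`, `isSubscribedOn` and `InMemoryUserRepository` are hypothetical names for illustration; only `findActiveUser` and `isEligibleForNewsletter` come from the examples above:

```java
import java.time.DayOfWeek;
import java.util.EnumSet;
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;
import java.util.Set;

// Hypothetical user holding the days on which they want the newsletter.
class User {
    private final Set<DayOfWeek> subscriptionDays = EnumSet.noneOf(DayOfWeek.class);

    User(Set<DayOfWeek> days) {
        subscriptionDays.addAll(days);
    }

    boolean isSubscribedOn(DayOfWeek day) {
        return subscriptionDays.contains(day);
    }
}

class InMemoryUserRepository {
    private final Map<String, User> activeUsers = new HashMap<>();

    void save(String userName, User user) {
        activeUsers.put(userName, user);
    }

    // Returns Optional.empty() instead of null when the user is unknown.
    Optional<User> findActiveUser(String userName) {
        return Optional.ofNullable(activeUsers.get(userName));
    }

    // The whole eligibility rule lives here, so callers (and their tests)
    // never navigate User -> preferences -> day themselves.
    boolean isEligibleForNewsletter(String userName, DayOfWeek day) {
        return findActiveUser(userName)
                .map(user -> user.isSubscribedOn(day))
                .orElse(false);
    }
}
```

Because NewsletterNotifier only calls `isEligibleForNewsletter`, switching the repository from null to Optional changes this class alone; an unknown user simply yields `false`.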

Why trying to predict future scenarios creates unnecessary features

How many times have you seen code that has been written 'just in case'? We're no good at predicting the future, and that's why this approach doesn't work. So how does this apply to TDD? Do we add unnecessary code when moving between TDD cycles? We shouldn't, because the test-first approach does not allow you to modify production code without first adding a failing test. If nothing is said about a feature, is there any reason to add a test for it? TDD helps us follow the You Ain’t Gonna Need It (YAGNI) rule [6], which I believe is another step towards simple design.

Why TDD is the path to better software design

We use TDD to help requirements meet implementation. For me, it also works as a technique to gather feedback about software design – I’d even go as far as saying that TDD could stand for Test Driven Design. In short, start listening to your tests – they give you fast feedback, even before the first line of production code has been written.

If it's something you’d like to know more about, I recommend reading Kent Beck’s Test Driven Development By Example [1], or Growing Object-Oriented Software, Guided by Tests [2] by Steve Freeman and Nat Pryce.

References

[1] Test Driven Development By Example, Kent Beck

[2] Growing Object-Oriented Software, Guided by Tests, Steve Freeman, Nat Pryce

[3] Test double, Martin Fowler

[4] Clean Code, Chapter 10: Classes, The Single Responsibility Principle, Robert C. Martin

[5] Growing Object-Oriented Software, Guided by Tests, Chapter 2, Tell, Don’t Ask, Steve Freeman, Nat Pryce

[6] Yagni, Martin Fowler

Acknowledgements: Thanks to Raeger Tay, Stephan Dowding and Jesstern Rays for valuable feedback on early drafts, and Willem van Ketwich for editorial support.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.