Service Testing for Enterprise Applications



Business Needs

Today's business demands push IT organizations to evolve their IT solutions over time while avoiding costly integration and re-engineering efforts. SOA enables this by using services, rather than technology, as the basis of applications. However, SOA-based development introduces new challenges in testing the applications built on it. This raises the following questions:

- How do we validate services for functional requirements?

- How do we manage the complexity of testing hundreds of services?

- How do shared services react across multiple applications as services change and evolve?

- How do we enforce governance-based testing?

- How do we minimize testing effort during regression testing in agile projects?

New Approach To Testing

SOA is a paradigm for building reusable services that are built once, reused many times, and accessed through well-defined, platform-independent interfaces. Testing such services differs from traditional application testing in several ways:

| Traditional application testing | SOA-based testing |
|---|---|
| Most of the testing effort goes into UI-based testing. | SOA services are essentially headless: they have no user interface. |
| Applications are developed and maintained by a single "unified" group, within a single application boundary. | Services are developed and maintained by different application groups, and the application's scope spans multiple vendor boundaries. |
| Systems are readily available and running for testing. | Third-party systems may or may not be available, and a system may be down during testing. |
| Testing consists mainly of functional and performance testing. | Testing must go beyond functional and performance testing to include component testing, service testing, service-integration testing, etc. |
| Automation works by invoking the properties and methods of UI objects to perform test actions. | Automation works by validating service interfaces across boundaries, covering individual service, service-integration, system and workflow testing. |
| A change in requirements affects only the internal systems within the product or application boundary, so validation in the testing phase is limited to the specific application or module. | A change in requirements affects multiple services across different vendor application boundaries, so the test cases for the complete end-to-end business process must be validated. |

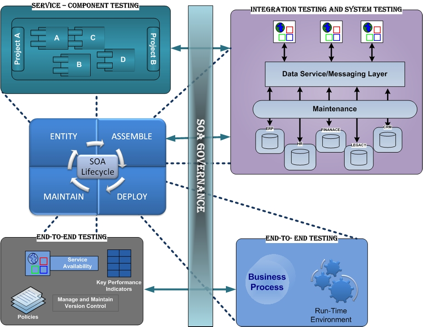

Service-Integration-System-End-to-End (SISE) Approach

Service-Component Level Testing (ENTITY in SOA Cycle)

This stage of testing focuses on individual service components. These components are lightweight implementations of system services, which results in short execution times.

For an individual service, test scripts are created for each web method, based on XPath assertions and input conditions. For example, consider a web service that provides financial and company information through web methods such as CompanySearch, Getfullprofile, Getfullprofilebyaccessionnumber and Getserviceinfo.

All of these web methods are tested using the “Good-Known data” technique: each web method is called with known input, and its output is validated against a known expected result, that is, against a known reference data set.

This approach ensures that the individual web methods and services, the smallest pieces of the product, function properly.
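A minimal sketch of a “Good-Known data” test with XPath assertions, assuming the web method returns XML. `company_search` is a hypothetical stand-in for the CompanySearch method (a real script would call the deployed service), and the reference set and element names are invented for illustration. It uses Python's `xml.etree.ElementTree`, which supports only a subset of XPath; a full XPath engine would be needed for richer assertions.

```python
import xml.etree.ElementTree as ET

def company_search(ticker: str) -> str:
    """Hypothetical stand-in for the CompanySearch web method's XML response."""
    companies = {"ACME": ("Acme Corp", "NYSE")}
    name, exchange = companies[ticker]
    return (
        f"<CompanySearchResult><Company ticker='{ticker}'>"
        f"<Name>{name}</Name><Exchange>{exchange}</Exchange>"
        "</Company></CompanySearchResult>"
    )

# Known good reference set: input -> {XPath assertion: expected text}.
KNOWN_GOOD = {
    "ACME": {
        "./Company[@ticker='ACME']/Name": "Acme Corp",
        "./Company[@ticker='ACME']/Exchange": "NYSE",
    },
}

def run_known_good(web_method, reference):
    """Call the web method for each known input and check every XPath assertion.

    Returns (input, xpath, expected, actual) for each failed assertion.
    """
    failures = []
    for test_input, assertions in reference.items():
        root = ET.fromstring(web_method(test_input))
        for xpath, expected in assertions.items():
            actual = root.findtext(xpath)  # None if the path matches nothing
            if actual != expected:
                failures.append((test_input, xpath, expected, actual))
    return failures

print(run_known_good(company_search, KNOWN_GOOD))  # []
```

Keeping the reference set as plain data means new known-good cases can be added without touching the test logic.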

Integration Testing (ASSEMBLE in SOA Cycle)

The next level of testing covers the integration of the system's components and services. It aims to validate that messages are communicated correctly between services.

For example, consider the following scenario: to calculate a foreign currency rate, Bank A's web service, developed in .NET, communicates with a foreign exchange (forex) service developed in Java. Here, services built on different technical platforms communicate with each other, so both must use a standard messaging format that each of them understands.

During this stage, test scripts are written to validate the messaging format, the communication policies between services, the level of data exposed between services, security policies, governance policies, etc. Testing again uses the “Good-Known data” technique: a reference set of known good input and output data, against which the services are validated to return the predetermined output.

Thus the second stage ensures that different types of services across multiple platforms integrate and communicate with each other seamlessly.
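As a sketch of a message-format check between the two services, the code below validates a captured request against an agreed contract: it must be a SOAP 1.1 envelope, carry the expected operation, include every required field, and expose no extra data. The `urn:example:forex` namespace, the `GetRate` operation and its field names are hypothetical; only the SOAP 1.1 envelope namespace is a real, standard value.

```python
import xml.etree.ElementTree as ET

SOAP_ENV = "http://schemas.xmlsoap.org/soap/envelope/"  # SOAP 1.1 envelope namespace
FX_NS = "urn:example:forex"  # hypothetical namespace of the forex service contract
REQUIRED_FIELDS = {"FromCurrency", "ToCurrency", "Amount"}
ALLOWED_FIELDS = REQUIRED_FIELDS  # anything else is data that should not be exposed

def check_rate_request(message: str) -> list:
    """Return the contract violations found in a GetRate request message."""
    root = ET.fromstring(message)
    if root.tag != f"{{{SOAP_ENV}}}Envelope":
        return [f"not a SOAP 1.1 envelope: {root.tag}"]
    request = root.find(f"{{{SOAP_ENV}}}Body/{{{FX_NS}}}GetRate")
    if request is None:
        return ["Body does not contain a GetRate request"]
    fields = {child.tag.replace(f"{{{FX_NS}}}", "") for child in request}
    errors = [f"missing field: {f}" for f in sorted(REQUIRED_FIELDS - fields)]
    errors += [f"unexpected field exposed: {f}" for f in sorted(fields - ALLOWED_FIELDS)]
    return errors

# A request as Bank A's .NET service might send it; it leaks an account number.
sample = f"""<soap:Envelope xmlns:soap="{SOAP_ENV}" xmlns:fx="{FX_NS}">
  <soap:Body>
    <fx:GetRate>
      <fx:FromCurrency>USD</fx:FromCurrency>
      <fx:ToCurrency>EUR</fx:ToCurrency>
      <fx:Amount>100</fx:Amount>
      <fx:AccountNumber>12345</fx:AccountNumber>
    </fx:GetRate>
  </soap:Body>
</soap:Envelope>"""

print(check_rate_request(sample))  # ['unexpected field exposed: AccountNumber']
```

Because the check works on the wire message, it does not care whether the sender was written in .NET or Java.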

System Testing (ASSEMBLE in SOA cycle)

The previous two stages focused on testing interfaces, components, services and their interactions, but did not consider the operational environment of the services. System tests close this gap by taking into account the deployment environment, the target platform and the external systems the application depends on.

System tests exercise the processing tasks within this complex distributed environment. At this stage, the input data for the different services is populated from a replica of production (the test environment). For example, consider a cash withdrawal: the user enters the correct PIN and the amount to withdraw; the machine communicates with the bank to verify the available balance, and the system also checks whether the machine holds enough cash. In this scenario, services communicate with different systems, databases and business services (e.g. BizTalk Server). Test scripts are developed to validate how the services integrate and communicate with these systems and services: proper messaging format, communication policies between services, the level of data exposed between services, security policies, governance policies, etc. The test scripts created during the previous two stages are also executed, with their input data now populated from the original systems.

Centralized mock services are also deployed to manage asynchronous communication. For example, the authorization server may take a long time to respond because of a large queue of requests. In this case, mock services handle the asynchronous communication, waiting for the response from the authorization server.

On top of the mock services, message checkpoints are deployed to route each message to the appropriate service. For example, suppose a mock service handles request A from service A, request B from service B and request C from service C. When the mock service receives a response, it must deliver it to the service that sent the corresponding request; the message checkpoints take care of this. The response to service A's request is directed to service A, the response to service B's request returns to service B, and so on.

Performance monitors are deployed to collect the response times between components and services. This helps the project team identify known limitations and areas for re-engineering in the architecture and solution, and recommend appropriate hardware.
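A minimal asyncio sketch of such a mock, assuming the calling service only needs an immediate acknowledgement and can collect the authorization result later. `slow_authorization_server` and its approval rule are hypothetical; the queueing delay is simulated with `asyncio.sleep`.

```python
import asyncio

async def slow_authorization_server(request_id: str, amount: int) -> dict:
    """Hypothetical stand-in for an authorization server with a long request queue."""
    await asyncio.sleep(0.1)  # simulated queueing delay
    return {"request_id": request_id, "approved": amount <= 500}

class AsyncMockService:
    """Acknowledges requests at once and awaits the slow backend in the background."""

    def __init__(self, backend):
        self.backend = backend
        self.pending = {}  # request id -> asyncio.Task waiting on the backend

    def submit(self, request_id: str, amount: int) -> str:
        # Must be called from inside a running event loop (create_task needs one).
        self.pending[request_id] = asyncio.create_task(self.backend(request_id, amount))
        return "ACCEPTED"

    async def result(self, request_id: str) -> dict:
        # Wait for, then forget, the backend's eventual response.
        return await self.pending.pop(request_id)

async def main():
    mock = AsyncMockService(slow_authorization_server)
    acks = [mock.submit("r1", 100), mock.submit("r2", 900)]
    # Both acknowledgements arrived without waiting; the backend calls run concurrently.
    results = [await mock.result("r1"), await mock.result("r2")]
    return acks, results

print(asyncio.run(main()))
```

Running the backend calls as tasks lets the two slow authorizations overlap instead of blocking the caller one after the other.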
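One common way to implement such routing is with a correlation ID carried on every message; the sketch below assumes that design. The `Message` fields and service names are hypothetical, and the routing table is kept in memory for simplicity.

```python
from dataclasses import dataclass

@dataclass
class Message:
    correlation_id: str  # hypothetical header linking a response to its request
    body: str

class MessageCheckpoint:
    """Routes each response back to the service that sent the matching request."""

    def __init__(self):
        self._origin = {}     # correlation id -> sending service
        self.delivered = {}   # service -> responses delivered to it

    def record_request(self, sender: str, msg: Message) -> None:
        self._origin[msg.correlation_id] = sender

    def route_response(self, msg: Message) -> str:
        if msg.correlation_id not in self._origin:
            raise KeyError(f"no request recorded for correlation id {msg.correlation_id}")
        sender = self._origin.pop(msg.correlation_id)
        self.delivered.setdefault(sender, []).append(msg.body)
        return sender

checkpoint = MessageCheckpoint()
checkpoint.record_request("service A", Message("1", "request A"))
checkpoint.record_request("service B", Message("2", "request B"))
checkpoint.record_request("service C", Message("3", "request C"))
# Responses may come back from the mock service in any order.
for response in [Message("2", "response B"), Message("3", "response C"), Message("1", "response A")]:
    checkpoint.route_response(response)
print(checkpoint.delivered)
```

Popping the correlation ID after delivery also lets a test catch duplicate or unsolicited responses, which raise `KeyError`.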
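A minimal in-process sketch of a performance monitor, assuming service calls can be wrapped in test code. The `balance-check` name and `check_balance` function are hypothetical, and the remote call is simulated with `time.sleep`; production monitoring would normally use dedicated agents rather than a decorator.

```python
import statistics
import time
from collections import defaultdict
from functools import wraps

class PerformanceMonitor:
    """Collects wall-clock response times per named service call."""

    def __init__(self):
        self.samples = defaultdict(list)  # service name -> durations in seconds

    def timed(self, name):
        def decorator(func):
            @wraps(func)
            def wrapper(*args, **kwargs):
                start = time.perf_counter()
                try:
                    return func(*args, **kwargs)
                finally:
                    # Recorded even if the call raises, so failures are timed too.
                    self.samples[name].append(time.perf_counter() - start)
            return wrapper
        return decorator

    def report(self):
        """Return (count, mean ms, max ms) for each service."""
        return {
            name: (len(s), 1000 * statistics.mean(s), 1000 * max(s))
            for name, s in self.samples.items()
        }

monitor = PerformanceMonitor()

@monitor.timed("balance-check")  # hypothetical service name
def check_balance(account):
    time.sleep(0.01)  # stands in for a remote service call
    return 250

for _ in range(5):
    check_balance("12345")
print(monitor.report())
```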

End-to-End Testing (DEPLOY AND MAINTAIN in SOA cycle)

End-to-end tests consider complete business cases. The goal is to ensure proper integration with other business systems (e.g. an ERP system, a mail server, a web application) in addition to our own services.

All services and applications of the system as well as of other business systems are taken into consideration. End-to-end tests are used to test complete sense & respond business scenarios in order to verify their correct behaviour from an end user perspective.

During this stage, the systems involved in each business case are tested over the different protocols they use, such as HTTP, HTTPS, SMTP, SOAP, XML-RPC and IRC. Multiple agents are deployed in the target environment to collect data.

For example, consider a bank-to-bank transfer. Bank A connects to its accounts database to check the balance, authorizes the transaction and pushes it to the transfer message queue. The service from Bank A then communicates with the standard regulatory authorities and with services from Bank B. Bank B queues the request in its message queue, validates the beneficiary account and approves the request. On approval, the request is sent to the web application, and an e-mail with the transaction details is sent to the account holder.

In this case, the services communicate with legacy banking applications, a mail server, a web application, etc., over different protocols such as SOAP, HTTP, SMTP and HTTPS. As in system testing, mock services and message checkpoints are used to handle requests and route response messages.

During this stage, the test scripts created in the first three stages are reused as appropriate. For example, when a new version of a service is released, it goes through all four stages, with end-to-end testing as the final boundary.

Performance monitors are also deployed to measure the overall response time of an end-to-end business transaction.
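The bank-to-bank transfer can be sketched as an end-to-end test that asserts on the final state of every system the business case touches, not just on one service's reply. All systems here are hypothetical in-memory stand-ins, and the regulatory step is omitted; in a real run these would be the deployed services, queues, web application and mail server.

```python
from collections import deque
from dataclasses import dataclass, field

@dataclass
class Systems:
    """Hypothetical in-memory stand-ins for every system in the transfer scenario."""
    bank_a: dict = field(default_factory=lambda: {"A-1": 1000})
    bank_b: dict = field(default_factory=lambda: {"B-7": 50})
    transfer_queue: deque = field(default_factory=deque)  # Bank A's outgoing queue
    bank_b_queue: deque = field(default_factory=deque)    # Bank B's incoming queue
    web_app: list = field(default_factory=list)           # approved transfers shown online
    outbox: list = field(default_factory=list)            # e-mails to account holders

def run_transfer(s: Systems, src: str, dst: str, amount: int) -> None:
    # Bank A checks the balance, authorizes, and queues the transfer.
    if s.bank_a[src] < amount:
        return
    s.bank_a[src] -= amount
    s.transfer_queue.append((src, dst, amount))
    # Regulatory checks are omitted; the message is handed to Bank B's queue.
    s.bank_b_queue.append(s.transfer_queue.popleft())
    # Bank B validates the beneficiary account and approves the request.
    src, dst, amount = s.bank_b_queue.popleft()
    if dst in s.bank_b:
        s.bank_b[dst] += amount
        s.web_app.append((src, dst, amount))
        s.outbox.append(f"{src}: {amount} transferred to {dst}")

def test_end_to_end_transfer():
    s = Systems()
    run_transfer(s, "A-1", "B-7", 200)
    # The end-to-end check covers every system touched by the business case.
    assert s.bank_a["A-1"] == 800 and s.bank_b["B-7"] == 250
    assert not s.transfer_queue and not s.bank_b_queue
    assert s.web_app == [("A-1", "B-7", 200)]
    assert s.outbox == ["A-1: 200 transferred to B-7"]

test_end_to_end_transfer()
```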
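A minimal sketch of re-running the four SISE stages for a new service version. The stage-to-script registry and script names are hypothetical, and stopping at the first failing stage is one possible design choice (it avoids running expensive end-to-end tests on a build that already fails lower-level checks), not necessarily the article's.

```python
from typing import Callable, List, Tuple

# Hypothetical registry entry: a stage name and the test scripts it owns.
Stage = Tuple[str, List[Callable[[], bool]]]

def run_sise(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order; stop at the first stage with a failing script."""
    log = []
    for name, scripts in stages:
        failed = [script.__name__ for script in scripts if not script()]
        if failed:
            log.append(f"{name}: FAILED {failed}")
            return False, log
        log.append(f"{name}: passed {len(scripts)} script(s)")
    return True, log

# Placeholder scripts; real ones would be the tests built in each stage.
def service_known_good(): return True
def fx_message_contract(): return True
def atm_withdrawal_system(): return False  # e.g. a regression in the new version
def bank_transfer_e2e(): return True

ok, log = run_sise([
    ("service", [service_known_good]),
    ("integration", [fx_message_contract]),
    ("system", [atm_withdrawal_system]),
    ("end-to-end", [bank_transfer_e2e]),
])
print(ok, log)
```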

SOA Governance

Governance is a key factor in the success of any SOA implementation. SOA governance refers to the standards and policies that govern the design, build and implementation of an SOA solution, and the policies that must be enforced at runtime. SOA governance testing is not conducted as a separate activity; governance test cases are part of the test scripts in each of the testing stages.
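One way to embed governance checks in every stage is a shared set of policy functions that each test script applies to the messages it captures. The three policies below (HTTPS only, a version header, no unmasked 16-digit card numbers) and the `X-Service-Version` header are hypothetical examples; real policies come from the organization's own SOA governance standards.

```python
import re
from dataclasses import dataclass

@dataclass
class CapturedMessage:
    endpoint: str
    headers: dict
    body: str

# Hypothetical example policies.
def uses_https(m):
    return m.endpoint.startswith("https://")

def declares_service_version(m):
    return "X-Service-Version" in m.headers  # hypothetical header name

def no_card_numbers_exposed(m):
    return re.search(r"\b\d{16}\b", m.body) is None

GOVERNANCE_POLICIES = [uses_https, declares_service_version, no_card_numbers_exposed]

def governance_violations(m):
    """Names of the governance policies this message breaks."""
    return [policy.__name__ for policy in GOVERNANCE_POLICIES if not policy(m)]

msg = CapturedMessage("http://bank-a.example/transfer",
                      {"X-Service-Version": "2.1"},
                      "<card>4111111111111111</card>")
print(governance_violations(msg))  # ['uses_https', 'no_card_numbers_exposed']
```

Because the policies live in one list, a script at any stage can call `governance_violations` on each message, so governance is tested continuously rather than in a separate pass.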

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.