6 Ways to Speed Up Your Tests

When you’re driven by the enthusiasm of having good test coverage for your application, you automate the entire workflow**. As a result, the test-suite becomes huge and the safety net of these tests becomes a bottleneck. What starts off as a way to give faster feedback now becomes a cause of delayed code commits.

This is a common challenge faced by most teams. We faced a similar situation, but we overcame this challenge by taking advantage of the automated test suite. Here’s how we did it:

#1 - Identify flaky tests

What are flaky tests?

Let’s take a typical scenario from our projects: it’s been a couple of hours and you’re still waiting for a successful build to commit your changes on top of. Not wanting to delay your commit further, you decide to fix the failing tests yourself. To your amazement, you discover that all the tests pass on re-run. Tests that fail randomly and pass on re-run without code changes are known as ‘flaky tests’.
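One way to make this definition concrete is to re-run a suspect test several times against unchanged code and look at the mix of outcomes. The sketch below is only an illustration: `classify_test` and the three sample checks are hypothetical names, not part of any test framework.

```python
from typing import Callable


def classify_test(test: Callable[[], None], runs: int = 5) -> str:
    """Run `test` several times against unchanged code and classify it.

    Returns "stable-pass", "stable-fail", or "flaky".
    """
    outcomes = []
    for _ in range(runs):
        try:
            test()
            outcomes.append(True)
        except AssertionError:
            outcomes.append(False)
    if all(outcomes):
        return "stable-pass"
    if not any(outcomes):
        return "stable-fail"
    return "flaky"  # mixed results with no code change in between


# Hypothetical checks used only to exercise the helper.
_calls = {"n": 0}


def timing_dependent_check():
    # Simulates a race condition: fails on every third call.
    _calls["n"] += 1
    assert _calls["n"] % 3 != 0


def always_green_check():
    assert 1 + 1 == 2


def always_red_check():
    assert 1 + 1 == 3
```

A test that lands in the "flaky" bucket is a candidate for investigation rather than for a blind re-run.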

As Martin says in his blog, these flaky tests can take away the benefits of your bug detector (the automated test-suite). Flakiness can completely break confidence in the test-suite. The benefits of an automated test-suite can be enjoyed only when it’s reliable and gives timely alerts.

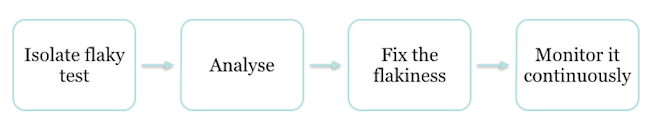

Therefore, our first task at hand is to get a green build and the confidence back. As Martin suggests, follow this workflow:

By now, you’ll probably have a green build and you can commit your changes. Any test failing hereafter will be for genuine reasons.

#2 - Break the tests

Another way to quickly get feedback from tests is to run them in multiple categories. Break the tests into categories according to functionality or the nature of the tests:

- Smoke tests - the tests needed to ensure that critical functionality (i.e. business and technical behaviors) is intact.
- Regression tests - the tests which cover all other workflows.

The smoke tests could be executed as part of every commit, and the regression tests could be executed after a batch of commits or on a schedule. In this manner, we run only the needed subset on every commit. This makes sure that commits aren’t delayed and there is no compromise on tests.
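As a minimal sketch of this split, the snippet below tags tests with a category and builds a separate suite per category using Python's standard `unittest` module. The `category` decorator, `build_suite` helper and `CheckoutTests` class are hypothetical; most test frameworks offer their own tagging mechanism that would serve the same purpose.

```python
import unittest


def category(name):
    """Decorator that tags a test method with a category label."""
    def mark(func):
        func.category = name
        return func
    return mark


class CheckoutTests(unittest.TestCase):  # hypothetical test class
    @category("smoke")
    def test_user_can_log_in(self):
        self.assertTrue(True)

    @category("regression")
    def test_discount_codes_stack(self):
        self.assertTrue(True)

    @category("regression")
    def test_invoice_footer_text(self):
        self.assertTrue(True)


def build_suite(test_class, wanted):
    """Collect only the test methods tagged with the `wanted` category."""
    loader = unittest.TestLoader()
    suite = unittest.TestSuite()
    for method_name in loader.getTestCaseNames(test_class):
        method = getattr(test_class, method_name)
        if getattr(method, "category", None) == wanted:
            suite.addTest(test_class(method_name))
    return suite


smoke_suite = build_suite(CheckoutTests, "smoke")            # run on every commit
regression_suite = build_suite(CheckoutTests, "regression")  # run on a schedule
```

The commit build would then run only `smoke_suite`, while a scheduled build runs `regression_suite`.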

If categorising the tests is a lot to do, there is always an alternative solution - parallelisation.

Parallelisation: There are a lot of parallelisation tools available in the market. If you have the luxury of multiple VMs and test parallelisation tools like Selenium Grid and Test Load Balancer (TLB), the execution time can be brought down drastically.
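To see why parallelisation helps, consider splitting a suite across workers using timings from an earlier run. The sketch below is a simple greedy split and is not a description of how Selenium Grid or TLB work internally; `split_by_duration` and the timings are made up for illustration.

```python
import heapq


def split_by_duration(durations, workers):
    """Greedy split: give the slowest remaining test to the least-loaded worker."""
    heap = [(0.0, index) for index in range(workers)]  # (total seconds, worker)
    heapq.heapify(heap)
    buckets = [[] for _ in range(workers)]
    slowest_first = sorted(durations.items(), key=lambda item: item[1], reverse=True)
    for name, seconds in slowest_first:
        load, index = heapq.heappop(heap)
        buckets[index].append(name)
        heapq.heappush(heap, (load + seconds, index))
    return buckets


# Hypothetical timings (in seconds) recorded from a previous build.
timings = {
    "test_checkout": 90,
    "test_search": 60,
    "test_login": 30,
    "test_profile": 30,
    "test_logout": 10,
}
buckets = split_by_duration(timings, workers=2)
loads = [sum(timings[name] for name in bucket) for bucket in buckets]
```

With these numbers the suite takes 220 seconds when run serially, while the slower of the two workers finishes in 120 seconds.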

Once the delayed commits are taken care of, we can actually start looking at ways to improve the tests. The methods suggested above can bring in some immediate relief, but it isn’t permanent.

#3 - Create test data smartly

A good automation test is the one which creates and cleans up the needed data after use.

By following this rule of thumb, however, we can end up with tests which spend more time creating test data than actually testing! We can't compromise on good practices in order to have faster builds, can we?

Most automated tests create test data through the browser. It is far more effective to create test data directly in the database. DB calls are cost and time efficient. With less UI interaction, the test becomes faster and more reliable. However, if a project has complex DB interactions, this approach may not be practical.
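A minimal sketch of this idea, assuming a simple `customers` table: the helper inserts the row a test needs straight into the database and removes it afterwards, so no browser steps are spent on setup. The table, the `seeded_customer` helper and the in-memory SQLite database are all assumptions standing in for a real application's schema and database.

```python
import sqlite3
from contextlib import contextmanager


@contextmanager
def seeded_customer(conn, name, email):
    """Insert a customer row directly, hand its id to the test, then delete it."""
    cursor = conn.execute(
        "INSERT INTO customers (name, email) VALUES (?, ?)", (name, email)
    )
    conn.commit()
    customer_id = cursor.lastrowid
    try:
        yield customer_id
    finally:
        # Clean up even if the test body raised an exception.
        conn.execute("DELETE FROM customers WHERE id = ?", (customer_id,))
        conn.commit()


def count_customers(conn):
    return conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]


# An in-memory database stands in for the application's real database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")

with seeded_customer(conn, "Asha", "asha@example.com") as customer_id:
    rows_during_test = count_customers(conn)  # the UI test would run here
rows_after_test = count_customers(conn)
```

The test still creates and cleans up its own data, as the rule of thumb asks, but does so without driving the UI.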

#4 - Think before you try xpaths

I used to be a fan of xpaths. Most of us fail to realise that locators contribute to the execution speed of tests in a big way. When xpaths are used for object identification, a lot of background processing and filtering happens. Refer to this to understand more about how xpaths are processed.

Xpath identification is generally slower than other forms of object identification. Therefore, it always helps to identify objects by direct attributes like ID, Name, etc. You can use CSS selectors as an alternative, but try to keep xpaths as your last resort. The results of these locator strategies show up gradually over time.
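One way to encode this preference is a small helper that always picks the most direct locator available for an element. This is only a sketch: `pick_locator`, `PREFERENCE` and the sample locators are hypothetical, and the strategy names are written as plain strings so the snippet runs without a browser driver installed.

```python
# Strategy names match the string values Selenium's Python bindings use for
# By.ID, By.NAME, By.CSS_SELECTOR and By.XPATH.
PREFERENCE = ["id", "name", "css selector", "xpath"]


def pick_locator(candidates):
    """Return the (strategy, value) pair with the most direct strategy available."""
    for strategy in PREFERENCE:
        if strategy in candidates:
            return strategy, candidates[strategy]
    raise ValueError("no supported locator strategy supplied")


# Hypothetical locators for a login button.
login_button = {
    "xpath": "//form[@id='login']//button[@type='submit']",
    "css selector": "form#login button[type='submit']",
    "id": "login-submit",
}

strategy, value = pick_locator(login_button)  # the ID wins over CSS and xpath
```

With Selenium's Python bindings the result could be passed on as `driver.find_element(strategy, value)`, so an xpath is used only when nothing more direct exists.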

#5 - Add more caffeine to tests

Wait statements are added in tests when we navigate between pages, or when we wait for an asynchronous call to finish. These wait statements are deadly: they make tests slow, less reliable and at times flaky/brittle.

Try to consciously eliminate wait statements in your tests. Replace all generic wait statements with conditional waits (such as waiting for a page load to finish or an element to appear). A conditional wait waits only for a specific action/event to occur and proceeds with execution as soon as it's complete.
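The difference is easy to see in a small polling helper. The sketch below is a hand-rolled `wait_until`, written here only for illustration; browser automation libraries usually ship their own equivalent (Selenium's Python bindings, for example, provide `WebDriverWait`). The `request_finished` function is a hypothetical stand-in for an asynchronous call.

```python
import time


def wait_until(condition, timeout=10.0, poll_interval=0.1):
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result  # proceed as soon as the condition holds
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)


# Hypothetical stand-in for an asynchronous call that completes on the third poll.
polls = {"count": 0}


def request_finished():
    polls["count"] += 1
    return polls["count"] >= 3


started = time.monotonic()
wait_until(request_finished, timeout=5.0, poll_interval=0.05)
elapsed = time.monotonic() - started  # well under the 5-second ceiling
```

A fixed `time.sleep(5)` would always cost five seconds here. The conditional wait returns after about two short polls, and the timeout only matters when something is genuinely wrong.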

#6 - Leverage the efficiency of Unit-Tests

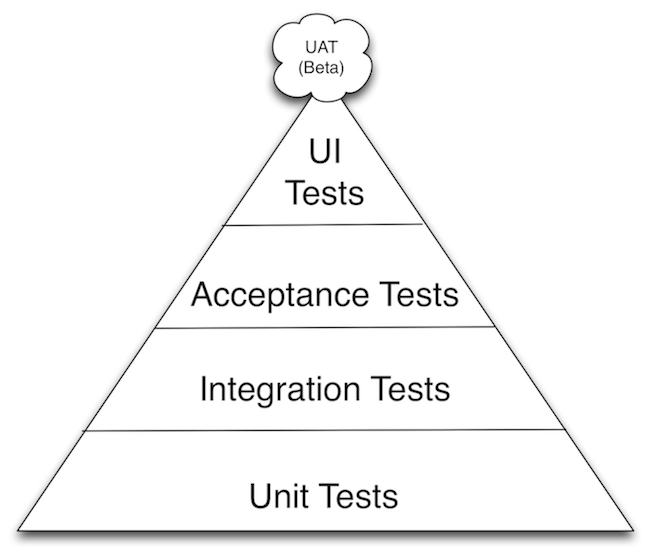

The test pyramid tells us to add more tests to the lower layers of the pyramid and fewer at the end-to-end layer. Tests can be identified at unit, integration and UI levels.

Tests at the lower levels of the pyramid are faster than UI tests. Identify tests which don't necessarily need a browser. Actions like verifying the contents of a downloaded file or an email can be tricky and time consuming when tested in a browser. Unit tests can handle these checks efficiently with the help of mocks and stubs.
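As an illustration of the email case, the sketch below checks the contents of a confirmation email at unit level by replacing the mail gateway with a mock. `send_order_confirmation` and the order data are hypothetical application code invented for this example; only `unittest.mock.Mock` comes from Python's standard library.

```python
from unittest.mock import Mock


def send_order_confirmation(mailer, order):
    """Hypothetical application code: build a confirmation email and send it."""
    subject = f"Order {order['id']} confirmed"
    body = f"Hi {order['customer']}, your total is {order['total']:.2f}."
    mailer.send(to=order["email"], subject=subject, body=body)


# The mock replaces a real mail gateway, so no inbox or browser is involved.
mailer = Mock()
order = {"id": 42, "customer": "Asha", "email": "asha@example.com", "total": 19.5}
send_order_confirmation(mailer, order)

# Verify the exact email contents in milliseconds instead of through the UI.
mailer.send.assert_called_once_with(
    to="asha@example.com",
    subject="Order 42 confirmed",
    body="Hi Asha, your total is 19.50.",
)
```

The end-to-end suite then only needs to confirm that an order can be placed, not what every email says.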

Identifying and pushing tests to a lower level makes them faster and more reliable. Join hands with developers, review the unit-tests and add more at the unit level. Through these reviews, you can avoid duplicating tests across multiple layers. Add integration tests, if applicable. Ensure only high-level workflows are covered in end-to-end/UI tests.

These nifty ways helped my team overcome the side-effects of a large test-suite and reap all benefits of an automated test-suite. I'm sure there are more, so share your ideas with us!

workflow** - From a more abstract or higher-level perspective, a workflow may be considered a view or representation of real work. In terms of testing, it means the scripted end-to-end tests of paths which are expected to be used by the end user.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.