Machine learning (ML) and artificial intelligence (AI) have become ubiquitous in everyday business. However, fully exploiting the potential of ML by integrating it into actual business processes remains a challenge. Many ML experiments never get beyond the prototype stage; according to a report by VentureBeat, this applies to 87% of all ML projects. One reason is that at the prototype stage, the bulk of the work still lies ahead of the team: integrating the solution into the company's existing IT infrastructure, developing it further and maintaining it. This is where automation becomes essential, especially if ML is to be deployed at scale. In their collaboration, Thoughtworks and AWS have found that specific practices and tools are needed to truly realize the business benefits of ML.

When we implemented our first machine learning projects with customers a few years ago, we already had a lot of experience with DevOps in traditional software projects. We brought this mindset, which relies on collaboration in interdisciplinary teams, with us into the world of data science. Until then, it had been customary for data scientists to work separately from developers: one team was responsible for building models, another for operationalizing them. This resulted in friction and inefficiencies.

Following the DevOps idea, we merged the two teams in each project. This had the intended effect: data scientists and developers, data engineers and DevOps engineers developed a mutual understanding of each other's ways of working and problems, so that they could find solutions together. Team members developed empathy for each other's roles and were able to work together more productively, and the path to the first version of a product became noticeably shorter.

To develop the product further, we typically rely on Continuous Delivery. In an iterative process, we develop a production-ready application and then maintain it following the same pattern. We have also applied these principles to the development of machine learning applications.

To account for the differences between work on conventional applications and work on ML applications, we have introduced the terms MLOps and CD4ML (Continuous Delivery for Machine Learning). ML projects come with their own challenges, above all added complexity: a machine learning application consists of many moving parts. It is based on:

- data that is constantly changing,
- a model that can be trained using different parameters,
- and code that must be adapted to the latest requirements of the environment it runs in, such as a website.

Changes to these components must be documented and tracked. In conventional development, Git has proven its worth for code, but a trained ML model, which is typically a large binary artifact, cannot be conveniently stored in a Git repository. Each of the ML building blocks therefore requires its own version control approach.
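One common way to version large binary artifacts such as trained models is to store them outside the repository, addressed by their content hash, and to commit only a small pointer file to Git. The following is a minimal sketch of that idea using only the Python standard library. The store layout, the `store_artifact`/`load_artifact` functions and the JSON pointer format are hypothetical and do not represent the API of any particular tool.

```python
import hashlib
import json
import shutil
from pathlib import Path


def file_digest(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, reading it in 1 MiB chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def store_artifact(path: Path, store_dir: Path, pointer_path: Path) -> str:
    """Copy an artifact into a content-addressed store and write a small
    JSON pointer file (the part that would be committed to Git).

    Hypothetical layout: <store_dir>/<first two hex chars>/<full digest>.
    """
    digest = file_digest(path)
    target = store_dir / digest[:2] / digest
    if not target.exists():  # identical content is stored only once
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(path, target)
    pointer = {"file": path.name, "sha256": digest, "size": path.stat().st_size}
    pointer_path.write_text(json.dumps(pointer, indent=2))
    return digest


def load_artifact(pointer_path: Path, store_dir: Path) -> Path:
    """Resolve a pointer file to the stored artifact and verify its hash."""
    pointer = json.loads(pointer_path.read_text())
    digest = pointer["sha256"]
    target = store_dir / digest[:2] / digest
    if file_digest(target) != digest:
        raise ValueError(f"artifact {target} is corrupted")
    return target
```

Because the pointer file changes whenever the model bytes change, the Git history still records exactly which model version belongs to which commit, while the heavy artifact lives elsewhere.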

When data scientists work on a model, they often test many hypotheses simultaneously. These experiments also need to be documented appropriately; only then is it possible to understand which setup delivered the best results. Documentation is also a prerequisite for auditability: it is crucial to keep track of which data, which code version and which parameters were used for each experiment.
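A minimal sketch of what such an experiment record might capture, in plain Python: each run stores a fingerprint of the training data, the code version (for example a Git commit hash), the parameters and the resulting metrics, so the best setup can be identified and audited later. The `ExperimentRun` class, its field names and all example values below are hypothetical and made up for illustration; in practice, a dedicated experiment-tracking service would hold this information.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


def fingerprint(data: bytes) -> str:
    """Short content hash identifying the exact training data used."""
    return hashlib.sha256(data).hexdigest()[:12]


@dataclass(frozen=True)
class ExperimentRun:
    run_id: str
    data_version: str  # fingerprint of the training data
    code_version: str  # e.g. a Git commit hash (made-up values below)
    params: dict = field(default_factory=dict)
    metrics: dict = field(default_factory=dict)


def best_run(runs: list[ExperimentRun], metric: str, higher_is_better: bool = True) -> ExperimentRun:
    """Return the run with the best value for the given metric."""
    choose = max if higher_is_better else min
    return choose(runs, key=lambda r: r.metrics[metric])


def audit_log(runs: list[ExperimentRun]) -> str:
    """Serialize all runs so it stays traceable which data, code and
    parameters produced which result."""
    return json.dumps([asdict(r) for r in runs], indent=2, sort_keys=True)


# Hypothetical example: three runs on the same data, two code versions.
data_v1 = fingerprint(b"customer_id,label\n1,0\n2,1\n")
runs = [
    ExperimentRun("run-1", data_v1, "3f2a9c1", {"learning_rate": 0.1, "max_depth": 4}, {"auc": 0.81}),
    ExperimentRun("run-2", data_v1, "3f2a9c1", {"learning_rate": 0.05, "max_depth": 6}, {"auc": 0.84}),
    ExperimentRun("run-3", data_v1, "7b1e0d4", {"learning_rate": 0.05, "max_depth": 8}, {"auc": 0.83}),
]
```

Here `best_run(runs, "auc").run_id` returns `"run-2"`, and `audit_log(runs)` yields a record linking that result to its data fingerprint, commit and parameters.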

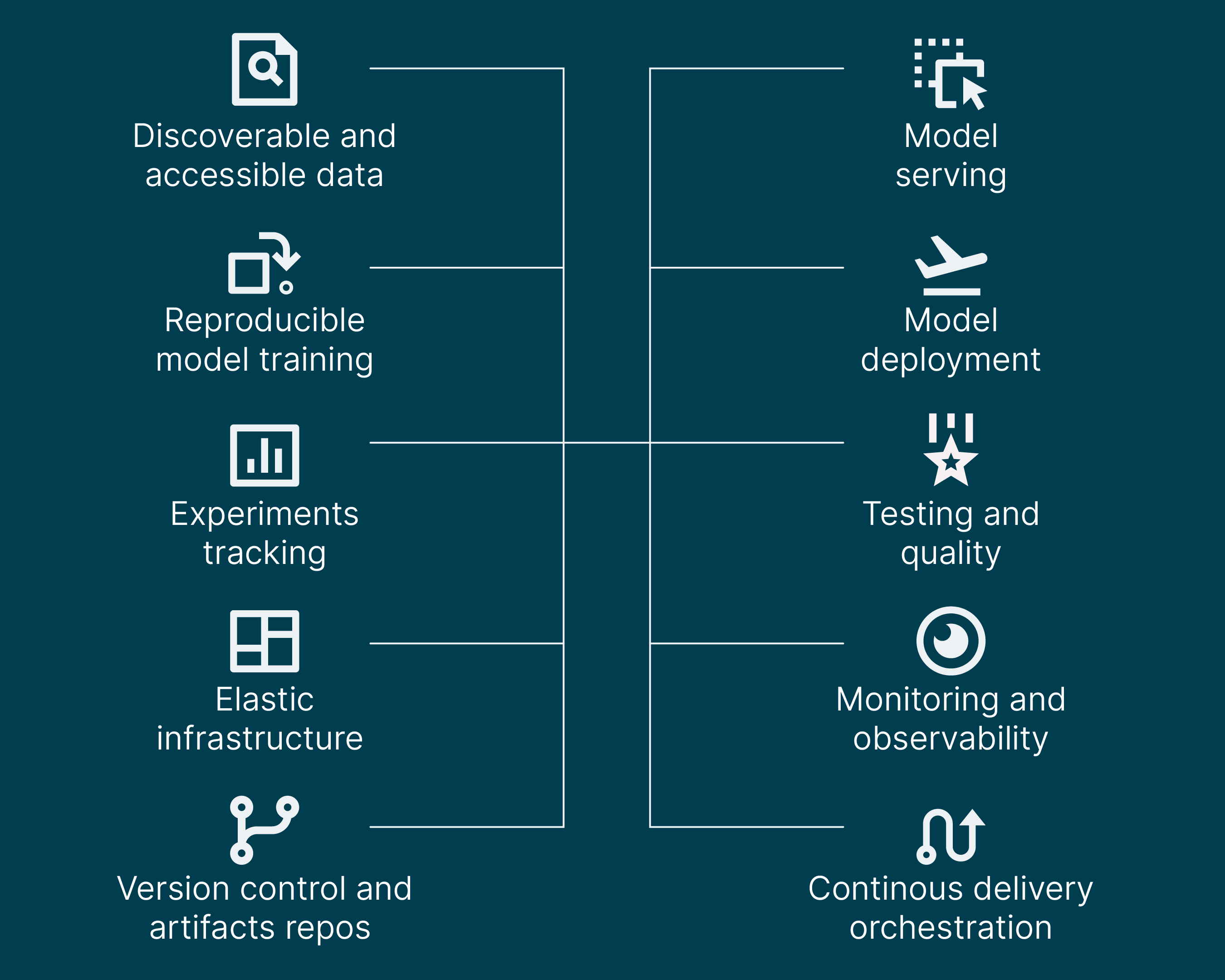

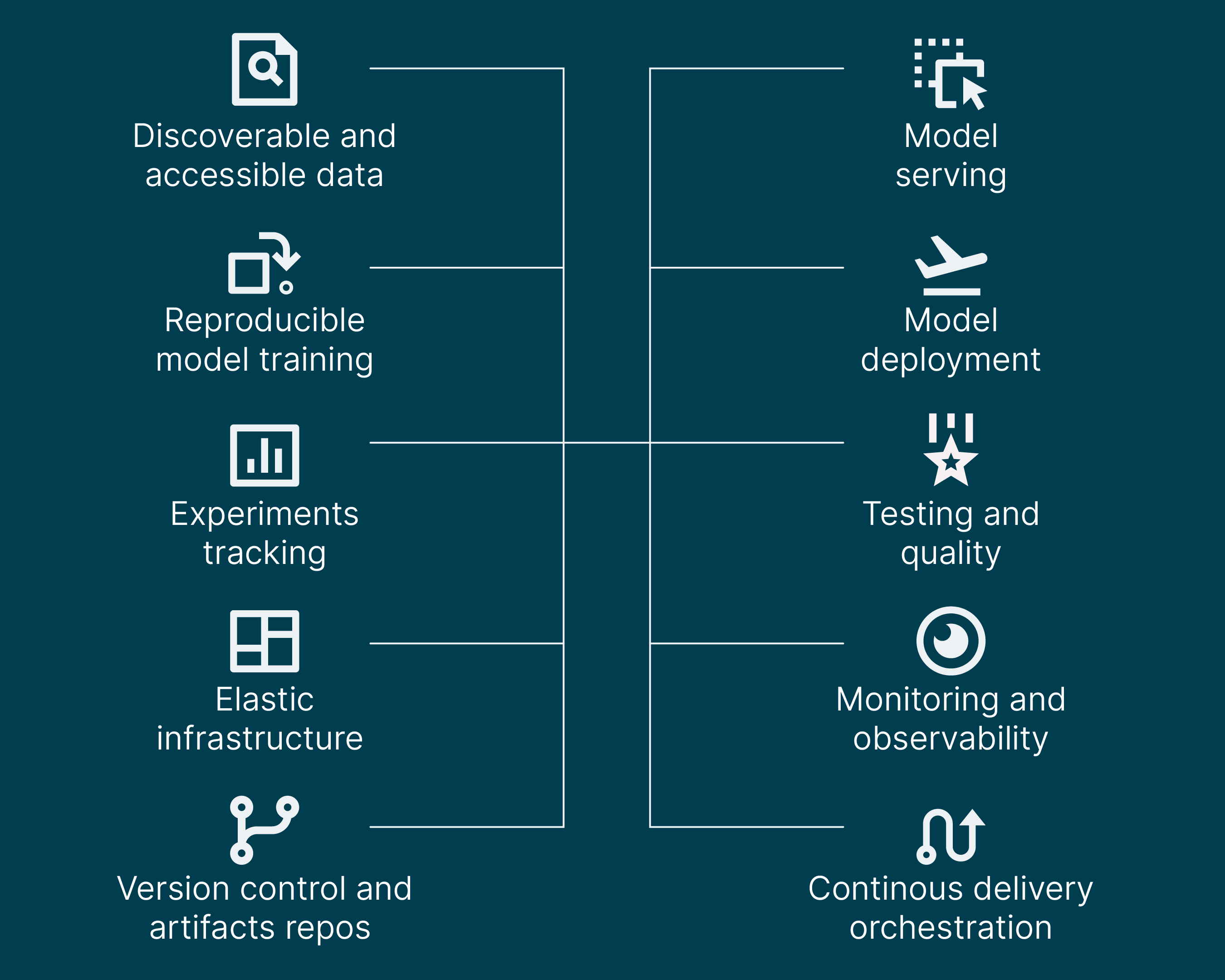

Version control and experiment tracking are only two of the many problems for which CD4ML requires dedicated infrastructure and tools.

AWS offers dedicated cloud services, managed services and pre-trained ML models for these challenges. They support interdisciplinary teams throughout the entire workflow, from data exploration and preparation to the development, testing and deployment of ML solutions. Active model monitoring, triggering of re-training and integration into the company's CI/CD processes are covered as well.

These integrated tools reflect the interdisciplinary nature of the teams. In a unified user interface, developers and data scientists alike find the tools they need to develop their project together and deploy it successfully and at scale. One of these managed ML services is Amazon SageMaker. It allows a large number of models to be handled efficiently and, above all, enables rapid experimentation, which is a prerequisite for innovation. By automating many steps, Amazon SageMaker accelerates experimentation and iteration. At the same time, the underlying, elastically scalable cloud infrastructure is managed automatically: Amazon SageMaker scales it up or down as needed, so teams get the performance they need at optimized cost. In addition, cost-optimized compute options for training and inference are available through the managed service.
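The idea of scaling inference capacity up or down with demand can be illustrated with a simple proportional target-tracking rule: capacity is adjusted so that a load metric (here, requests per instance) stays near a target value. This is a generic, simplified sketch with hypothetical names and limits, not Amazon SageMaker's actual scaling algorithm; on AWS, endpoint scaling is configured through scaling policies rather than hand-written code like this.

```python
import math


def desired_instances(
    current_instances: int,
    invocations_per_instance: float,
    target_per_instance: float,
    min_instances: int = 1,
    max_instances: int = 10,
) -> int:
    """Simplified, hypothetical proportional target-tracking rule.

    Total load is current_instances * invocations_per_instance. The new
    capacity is the smallest instance count that brings per-instance load
    to or below the target, clamped to [min_instances, max_instances].
    """
    if target_per_instance <= 0:
        raise ValueError("target_per_instance must be positive")
    total_load = current_instances * invocations_per_instance
    needed = math.ceil(total_load / target_per_instance)
    return max(min_instances, min(max_instances, needed))
```

For example, two instances each handling 150 requests against a target of 100 scale out to three, while a nearly idle fleet shrinks to the configured minimum; the clamp keeps costs bounded and guarantees a baseline of availability.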

An important goal of CD4ML is to continuously update models deployed in production based on real-world data. This requires regular re-training, which can itself introduce performance degradation or errors. If a model's behavior in production deviates from expectations, the need for re-training is detected automatically. The results of such re-training, like any other change, are then quality-checked automatically. Managed services such as Amazon SageMaker make it possible to test a new version automatically and to deploy it automatically only if the tests pass. If the quality metrics fall outside the desired range, the release is held back and a data scientist can be notified of the problem.
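The detect, retrain, test and release loop described above can be sketched as a small decision function. The drift check (comparing live accuracy against the accuracy measured at deployment), the tolerance, the quality threshold and the `retrain` and `notify` callbacks are all hypothetical placeholders; a real pipeline would plug in its own monitoring signals, evaluation suite and deployment step.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class GateResult:
    retrained: bool
    deployed: bool
    reason: str


def needs_retraining(baseline_accuracy: float, live_accuracy: float, tolerance: float = 0.05) -> bool:
    """Flag retraining when live accuracy drops more than `tolerance` below the baseline."""
    return baseline_accuracy - live_accuracy > tolerance


def retrain_and_gate(
    baseline_accuracy: float,
    live_accuracy: float,
    retrain: Callable[[], float],
    min_accuracy: float,
    notify: Callable[[str], None],
) -> GateResult:
    """Retrain if the model has degraded, then release the candidate only
    if it passes the quality gate; otherwise hold it back and notify.

    `retrain` is a hypothetical callback that trains a candidate model and
    returns its accuracy on a held-out evaluation set.
    """
    if not needs_retraining(baseline_accuracy, live_accuracy):
        return GateResult(False, False, "model within tolerance")
    candidate_accuracy = retrain()
    if candidate_accuracy >= min_accuracy:
        return GateResult(True, True, f"deployed candidate ({candidate_accuracy:.2f})")
    notify(f"candidate accuracy {candidate_accuracy:.2f} below {min_accuracy:.2f}; release held back")
    return GateResult(True, False, "quality gate failed")
```

Keeping the gate as a pure function of its inputs makes the release decision itself easy to test, which is part of what lets teams trust the automation.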

By automating retraining and many other routine tasks throughout the workflow, the ML team can focus on the problems that really matter. We have found that this often speeds teams up and increases their appetite for experimentation. Teams no longer have to worry that changing one step in the development cycle will break something in production. This builds confidence and ultimately leads to faster iteration cycles and to machine learning applications whose models are always up to date. In addition, Amazon SageMaker separates notebook, training and inference instances, which allows the environment to remain consistent between experimentation and production deployment. This helps prevent errors and unexpected changes in model behavior in production.

With MLOps, it is important to tackle the right problems: actual business problems that ML can genuinely solve. Common use cases include:

- personalizing the user experience,
- predicting demand for specific products, for example to optimize logistics and supply chains,
- automated processing of document workflows, such as customer forms,
- speech recognition and interactive bots.

In addition, an organization must be ready to use ML. Machine learning models make predictions and statistical statements that, by nature, are not always 100% accurate. On the one hand, this means an organization must be prepared for the fact that not every experiment will succeed. On the other hand, for the same reason, ML is not the answer to every business problem. A conscious decision must therefore be made as to whether ML is the right choice for a given task.

At the same time, the MLOps culture must be fully supported within the organization. A culture has to be allowed to develop organically, and it needs continuous nurturing and a safe space to do so. New tools alone are not enough to use ML productively and at scale. Combined with new ways of working together, however, they can help make the different perspectives on the ML workflow transparent and integrate them with one another. This makes it possible to use machine learning models with a high degree of automation in a safe, traceable and scalable way.