In your digital life, when you see helpful predictions for anything from a grammar change in a document to an estimated arrival time for your home delivery, you're seeing real-time inference. Real-time inference is an ideal solution when your business objective needs low-latency, interactive predictions. Batch inference, on the other hand, generates predictions over large datasets. It doesn't need the sub-second latency that real-time inference provides, so it may be simpler and more resource-efficient. We have already briefly covered how to blur the lines between live and batch inference from a batch data processing perspective. Let's recap and dive a bit deeper into other challenges, such as live-batch inference skew and low latency.

Live-batch inference skew is a difference between the predictions produced by real-time inference and those produced by batch inference for the same input. This skew can be caused by:

- A discrepancy between the data extraction processes of your real-time and batch inference pipelines,
- A discrepancy between data type implementations (such as floating point numbers) in different data environments, or
- A difference between the code used to generate predictions in each pipeline.
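One way to surface these causes is to score the same records through both paths and reconcile the results. The sketch below is a minimal illustration rather than a production check: the linear `score` function, the weights, the record ids and the tolerance are all hypothetical, and the 32-bit round trip only simulates a data environment that stores features as single-precision floats.

```python
import struct


def to_float32(x: float) -> float:
    """Round-trip a 64-bit Python float through a 32-bit float."""
    return struct.unpack("f", struct.pack("f", x))[0]


def score(features, weights):
    """Hypothetical linear model shared by both inference paths."""
    return sum(f * w for f, w in zip(features, weights))


def skew_report(live, batch, abs_tol=1e-12):
    """Reconcile two {record_id: prediction} mappings."""
    shared = sorted(live.keys() & batch.keys())
    diffs = {k: abs(live[k] - batch[k]) for k in shared}
    return {
        "compared": len(shared),
        "mismatched": [k for k in shared if diffs[k] > abs_tol],
        "max_abs_diff": max(diffs.values(), default=0.0),
        "only_in_live": sorted(live.keys() - batch.keys()),
        "only_in_batch": sorted(batch.keys() - live.keys()),
    }


# Assumed example data: record "b" holds values that are exact in 32 bits,
# record "a" holds values that are not.
WEIGHTS = [0.1, 0.2, 0.3]
records = {"a": [0.1, 0.2, 0.3], "b": [1.0, 2.0, 4.0]}

# Live path keeps 64-bit features; batch path sees 32-bit features.
live_predictions = {k: score(v, WEIGHTS) for k, v in records.items()}
batch_predictions = {
    k: score([to_float32(x) for x in v], WEIGHTS) for k, v in records.items()
}

report = skew_report(live_predictions, batch_predictions)
print(report)
```

A report like this can be tracked as a reconciliation metric over time. The right tolerance depends on how sensitive downstream decisions are to small differences in the prediction.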

It is crucial that real-time and batch inference share the same data stores to minimize defects during the feature extraction process. Batch and real-time inference may have different requirements, and it is very common to use a feature store to address them separately: an offline store serves model training and batch inference, while an online store is used to look up missing features and build the feature set that is sent to an online model for prediction.
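The offline/online split can be sketched in a few lines. This is a toy in-memory illustration, not the API of any real feature store product: `FeatureStore`, `compute_features`, the feature names and the default value of `0.0` are all assumptions made for the example. The point it tries to show is that both stores are fed by one feature computation, and that the online path only fills in what the request did not supply.

```python
from dataclasses import dataclass, field

# Hypothetical feature order expected by the model.
FEATURE_NAMES = ("order_count", "avg_basket")


def compute_features(raw: dict) -> dict:
    """Single feature definition used to populate both stores."""
    orders = raw["orders"]
    count = len(orders)
    return {
        "order_count": float(count),
        "avg_basket": sum(orders) / count if count else 0.0,
    }


@dataclass
class FeatureStore:
    offline: dict = field(default_factory=dict)  # entity_id -> history of rows
    online: dict = field(default_factory=dict)   # entity_id -> latest row

    def ingest(self, entity_id: str, raw: dict) -> None:
        row = compute_features(raw)
        self.offline.setdefault(entity_id, []).append(row)
        self.online[entity_id] = row

    def batch_vectors(self) -> dict:
        """Latest feature vector per entity, for batch inference."""
        return {
            eid: [rows[-1][name] for name in FEATURE_NAMES]
            for eid, rows in self.offline.items()
        }

    def online_vector(self, entity_id: str, request_features: dict) -> list:
        """Fill features missing from the request using the online store."""
        stored = self.online.get(entity_id, {})
        merged = {**stored, **request_features}  # request values take priority
        return [merged.get(name, 0.0) for name in FEATURE_NAMES]


store = FeatureStore()
store.ingest("u1", {"orders": [10.0, 30.0]})

batch_vector = store.batch_vectors()["u1"]
live_vector = store.online_vector("u1", request_features={})
```

Because both vectors come from the same `compute_features` call, they match for the same entity unless the request deliberately overrides a feature.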

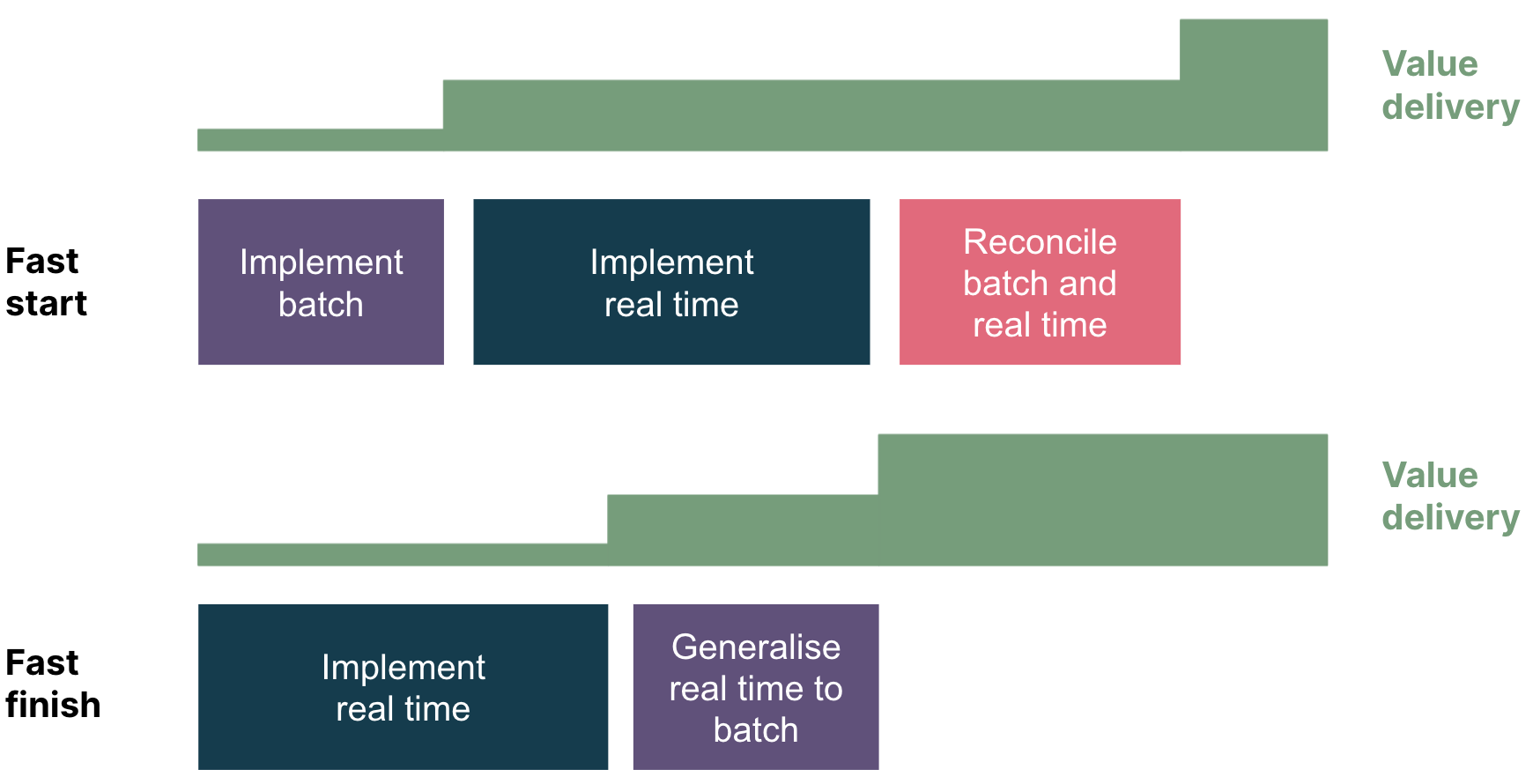

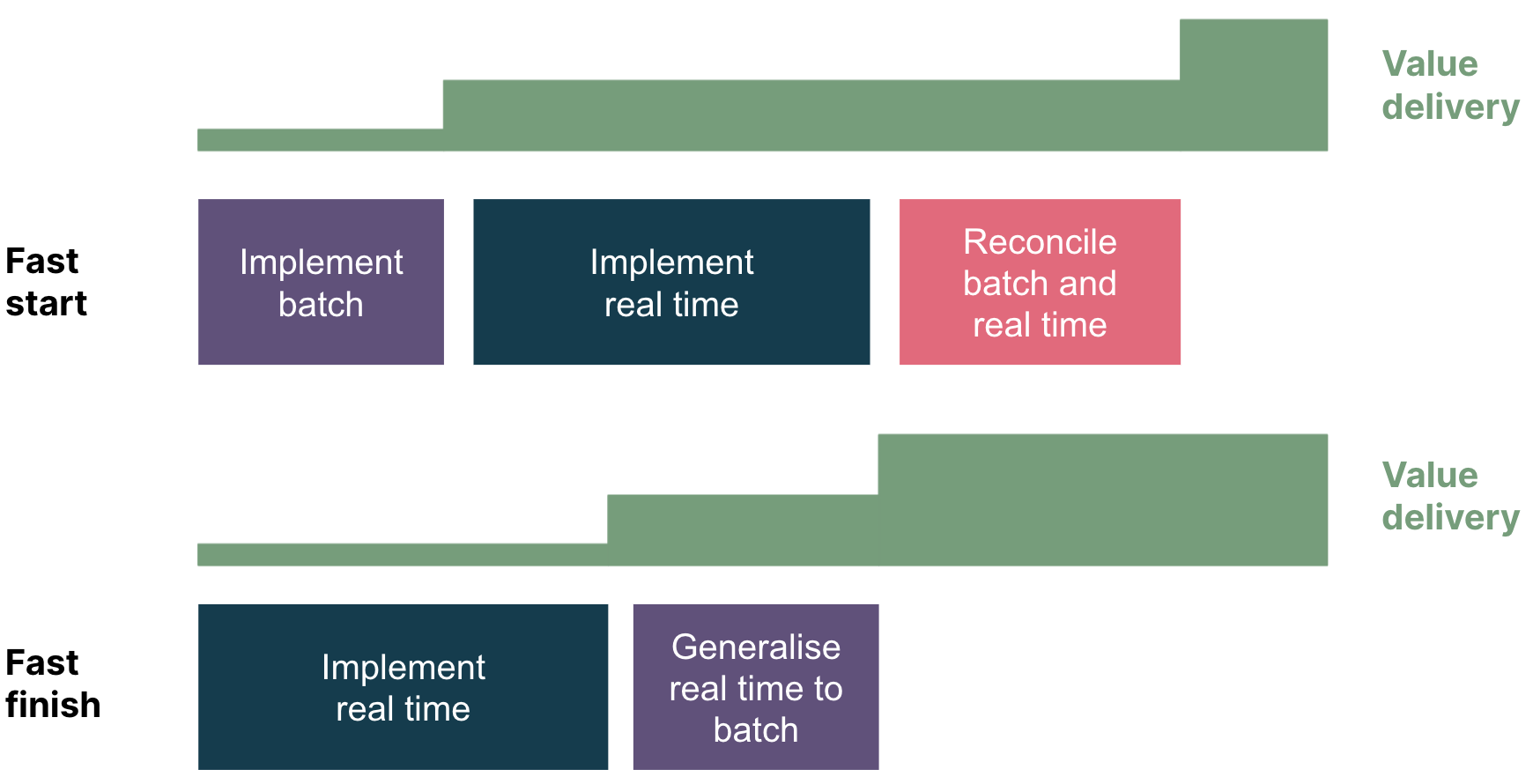

It may feel like an anti-pattern to start with real-time inference, as many machine learning models don’t have a real-time inference use case in their early stages, and batch may be simpler and more resource-efficient. However, a real-time-first approach can ensure that real-time and batch inference share the same input feature vector. Real-time inference is really just mini-batch inference with a batch size of 1, so for full-data batch inference we can simply increase the mini-batch size and scale out horizontally. This approach fast-tracks your development and testing process and removes a major source of live-batch inference skew.
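The "mini-batch of size 1" idea can be sketched as a single prediction function that both paths call. The linear model, its weights and the function names below are hypothetical stand-ins. The only design choice being illustrated is that `predict_one` and `predict_all` both delegate to `predict_batch`, so there is no second code path that could drift.

```python
from typing import Iterator, List, Sequence

# Hypothetical model weights, chosen only for the example.
WEIGHTS = (0.5, -0.25, 2.0)

Row = Sequence[float]


def predict_batch(rows: Sequence[Row]) -> List[float]:
    """The only place where predictions are computed."""
    return [sum(w * x for w, x in zip(WEIGHTS, row)) for row in rows]


def predict_one(row: Row) -> float:
    """Real-time path: a mini-batch of size 1."""
    return predict_batch([row])[0]


def chunked(rows: Sequence[Row], size: int) -> Iterator[Sequence[Row]]:
    """Split rows into consecutive mini-batches of at most `size` rows."""
    for start in range(0, len(rows), size):
        yield rows[start:start + size]


def predict_all(rows: Sequence[Row], batch_size: int = 1000) -> List[float]:
    """Batch path: same function, larger mini-batches."""
    predictions: List[float] = []
    for chunk in chunked(rows, batch_size):
        predictions.extend(predict_batch(chunk))
    return predictions


rows = [[1.0, 2.0, 3.0], [0.0, 4.0, 1.0], [2.0, 0.0, 0.5]]
batch_result = predict_all(rows, batch_size=2)
live_result = [predict_one(row) for row in rows]
```

In a real deployment each chunk could be handed to a separate worker to scale out horizontally. Here the chunks are processed sequentially to keep the sketch short.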

Comparing inference delivery sequences. Implementing batch alone first provides some value early but may delay the full release of batch and real-time inference due to difficulty reconciling. Implementing real-time first may take longer to first release but may be faster to deliver both real-time and batch.

Not every inference is derived from a single model. Many of the most useful and accurate predictions must combine multiple data sources, which can result in ensemble models that combine multiple predictions. Ensemble models, despite their good accuracy, make low latency harder to achieve. Issuing the real-time submodel requests asynchronously can be an effective way to reduce latency and increase throughput. However, as this significantly increases system complexity, it is critical to apply continuous delivery for machine learning (CD4ML) when implementing such systems, and to monitor application performance and reconciliation metrics along the way.
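As a rough sketch of asynchronous submodel requests, the example below fans out to three submodels concurrently with Python's `asyncio` and averages their scores. Everything model-specific is an assumption: the submodel names, their simulated delays and scores, the averaging rule and the timeout. `asyncio.sleep` stands in for a network call to a real model endpoint.

```python
import asyncio

# Hypothetical submodels: name -> (simulated latency in seconds, fixed score).
SUBMODELS = {
    "price_model": (0.03, 0.2),
    "demand_model": (0.05, 0.4),
    "risk_model": (0.04, 0.9),
}


async def call_submodel(name: str, features: dict) -> float:
    """Stand-in for a remote submodel request."""
    delay_s, score = SUBMODELS[name]
    await asyncio.sleep(delay_s)  # simulates network and inference time
    return score


async def ensemble_predict(features: dict, timeout_s: float = 1.0) -> float:
    """Request all submodels concurrently and average their scores."""
    calls = [call_submodel(name, features) for name in SUBMODELS]
    # gather runs the requests concurrently; wait_for bounds the total wait.
    scores = await asyncio.wait_for(asyncio.gather(*calls), timeout=timeout_s)
    return sum(scores) / len(scores)


prediction = asyncio.run(ensemble_predict({"basket_size": 3}))
print(prediction)
```

With concurrent requests the total wait is governed by the slowest submodel rather than the sum of all of them. The timeout makes a slow submodel fail the whole request, which is one of the behaviours worth monitoring in such a system.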

If you're interested in discussing how we could help you accelerate real-time inferences, or want to share your experiences on how you're tackling them, we'd love to hear from you!

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.