
Kubernetes: an exciting future for developers and infrastructure engineering

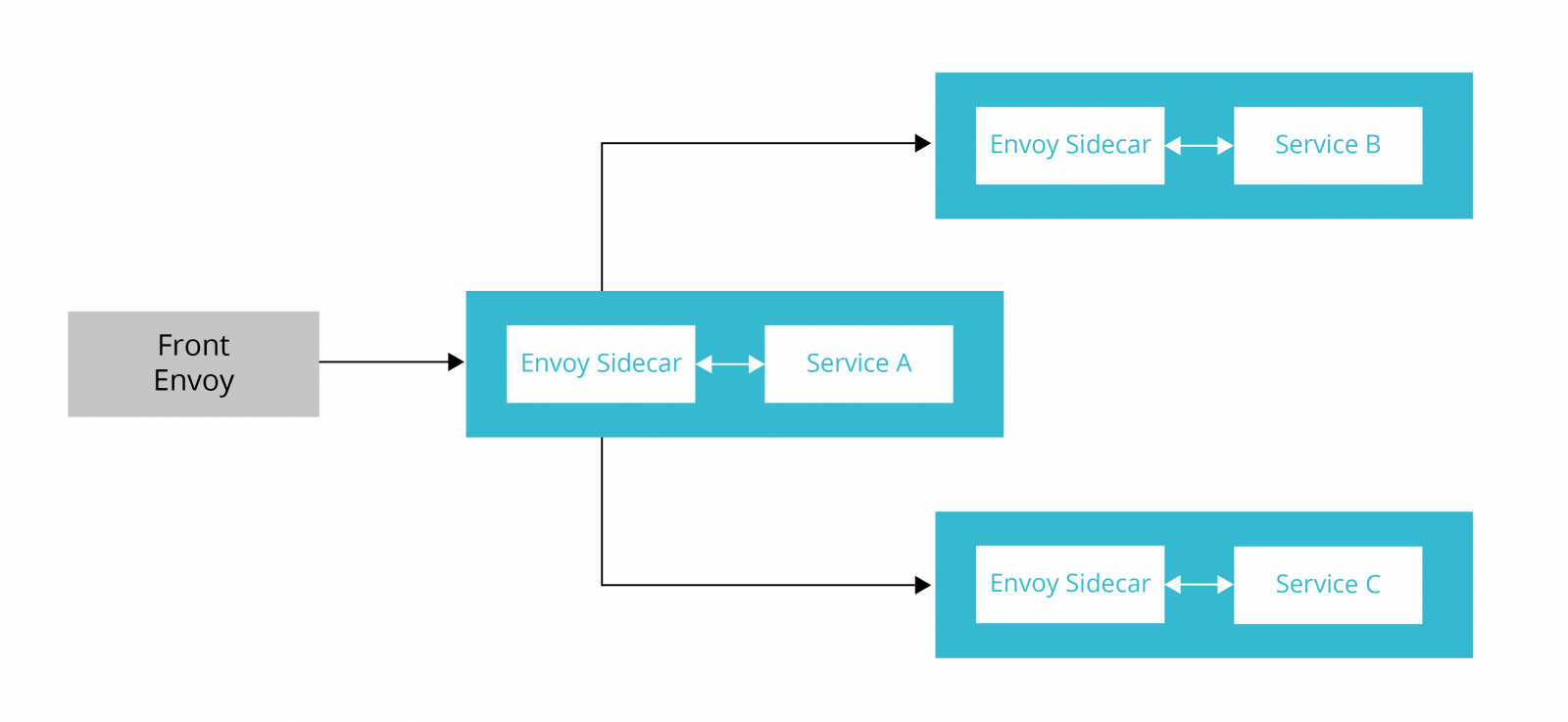

Services setup with sidecar proxies


```yaml
# Front proxy: accepts HTTP on port 80 and forwards every request to service A's sidecar
admin:
  access_log_path: "/tmp/admin_access.log"
  address:
    socket_address:
      address: "127.0.0.1"
      port_value: 9901
static_resources:
  listeners:
  - name: "http_listener"
    address:
      socket_address:
        address: "0.0.0.0"
        port_value: 80
    filter_chains:
    - filters:              # filter_chains is a list, so each chain starts with "-"
      - name: "envoy.http_connection_manager"
        config:
          stat_prefix: "ingress"
          route_config:
            name: "local_route"
            virtual_hosts:
            - name: "http-route"
              domains:
              - "*"
              routes:
              - match:
                  prefix: "/"
                route:
                  cluster: "service_a"
          http_filters:
          - name: "envoy.router"
  clusters:
  - name: "service_a"
    connect_timeout: "0.25s"
    type: "strict_dns"
    lb_policy: "ROUND_ROBIN"
    hosts:
    - socket_address:
        address: "service_a_envoy"
        port_value: 8786
```
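The front proxy's route table has one virtual host that matches any `Host` header (`domains: ["*"]`) and one route whose `prefix: "/"` matches every path, so all traffic lands on the `service_a` cluster. The Python sketch below models that selection logic: exact domains are preferred over the `*` catch-all, and routes are tried in order with the first match winning. The `Route`, `VirtualHost`, `select_vhost` and `pick_cluster` names are hypothetical illustrations, not Envoy APIs, and the sketch ignores Envoy's other match types (wildcard domain suffixes, exact paths, headers, regexes).

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Route:
    prefix: str   # like `match: {prefix: "/"}`
    cluster: str  # like `route: {cluster: "service_a"}`


@dataclass
class VirtualHost:
    domains: list[str]
    routes: list[Route]


def select_vhost(vhosts: list[VirtualHost], host: str) -> VirtualHost | None:
    # Exact domain matches win over the "*" catch-all (wildcard suffixes/prefixes omitted).
    for vh in vhosts:
        if host in vh.domains:
            return vh
    for vh in vhosts:
        if "*" in vh.domains:
            return vh
    return None


def pick_cluster(vhosts: list[VirtualHost], host: str, path: str) -> str | None:
    """Return the cluster of the first route whose prefix matches, or None (a 404 in Envoy)."""
    vh = select_vhost(vhosts, host)
    if vh is None:
        return None
    for route in vh.routes:  # routes are tried in order; the first match wins
        if path.startswith(route.prefix):
            return route.cluster
    return None


# Mirrors the front proxy's "local_route": any host, any path -> service_a.
front_proxy = [VirtualHost(domains=["*"], routes=[Route(prefix="/", cluster="service_a")])]
print(pick_cluster(front_proxy, "example.com", "/orders/42"))  # service_a
```

Because the only route uses the `/` prefix, adding more specific routes later means placing them before it in the list; otherwise the catch-all would shadow them.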

```yaml
# Service A sidecar: an ingress listener (8786) that forwards to service A,
# plus egress listeners that service A calls to reach service B (8788) and service C (8791)
admin:
  access_log_path: "/tmp/admin_access.log"
  address:
    socket_address:
      address: "127.0.0.1"
      port_value: 9901
static_resources:
  listeners:
  - name: "service-a-svc-http-listener"
    address:
      socket_address:
        address: "0.0.0.0"
        port_value: 8786
    filter_chains:
    - filters:
      - name: "envoy.http_connection_manager"
        config:
          stat_prefix: "ingress"
          codec_type: "AUTO"
          route_config:
            name: "service-a-svc-http-route"
            virtual_hosts:
            - name: "service-a-svc-http-route"
              domains:
              - "*"
              routes:
              - match:
                  prefix: "/"
                route:
                  cluster: "service_a"
          http_filters:
          - name: "envoy.router"
  - name: "service-b-svc-http-listener"
    address:
      socket_address:
        address: "0.0.0.0"
        port_value: 8788
    filter_chains:
    - filters:
      - name: "envoy.http_connection_manager"
        config:
          stat_prefix: "egress"
          codec_type: "AUTO"
          route_config:
            name: "service-b-svc-http-route"
            virtual_hosts:
            - name: "service-b-svc-http-route"
              domains:
              - "*"
              routes:
              - match:
                  prefix: "/"
                route:
                  cluster: "service_b"
          http_filters:
          - name: "envoy.router"
  - name: "service-c-svc-http-listener"
    address:
      socket_address:
        address: "0.0.0.0"
        port_value: 8791
    filter_chains:
    - filters:
      - name: "envoy.http_connection_manager"
        config:
          stat_prefix: "egress"
          codec_type: "AUTO"
          route_config:
            name: "service-c-svc-http-route"
            virtual_hosts:
            - name: "service-c-svc-http-route"
              domains:
              - "*"
              routes:
              - match:
                  prefix: "/"
                route:
                  cluster: "service_c"
          http_filters:
          - name: "envoy.router"
  clusters:
  - name: "service_a"
    connect_timeout: "0.25s"
    type: "strict_dns"
    lb_policy: "ROUND_ROBIN"
    hosts:
    - socket_address:
        address: "service_a"
        port_value: 8081
  - name: "service_b"
    connect_timeout: "0.25s"
    type: "strict_dns"
    lb_policy: "ROUND_ROBIN"
    hosts:
    - socket_address:
        address: "service_b_envoy"
        port_value: 8789
  - name: "service_c"
    connect_timeout: "0.25s"
    type: "strict_dns"
    lb_policy: "ROUND_ROBIN"
    hosts:
    - socket_address:
        address: "service_c_envoy"
        port_value: 8790
```
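Every cluster here uses `type: "strict_dns"` with `lb_policy: "ROUND_ROBIN"`. With strict DNS discovery, Envoy keeps resolving the hostname and treats each returned IP address as a separate upstream host; round robin then hands requests to those hosts in turn. The Python sketch below shows only the round-robin step, assuming a fixed list of already-resolved addresses. The `RoundRobin` class and the IP addresses are made up for illustration; real Envoy also re-resolves DNS, skips unhealthy hosts and honours weights.

```python
from __future__ import annotations

from itertools import cycle


class RoundRobin:
    """Hypothetical picker: returns resolved hosts in a fixed, repeating order."""

    def __init__(self, hosts: list[str]):
        if not hosts:
            raise ValueError("cluster has no hosts")
        self._next = cycle(hosts)

    def pick(self) -> str:
        return next(self._next)


# Pretend DNS for "service_b_envoy" returned two addresses (for example, two replicas).
lb = RoundRobin(["10.0.0.11:8789", "10.0.0.12:8789"])
picks = [lb.pick() for _ in range(4)]
print(picks)  # ['10.0.0.11:8789', '10.0.0.12:8789', '10.0.0.11:8789', '10.0.0.12:8789']
```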

```yaml
# Service B sidecar: an ingress listener on 8789 that forwards to service B on 8082
admin:
  access_log_path: "/tmp/admin_access.log"
  address:
    socket_address:
      address: "127.0.0.1"
      port_value: 9901
static_resources:
  listeners:
  - name: "service-b-svc-http-listener"
    address:
      socket_address:
        address: "0.0.0.0"
        port_value: 8789
    filter_chains:
    - filters:
      - name: "envoy.http_connection_manager"
        config:
          stat_prefix: "ingress"
          codec_type: "AUTO"
          route_config:
            name: "service-b-svc-http-route"
            virtual_hosts:
            - name: "service-b-svc-http-route"
              domains:
              - "*"
              routes:
              - match:
                  prefix: "/"
                route:
                  cluster: "service_b"
          http_filters:
          - name: "envoy.router"
  clusters:
  - name: "service_b"
    connect_timeout: "0.25s"
    type: "strict_dns"
    lb_policy: "ROUND_ROBIN"
    hosts:
    - socket_address:
        address: "service_b"
        port_value: 8082
```
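Taken together, the front proxy and the two sidecar configs form a chain of listener → cluster → host hops. The Python sketch below copies only the ports and hostnames from those configs into plain dictionaries and follows a request hop by hop, which is a quick way to check that every cluster points at a port that some Envoy actually listens on. The `PROXIES` layout, the `"front_proxy"` label and the `trace` helper are hypothetical; service C's sidecar config is not shown, so a trace towards service C stops at `service_c_envoy`.

```python
from __future__ import annotations

# Each Envoy instance: listener port -> cluster name, and cluster name -> (host, port).
# Values come from the configs above; "front_proxy" is just a label for the first Envoy.
PROXIES = {
    "front_proxy": {
        "listeners": {80: "service_a"},
        "clusters": {"service_a": ("service_a_envoy", 8786)},
    },
    "service_a_envoy": {
        "listeners": {8786: "service_a", 8788: "service_b", 8791: "service_c"},
        "clusters": {
            "service_a": ("service_a", 8081),
            "service_b": ("service_b_envoy", 8789),
            "service_c": ("service_c_envoy", 8790),
        },
    },
    "service_b_envoy": {
        "listeners": {8789: "service_b"},
        "clusters": {"service_b": ("service_b", 8082)},
    },
}


def trace(host: str, port: int, max_hops: int = 10) -> list[str]:
    """Follow listener -> cluster hops until reaching a host that is not a known proxy."""
    path = [f"{host}:{port}"]
    for _ in range(max_hops):
        proxy = PROXIES.get(host)
        if proxy is None:
            return path  # reached an application container (or an unmodelled proxy)
        cluster = proxy["listeners"].get(port)
        if cluster is None:
            raise LookupError(f"{host} has no listener on port {port}")
        host, port = proxy["clusters"][cluster]
        path.append(f"{host}:{port}")
    raise RuntimeError("too many hops; possible routing loop")


# A client request entering through the front proxy.
print(trace("front_proxy", 80))
# Service A calling service B through its own sidecar's egress listener.
print(trace("service_a_envoy", 8788))
```

The first call yields `front_proxy:80 → service_a_envoy:8786 → service_a:8081` and the second `service_a_envoy:8788 → service_b_envoy:8789 → service_b:8082`, matching the ingress/egress split in the `stat_prefix` values.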

```yaml
# Kubernetes Deployment for service A, with Envoy running as a sidecar in the same pod
apiVersion: apps/v1            # apps/v1beta1 is no longer served by current Kubernetes
kind: Deployment
metadata:
  name: servicea
spec:
  replicas: 2
  selector:                    # required by apps/v1; must match the pod template labels
    matchLabels:
      app: servicea
  template:
    metadata:
      labels:
        app: servicea
    spec:
      containers:
      - name: servicea
        image: dnivra26/servicea:0.6
        ports:
        - containerPort: 8081
          name: svc-port
          protocol: TCP
      - name: envoy
        # The Envoy configs and flags here target the v2 API, which current Envoy
        # releases no longer accept; pin a tag that still supports v2 rather than :latest.
        image: envoyproxy/envoy:latest
        ports:
        - containerPort: 9901
          protocol: TCP
          name: envoy-admin
        - containerPort: 8786
          protocol: TCP
          name: envoy-web
        volumeMounts:
        - name: envoy-config-volume
          mountPath: /etc/envoy-config/
        command: ["/usr/local/bin/envoy"]
        args: ["-c", "/etc/envoy-config/config.yaml", "--v2-config-only", "-l", "info", "--service-cluster", "servicea", "--service-node", "servicea", "--log-format", "[METADATA][%Y-%m-%d %T.%e][%t][%l][%n] %v"]
      volumes:
      - name: envoy-config-volume
        configMap:
          name: sidecar-config
          items:
          - key: envoy-config
            path: config.yaml
```
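Because `servicea` and `envoy` run in the same pod, they share one network namespace: they can reach each other over `localhost`, but two containers cannot both listen on the same port. The Python sketch below checks the container ports declared in this Deployment for collisions. It works on plain dictionaries transcribed from the manifest; the `find_port_conflicts` helper is hypothetical, and checking a real manifest file would need a YAML parser such as PyYAML.

```python
from __future__ import annotations

from collections import defaultdict

# Container ports as declared in the servicea Deployment.
containers = [
    {"name": "servicea", "ports": [{"containerPort": 8081, "protocol": "TCP"}]},
    {"name": "envoy", "ports": [
        {"containerPort": 9901, "protocol": "TCP"},
        {"containerPort": 8786, "protocol": "TCP"},
    ]},
]


def find_port_conflicts(containers: list[dict]) -> dict[tuple[int, str], list[str]]:
    """Map each (port, protocol) claimed by more than one container to those containers."""
    owners: dict[tuple[int, str], list[str]] = defaultdict(list)
    for c in containers:
        for p in c.get("ports", []):
            # Kubernetes defaults the protocol to TCP when it is omitted.
            owners[(p["containerPort"], p.get("protocol", "TCP"))].append(c["name"])
    return {key: names for key, names in owners.items() if len(names) > 1}


print(find_port_conflicts(containers))  # {}
```

Note that `containerPort` entries are informational in Kubernetes, so this only catches clashes among ports that are actually declared.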

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.