Are Test Coverage Metrics Overrated?

I know a developer whose anxiety rises disproportionately whenever his test coverage report shows less than 100% coverage. Whenever this happens, he fiddles with his tests until he achieves the glorious 100% statistic, after which he has earned not only bragging rights (shudder!) but also the approval of upper management. But let’s take a step back and examine this situation. Does a 100% test coverage result mean that we have achieved testing perfection? The answer may baffle you.

Test coverage (also referred to by some as code coverage) is one of many metrics commonly used to give a statistical representation of the state of the code written for a piece of software. Other typical metrics include[1]: cyclomatic complexity, lines of code, maintainability index and depth of inheritance. Each of these, I would argue, deserves a book of its own.

Test coverage, in particular, is a measure of the extent to which the code in question has been tested by a particular test suite[2].

The higher the test coverage, the greater the extent to which the code has been tested. This leads to the natural conclusion that higher is better. But how high?

To answer this question, let us examine the way tools that measure test coverage work:

Normally, the tool measuring test coverage monitors the code during a test suite run and checks whether each line of code is executed at least once. This is logical, since a line that is never executed during the test suite run is effectively untested.

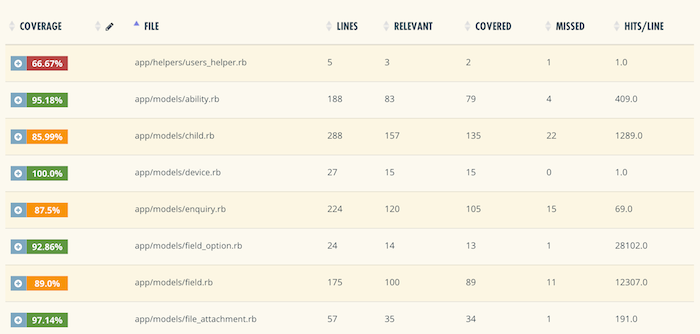

Typically, the tool then presents a report listing the files in the codebase and the test coverage discovered for each, based on the proportion of relevant lines executed during the test run (see the example report from Coveralls).
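The monitor-then-report loop described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not how Coveralls or any other tool is actually implemented: it uses the standard library's `sys.settrace` hook to record which lines of a single function execute, treats the lines that carry bytecode (found with `dis.findlinestarts`) as the "relevant" lines, and reports the percentage of those lines executed. The `classify` function and the helper names are hypothetical.

```python
import dis
import sys


# Hypothetical function under test: it has two distinct paths.
def classify(n):
    if n < 0:
        return "negative"
    return "non-negative"


def relevant_lines(func):
    """Lines in func's body that carry bytecode: a rough stand-in for 'executable lines'."""
    code = func.__code__
    starts = {line for _, line in dis.findlinestarts(code) if line is not None}
    starts.discard(code.co_firstlineno)  # ignore the 'def' line itself
    return starts


def executed_lines(func, *args):
    """Run func(*args) under a trace hook and collect the line numbers it executes."""
    code = func.__code__
    seen = set()

    def tracer(frame, event, arg):
        if frame.f_code is not code:
            return None  # do not trace any other function
        if event == "line":
            seen.add(frame.f_lineno)
        return tracer  # keep receiving line events for this frame

    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(None)
    return seen


def line_coverage(func, test_inputs):
    """Percentage of relevant lines executed across all test inputs."""
    relevant = relevant_lines(func)
    seen = set()
    for args in test_inputs:
        seen |= executed_lines(func, *args)
    return 100 * len(seen & relevant) / len(relevant)


print(f"{line_coverage(classify, [(5,)]):.1f}%")         # 66.7%
print(f"{line_coverage(classify, [(5,), (-1,)]):.1f}%")  # 100.0%
```

A single input that only takes the non-negative path never executes the `return "negative"` line, so the report shows roughly 67%; adding a negative input brings it to 100%. Real tools work across whole files and use more careful rules for deciding which lines count, but the principle is the same.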

Based on the above behaviour, it makes (some) sense to conclude that if all lines of code have been executed at least once, then the entire codebase has been tested.

I will admit that the above explanation is oversimplified: in reality, a number of coverage criteria, such as function, statement, branch and condition coverage, may be used to arrive at the final statistic. However, the key question remains: “If every line of code has been executed by the test suite, is the code perfectly tested?” In my opinion, the answer is a definite NO. Why? Because 100% test coverage on that premise means exactly what it says and nothing more: every line of code has been executed by the test suite at least once. This could even have been achieved without a single assertion!
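To make the gap between line coverage and branch coverage concrete, here is a small sketch, again assuming CPython's `sys.settrace` hook; `shipping_fee` and `trace_lines_and_arcs` are hypothetical names. Besides recording executed lines, it records each transition from one executed line to the next, similar in spirit to the arc-based approach coverage.py uses for branch measurement. A single test with a large order total executes every line, yet only one of the two outcomes of the `if` is ever taken.

```python
import sys


# Hypothetical function under test: free shipping for orders of 50 or more.
def shipping_fee(total):
    fee = 5
    if total >= 50:
        fee = 0
    return fee


def trace_lines_and_arcs(func, *args):
    """Run func(*args); return (executed lines, (from_line, to_line) transitions) inside func."""
    code = func.__code__
    lines, arcs = set(), set()
    previous = None

    def tracer(frame, event, arg):
        nonlocal previous
        if frame.f_code is not code:
            return None  # only trace the function under test
        if event == "line":
            if previous is not None:
                arcs.add((previous, frame.f_lineno))
            lines.add(frame.f_lineno)
            previous = frame.f_lineno
        return tracer

    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(None)
    return lines, arcs


first = shipping_fee.__code__.co_firstlineno
body_lines = {first + 1, first + 2, first + 3, first + 4}
if_line = first + 2
# The two possible outcomes of the 'if': jump into its body, or skip to 'return'.
branch_arcs = {(if_line, first + 3), (if_line, first + 4)}

# A single "test" with a large total: every line runs, but the False outcome never does.
lines, arcs = trace_lines_and_arcs(shipping_fee, 100)
line_pct = 100 * len(lines & body_lines) / len(body_lines)
branch_pct = 100 * len(arcs & branch_arcs) / len(branch_arcs)
print(f"line coverage: {line_pct:.0f}%, branch coverage: {branch_pct:.0f}%")
# line coverage: 100%, branch coverage: 50%
```

By the line-based measure this function is fully covered, even though the path where the standard fee is charged has never been exercised.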

What does this all mean then? There are a couple of things I would highlight:

- Firstly, high test coverage is not a sufficient measure of effective testing. Test coverage is more accurately a measure of the extent to which the code has not been tested: if our test coverage metric is low, we can be sure that significant portions of our code are untested. The converse, however, is not necessarily true. High test coverage alone does not show that our code has been adequately tested.

- Secondly, while relatively high test coverage (perhaps in the upper 80s or 90s) is likely to emerge naturally when proper testing is going on, 100% test coverage should not be a target we pursue unconditionally. There will always be a tradeoff between chasing 100% coverage and actually testing the code that matters. While it is possible to test ALL your code, the value of your tests is very likely to diminish as you approach this limit, given the tendency to write meaningless tests just to satisfy the coverage requirement.
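The point about assertion-free tests is easy to demonstrate. In this hypothetical example, `average` contains a deliberate off-by-one bug. Both checks execute every line of `average`, so a line coverage tool would report it as fully covered either way, but only the check that actually asserts something exposes the bug. The names are illustrative, and the checks are plain functions rather than the API of any particular test framework.

```python
# Hypothetical function: intended to return the arithmetic mean of values.
def average(values):
    total = 0
    for v in values:
        total += v
    return total / (len(values) + 1)  # deliberate bug: should divide by len(values)


def check_average_runs():
    # Executes every line of average() but verifies nothing, so it always passes.
    average([1, 2, 3])


def check_average_value():
    # Same input, but with an assertion: this exposes the bug.
    assert average([1, 2, 3]) == 2


for check in (check_average_runs, check_average_value):
    try:
        check()
        print(f"{check.__name__}: passed")
    except AssertionError:
        print(f"{check.__name__}: FAILED")
```

Running it prints `check_average_runs: passed` followed by `check_average_value: FAILED`. The coverage figure is identical in both cases; only the assertion says anything about correctness.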

So are test coverage metrics overrated? Yes! But only if you treat them as an objective, overall measure of the quality of the code you have written, rather than as one informative piece of a large, multifaceted puzzle.

In the words of Martin Fowler, test coverage is a useful tool for finding untested parts of a codebase. However, it is of little use as a numeric statement of how good your tests are.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.