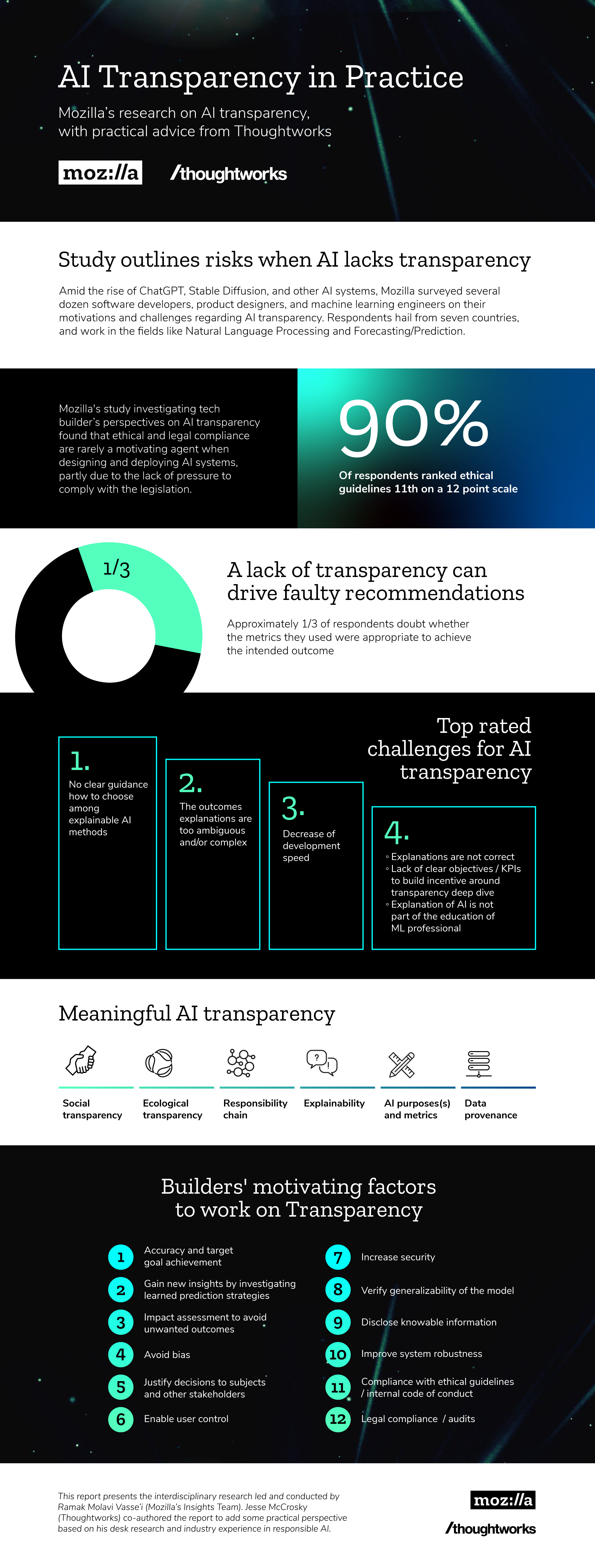

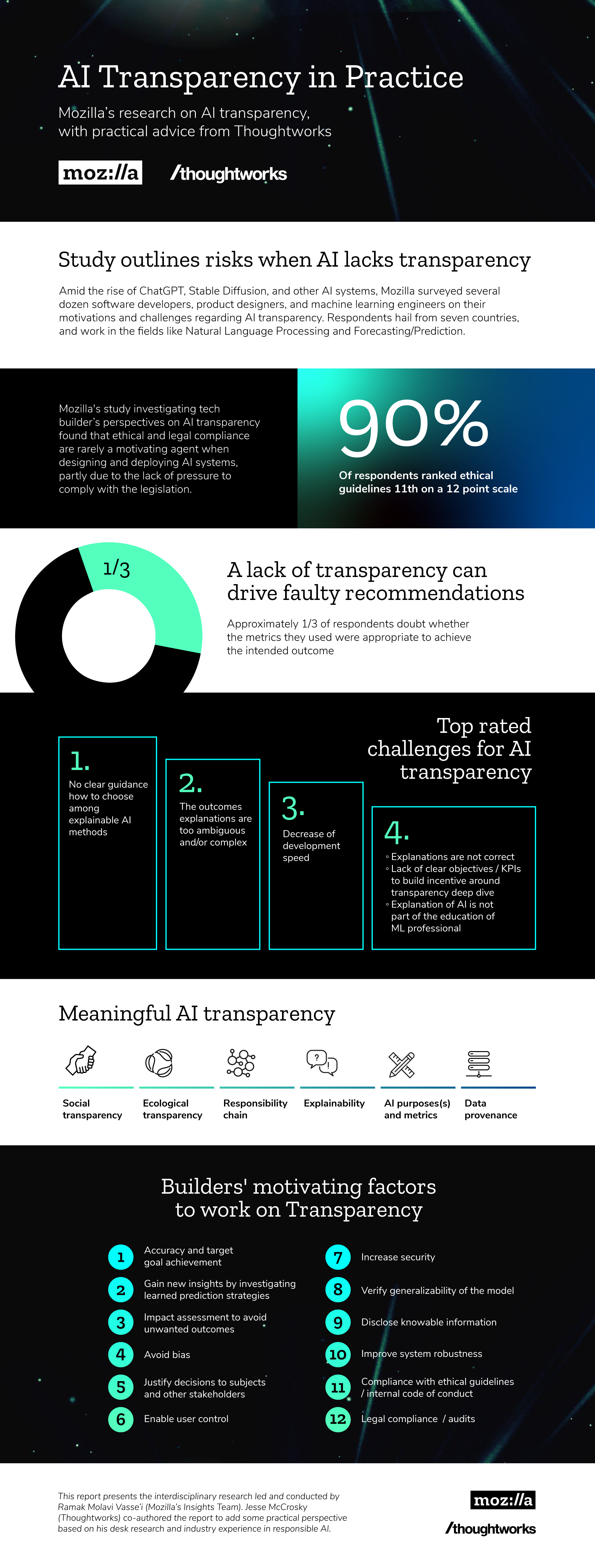

Do you know what the AI you just interacted with is truly designed to do? Is your AI solution really giving your teams the best recommendations? Is there unintended bias affecting the results? Does it matter?

A new study of tech builders, conducted by Ramak Molavi Vasse'i of Mozilla's Insights Team with contributions from us at Thoughtworks, makes it clear that transparency in AI does matter and that ignoring it carries very real risks. Below are some of the key learnings, along with an invitation to read the full report.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.