Test-driven development, or TDD, involves writing tests first and then writing the minimal code needed to pass them. TDD is an established practice for feature development that can improve code quality and test coverage. But what about non-functional requirements such as scalability, reliability, observability, and the other architectural “-ilities”? How do we ensure that features are operable and resilient when they go to production? How can we encourage teams to build in these architectural standards, just as TDD builds in code quality and test coverage?

Architecture standards evolve constantly. For example, today's compliance standards will likely differ a year from now, and those changes should be reflected in the gates to production deployment. In 2017, Neal Ford, Rebecca Parsons, and Pat Kua described architecture that supports change as evolutionary architecture:

“An evolutionary architecture supports guided, incremental change as the first principle across multiple dimensions.”

What are fitness functions?

How do we enable evolution? Architectural goals and constraints can change independently of functional expectations. A fitness function describes how close an architecture is to achieving an architectural aim. In test-driven development, we write tests to verify that features deliver the desired business outcomes. In fitness function-driven development, we also write tests that measure a system’s alignment with architectural goals.

Fitness function-driven development works with any application architecture, whether monolith, microservices, or something else. It introduces continuous feedback on architectural conformance and informs the development process as it happens, rather than after the fact. For example, logging is sometimes added as an afterthought and may not contain consistent or useful debugging information. A fitness function that checks for structured, sensible logging in new code during development helps provide the operability and debuggability that a production application requires.

Where to start

Think of architecture as a product with user journeys. Start by gathering input from stakeholders in business, compliance, operations, security, infrastructure, and application development to learn which architectural attributes they consider most important to the success of the business. These attributes often align with the architectural “-ilities.” After each team member jots down their top five or six attributes, group the results into common themes such as resilience, operability, or stability.

Examining these themes often uncovers potential conflicts or architectural tradeoffs. For example, goals for agility and flexibility may conflict with goals for stability and resiliency. Stability and agility are often diametrically opposed because of the way they are pursued. We pursue stability by controlling change and establishing gatekeepers to minimize the chance of failure. We enable innovation and agility by reducing barriers to change. Shifting the mindset to value mean time to recovery (MTTR) over mean time between failures (MTBF), and embracing constant change, is a challenge. Evaluating stakeholder motivation and holistically prioritizing the qualities that matter to the organization is the first step in defining architectural fitness functions. This initial exercise resembles a cross-functional requirements session whose outcome is the set of desired fitness functions.

After collecting the desired fitness functions, draft them in a testing framework. Ideally, each fitness function describes the intent of an “-ility” in terms of an objective metric that is meaningful to product teams or stakeholders. Monitoring critical qualities as fitness functions can help product teams avoid architectural drift and objectively measure technical debt. But where do these fitness functions reside, and how do they signal potential architectural drift? Creating the desired fitness functions and including them in the appropriate delivery pipelines shows that these metrics are an important part of enterprise architecture. Regular fitness function reviews can focus architectural efforts on meaningful, quantifiable outcomes. They also help maintain the system quality attributes required for production readiness.

As a result, every new service or piece of software is developed in a way that passes the fitness functions and supports the architectural qualities we value. Fitness function-driven development is a natural extension of continuous integration; gatekeepers are automated, so they don’t block the flow to production.
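An automated gate can be sketched in plain Ruby, with no test framework. The pipeline step below evaluates a list of fitness functions and reports which ones fail. `FitnessFunction`, `evaluate_gate`, and the sample measurements are hypothetical. In practice, each `measure` lambda would query a real coverage report, monitoring system, or scanner.

```ruby
# Hypothetical structure: a named metric, how to measure it, and the
# threshold it must satisfy (comparison is an operator symbol such as :< or :>).
FitnessFunction = Struct.new(:name, :measure, :threshold, :comparison) do
  # Returns [passed?, measured_value].
  def evaluate
    value = measure.call
    [value.public_send(comparison, threshold), value]
  end
end

# Evaluates every fitness function, prints a PASS/FAIL line for each,
# and returns the names of the functions that failed.
def evaluate_gate(functions)
  functions.each_with_object([]) do |fn, failures|
    passed, value = fn.evaluate
    puts format("%-4s %s (measured %s, required %s %s)",
                passed ? "PASS" : "FAIL", fn.name, value, fn.comparison, fn.threshold)
    failures << fn.name unless passed
  end
end

# Sample measurements stand in for real tooling (hypothetical values).
failures = evaluate_gate([
  FitnessFunction.new("test coverage", -> { 0.93 }, 0.9, :>),
  FitnessFunction.new("deployment error rate", -> { 0.02 }, 0.01, :<)
])
# A CI step would then fail the build: exit(1) unless failures.empty?
```

Because the gate only reports failures, the decision to block a promotion stays in the pipeline definition. Thresholds can then be tightened over time without changing the measurement code.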

To ensure that pipelines comply with an organization's continuous delivery standards, an initial check can confirm that key stages and gatekeepers are in place. For example, we can write a test to ensure that “all build jobs must export logs at the end of their run.” Additional checks can ensure that:

- Your code quality must be above 90% to be promoted to the next stage

- UAT versioning must not deviate more than two versions from production

- No secrets may be committed in plain text

- You must always have a security testing stage

- You must never deploy with another application's service account

- You must always have two approvers before production

And so on.

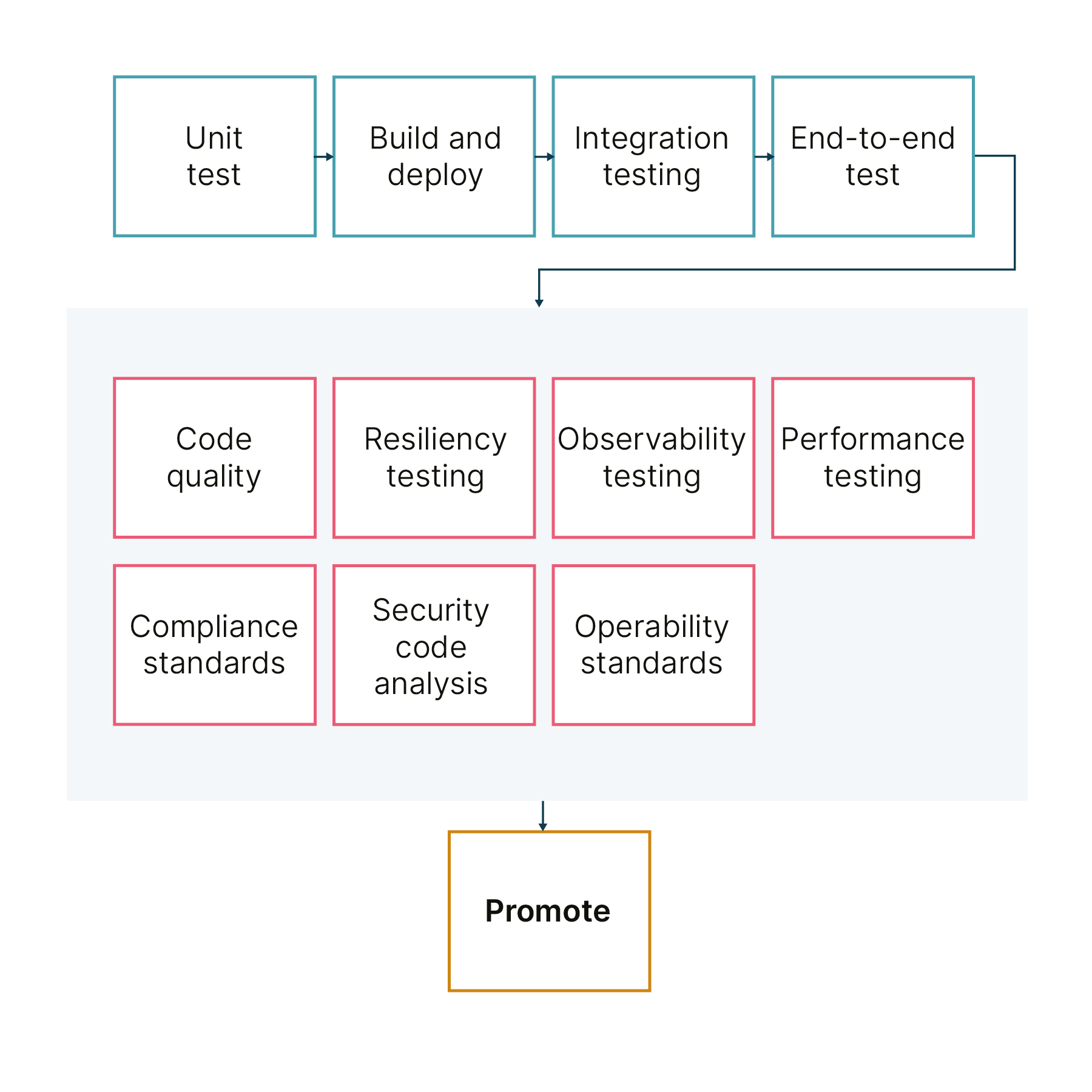

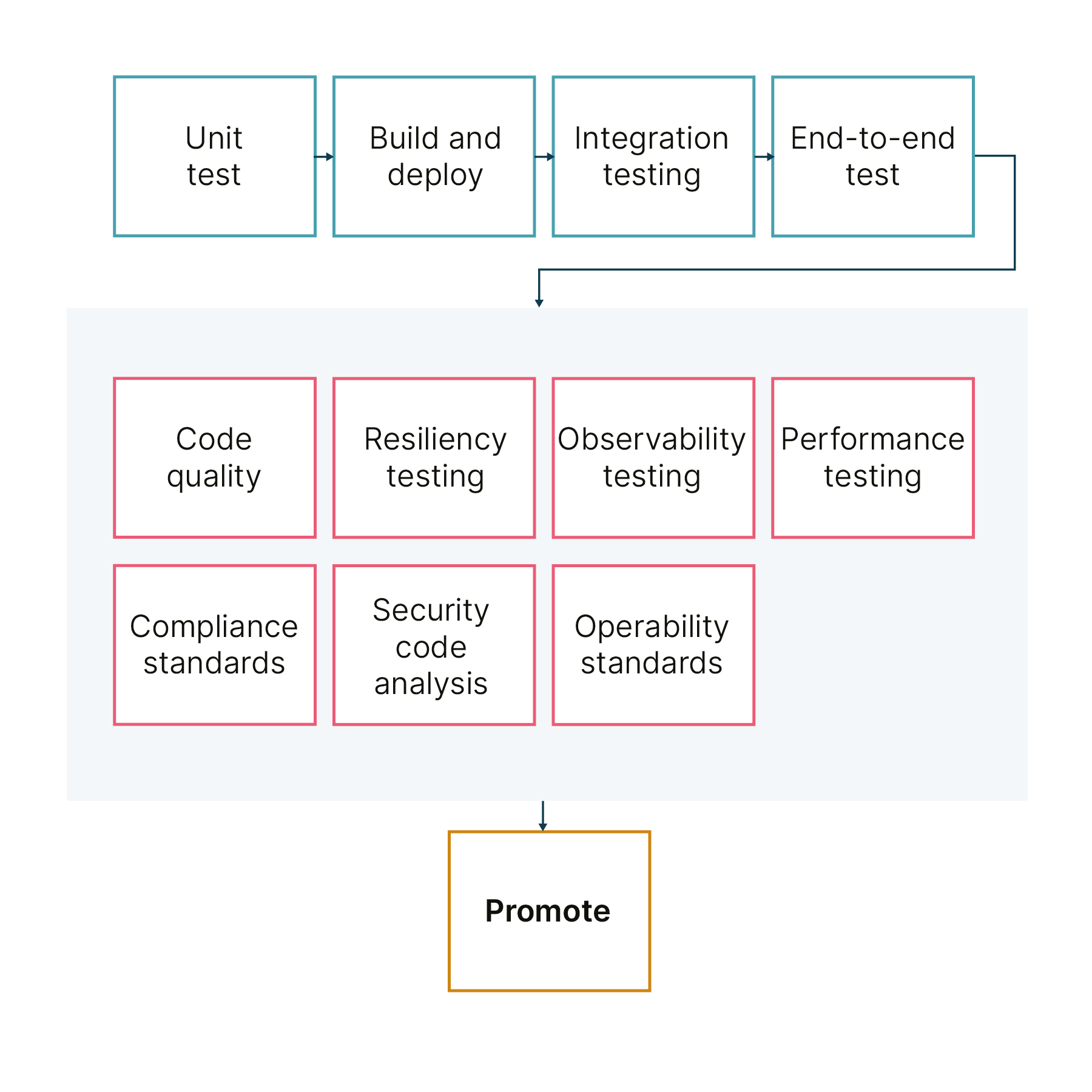
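Checks like these can be sketched against a machine-readable description of the pipeline. The Ruby below is a minimal sketch that assumes the pipeline has already been parsed into a hash. Several details are hypothetical: the hash layout, the `deploy-prod` stage name, and the convention that an application's service accounts are prefixed with its name. The sketch covers four of the rules listed above: exported logs, a security testing stage, two production approvers, and the application's own service account.

```ruby
# Hypothetical pipeline description; a real check would parse your CI
# system's pipeline definition instead.
PIPELINE = {
  app: "orders",
  stages: [
    { name: "build", exports_logs: true },
    { name: "security-scan", exports_logs: true },
    { name: "deploy-prod", exports_logs: true, approvers: 2, service_account: "orders-deployer" }
  ]
}.freeze

# Returns a list of human-readable violations (empty when compliant).
def pipeline_violations(pipeline)
  violations = []
  stages = pipeline[:stages]

  stages.each do |stage|
    violations << "#{stage[:name]} does not export logs" unless stage[:exports_logs]
  end
  unless stages.any? { |s| s[:name].include?("security") }
    violations << "no security testing stage"
  end

  prod = stages.find { |s| s[:name] == "deploy-prod" }
  if prod.nil?
    violations << "no production deployment stage"
  else
    violations << "fewer than two production approvers" if prod.fetch(:approvers, 0) < 2
    # Assumed naming convention: an app's service accounts start with "<app>-".
    unless prod[:service_account].to_s.start_with?("#{pipeline[:app]}-")
      violations << "deploys with another application's service account"
    end
  end
  violations
end

puts pipeline_violations(PIPELINE).inspect
```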

The approach of using build pipelines and fitness functions to monitor alignment with goals such as those above can be applied to general architectural goals. The sections below give examples of fitness functions that may align with an organization’s prioritized “-ilities.” Each sample expresses, in code, the intent and metrics of a potential architectural fitness function.

Code quality

Code quality metrics can approximate the maintainability of code. Adding these tests to the pipeline helps determine whether code is ready for production and establishes objective measures of code quality early in the development cycle.

This set of fitness functions can serve as gatekeepers that prevent unmaintainable or untested code from being promoted to production. The example below shows a fitness function that could be handed to a product team, who then write the code that makes it pass. This applies the test-driven development pattern to architectural fitness functions.

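```ruby
# `quality` is assumed to wrap your code-quality tooling (for example, a coverage
# report and a static-analysis service); it is not defined here.
RSpec.describe "Code Quality" do
  it "has test coverage above 90%" do
    expect(quality.get_test_coverage).to be > 0.9
  end

  it "has a maintainability rating of B or better (technical debt ratio of 10% or less)" do
    expect(quality.get_maintainability_rating).to be <= 0.1
  end
end
```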
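The `quality` helper in the code quality test is not defined. The Ruby below is a minimal sketch of what could sit behind it. It computes line coverage from data shaped like the legacy result of Ruby's built-in `Coverage` module (`{ file => [hit counts or nil] }`, where `nil` marks non-executable lines). It also computes a technical debt ratio. As we understand it, the ratio formula mirrors SonarQube's definition: remediation effort divided by estimated development effort. The 30-minutes-per-line default is an assumption to check against your own tool's configuration.

```ruby
# Line coverage from Coverage-style data: { "file.rb" => [1, 0, nil, 3] }.
# nil entries are non-executable lines and are ignored.
def line_coverage(result)
  counts = result.values.flatten.compact
  return 0.0 if counts.empty?

  counts.count(&:positive?).fdiv(counts.size)
end

# Technical debt ratio = remediation effort / estimated effort to write the code.
# minutes_per_line is an assumed default; align it with your analysis tool.
def technical_debt_ratio(remediation_minutes, lines_of_code, minutes_per_line: 30)
  remediation_minutes.fdiv(lines_of_code * minutes_per_line)
end

coverage = line_coverage({ "orders.rb" => [1, 0, nil, 3], "cart.rb" => [2, 2, nil] })
debt = technical_debt_ratio(300, 100) # 300 minutes of fixes for 100 lines
puts "coverage=#{coverage.round(2)} debt_ratio=#{debt.round(2)}"
```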

Resiliency

Tests can be added to the pipeline to ensure that applications remain available during deployment or failure. A resiliency fitness function might show developers which code changes are needed to improve fault tolerance or handle retries.

As an example, a test could run a continuous load against a service while a new version of that service is rolled out to a pre-production environment. The result of the test run could report the number of successful versus unsuccessful requests. This type of test could identify tuning opportunities for a rolling update scenario.

```ruby
# `new_deployment` and `network_tests` are assumed helpers; they are not defined here.
RSpec.describe "Resiliency" do
  describe "New Deployment" do
    it "has less than 1% error rate for new deployment" do
      expect(new_deployment.get_error_rate).to be < 0.01
    end
  end

  describe "Network Latency" do
    it "has less than 5% error rate even if there is network latency" do
      expect(network_tests.get_error_rate).to be < 0.05
    end

    it "completes a transaction under 10 seconds even if there is network latency" do
      expect(network_tests.get_transaction_time).to be < 10 # seconds
    end
  end
end
```
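The load-during-rollout test described in this section can be sketched minimally: send a stream of requests and tally successes against failures. The block passed to `tally_requests` is a hypothetical stand-in for a real request, such as an HTTP call to the service being rolled out. Exceptions such as timeouts count as failures. Running it alongside a rolling update produces the error rate that the `new_deployment` check asserts on.

```ruby
# Sends `count` requests through the supplied block and tallies outcomes.
# The block should return true for a successful request; any StandardError
# raised by the block (e.g. a timeout) is counted as a failure.
def tally_requests(count)
  results = { success: 0, failure: 0 }
  count.times do
    ok = begin
      yield
    rescue StandardError
      false
    end
    results[ok ? :success : :failure] += 1
  end
  results
end

# Fraction of requests that failed (0.0 when nothing was sent).
def error_rate(results)
  total = results[:success] + results[:failure]
  total.zero? ? 0.0 : results[:failure].fdiv(total)
end

# Simulated rollout: every 50th request times out (hypothetical behavior).
calls = 0
results = tally_requests(100) do
  calls += 1
  raise "timeout" if (calls % 50).zero?

  true
end
puts "#{results.inspect} error_rate=#{error_rate(results)}"
```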

Observability

To ensure that applications are monitored, shared libraries can implement fitness functions that check whether architectural standards for observability are met, including:

- Is the application logging, and are the logs consumed by the log aggregator?

- Are the application metrics consumed by a metrics service?

- Does the application have a health endpoint?

- Does the application log correlation or tracing IDs?

Fitness functions support the implementation of consistent logging, metrics, and tracing capabilities early in the development cycle. Additional observability tests can be added throughout the product lifecycle to confirm that custom metrics specific to the service are captured.

```ruby
# `service` is an assumed helper for the service under test; it is not defined here.
RSpec.describe "Observability" do
  it "streams metrics" do
    expect(service.has_metrics).to be(true)
  end

  it "has parseable logs" do
    expect(service.has_logs_in_aggregator).to be(true)
  end
end
```
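A "parseable logs" check could start as a sketch like the one below. It treats a log line as compliant when it is a JSON object containing a required set of fields, including a tracing ID. The field names in `REQUIRED_LOG_FIELDS` are an assumed schema, not a standard. Substitute your organization's logging conventions.

```ruby
require "json"

# Assumed log schema; replace with your organization's logging standard.
REQUIRED_LOG_FIELDS = %w[timestamp level message trace_id].freeze

# True when a log line is a JSON object containing every required field.
def structured_log_line?(line)
  entry = JSON.parse(line)
  entry.is_a?(Hash) && REQUIRED_LOG_FIELDS.all? { |field| entry.key?(field) }
rescue JSON::ParserError
  false
end

# Returns the lines that would fail a "has parseable logs" fitness function.
def noncompliant_log_lines(lines)
  lines.reject { |line| structured_log_line?(line) }
end

sample = [
  '{"timestamp":"2024-01-01T00:00:00Z","level":"info","message":"ok","trace_id":"abc123"}',
  "plain text log line",
  '{"level":"info"}'
]
puts noncompliant_log_lines(sample).inspect
```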

Performance

Performance testing is often done by a separate team before code reaches production. These tests extend delivery timelines, and the results are not always immediately visible to product developers. Writing automated performance tests and implementing them as fitness functions ensures that performance impacts are tested early and often, as part of the development process. Various tools and frameworks provide mechanisms to build tests and apply load in a variety of scenarios. Performance fitness functions can be standardized by business domain and expected usage. They can then run in an environment that mimics production, such as a user-acceptance testing or staging environment. With these fitness functions, teams can iterate on the scalability and stability of code under production-like workloads and address performance-related upstream impacts on business functions.

```ruby
# `transaction` is an assumed helper; it is not defined here.
RSpec.describe "Performance" do
  it "completes a transaction under 10 seconds" do
    expect(transaction.check_transaction_round_trip_time).to be < 10 # seconds
  end

  it "has less than 10% error rate for 10000 transactions" do
    expect(transaction.check_error_rate_for_transactions(10_000)).to be < 0.1
  end
end
```
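The helpers behind a performance fitness function might time each transaction with a monotonic clock and summarize the results. In the minimal sketch below, the block is a hypothetical stand-in for a real transaction. Reporting a nearest-rank percentile (such as p95) instead of a single round trip is our assumption. It tends to be more stable than one measurement, but the threshold and percentile are yours to choose.

```ruby
# Runs `count` transactions through the supplied block, timing each with a
# monotonic clock. The block should return true on success; exceptions count
# as errors.
def measure_transactions(count)
  raise ArgumentError, "count must be positive" unless count.positive?

  durations = []
  errors = 0
  count.times do
    start = Process.clock_gettime(Process::CLOCK_MONOTONIC)
    ok = begin
      yield
    rescue StandardError
      false
    end
    durations << Process.clock_gettime(Process::CLOCK_MONOTONIC) - start
    errors += 1 unless ok
  end
  { durations: durations, error_rate: errors.fdiv(count) }
end

# Nearest-rank percentile, e.g. percentile(durations, 95) for p95.
def percentile(values, pct)
  sorted = values.sort
  rank = (pct / 100.0 * sorted.size).ceil
  sorted[[rank, 1].max - 1]
end

stats = measure_transactions(20) { true } # stand-in transaction
p95 = percentile(stats[:durations], 95)
puts "p95=#{p95.round(6)}s error_rate=#{stats[:error_rate]}"
```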

Compliance

Standards for compliance constraints, whether regulatory, legal, or corporate, are often expressed in business- or industry-specific language. It’s therefore helpful to write compliance-related fitness functions with the help of a compliance and audit team. The example below illustrates the goals of fitness functions for compliance:

```ruby
# `logs` and `gdpr` are assumed helpers; they are not defined here.
RSpec.describe "Compliance Standards" do
  describe "PII Compliance" do
    it "should not have PII in the logs" do
      expect(logs.has_pii_content).not_to be(true)
    end
  end

  describe "GDPR Compliance" do
    it "should report types of personal information processed" do
      expect(gdpr.reports_pii_types).to be(true)
    end

    it "should have been audited in the past year" do
      expect(gdpr.audit_age).to be < 365 # days since the last audit
    end
  end
end
```
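A first cut at a `has_pii_content`-style check could scan log lines for recognizable PII patterns. The two patterns below, email addresses and US Social Security numbers in `NNN-NN-NNNN` form, are deliberately simple assumptions and far from a complete definition of PII. Your compliance and audit team should define the real list.

```ruby
# Deliberately simple patterns (assumptions, not a complete PII definition).
PII_PATTERNS = {
  email: /\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b/,
  us_ssn: /\b\d{3}-\d{2}-\d{4}\b/
}.freeze

# Returns [line_number, pattern_name] pairs for every suspected PII match.
def pii_findings(log_lines)
  log_lines.each_with_index.flat_map do |line, index|
    PII_PATTERNS.select { |_, pattern| line.match?(pattern) }
                .map { |name, _| [index + 1, name] }
  end
end

logs = ["user logged in", "contact jane@example.com", "ssn 123-45-6789"]
puts pii_findings(logs).inspect
```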

Security

Security is a primary concern, but developers often struggle to interpret security guidelines and translate them into enforcement. Also, when security guidelines are revised, it is difficult to know whether existing code is still compliant. Security fitness functions let developers build in security during development, rather than evaluating it after development and securing it later. Many security fitness functions are possible, and a common approach is code analysis for security. Static Application Security Testing (SAST) tools analyze source code for insecure patterns. Image and dependency scanning tools check operating systems, applications, and their dependencies against known vulnerability databases. The scan results can be analyzed automatically and used as an additional quality gate during the development cycle.

```ruby
# `code`, `libraries`, and `container` are assumed helpers; they are not defined here.
RSpec.describe "Security - Code Analysis" do
  describe "Code Analysis" do
    it "should use corporate-approved libraries only" do
      expect(code.only_uses_corporate_libraries).to be(true)
    end

    it "should not have any of the OWASP Top 10" do
      expect(code.has_owasp_top_10).not_to be(true)
    end

    it "should not have plaintext secrets in codebase" do
      expect(code.has_secrets_in_codebase).not_to be(true)
    end
  end

  describe "CVE Analysis" do
    it "should not use libraries with known vulnerabilities" do
      expect(libraries.have_no_cves).to be(true)
    end

    it "should not use a container image with known vulnerabilities" do
      expect(container.has_no_cves).to be(true)
    end
  end
end
```
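The "no plaintext secrets" check is usually delegated to a dedicated secret scanner. The sketch below only shows the shape of such a check. It uses two illustrative patterns: AWS access key IDs (20 characters beginning with `AKIA`) and quoted literals assigned to names such as `password` or `api_key`. Both patterns and the input format (a hash of path to source text) are assumptions for illustration.

```ruby
# Illustrative patterns only; real scanners use far larger rule sets.
SECRET_PATTERNS = {
  aws_access_key_id: /\bAKIA[0-9A-Z]{16}\b/,
  hardcoded_credential: /\b(?:password|passwd|secret|api_key)\s*[:=]\s*["'][^"']+["']/i
}.freeze

# Takes { path => source_text } and returns "path:line: rule" findings.
def secret_findings(sources)
  sources.flat_map do |path, source|
    source.each_line.with_index(1).flat_map do |line, lineno|
      SECRET_PATTERNS.select { |_, pattern| line.match?(pattern) }
                     .map { |name, _| "#{path}:#{lineno}: #{name}" }
    end
  end
end

sources = {
  "config.rb" => "region = 'us-east-1'\npassword = \"hunter2\"\n",
  "keys.rb" => "KEY = 'AKIAABCDEFGHIJKLMNOP'\n"
}
puts secret_findings(sources)
```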

Operability

Before a service goes into production, we need to ensure that it can be supported in the event of an incident or problem. Some organizations have an operations team separate from their product development teams. For them, designing code to pass operability fitness functions avoids a last-minute scramble to create documentation, ensures that tacit knowledge is transferred, and reduces the risk of introducing operational complexity. Operability fitness functions may focus on the understandability of logs, the presence of runbooks, or the communication of known issues with a service.

```ruby
# `service` is an assumed helper for the service under test; it is not defined here.
RSpec.describe "Operability Standards" do
  describe "Operations Check" do
    it "should have a service runbook" do
      expect(service.has_runbook).to be(true)
    end

    it "should have a README" do
      expect(service.has_readme).to be(true)
    end

    it "should have alerts" do
      expect(service.has_alerts).to be(true)
    end

    it "should have tracing IDs" do
      expect(service.has_tracing_ids).to be(true)
    end
  end
end
```
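Checks such as `has_runbook` and `has_readme` can often be answered from the service repository itself. The minimal sketch below reports which operability artifacts are missing from a repository checkout. The file locations in `REQUIRED_ARTIFACTS` are an assumed convention. Adjust them to wherever your teams keep runbooks and alert definitions.

```ruby
# Assumed conventions: each artifact passes if any candidate file exists.
REQUIRED_ARTIFACTS = {
  readme: %w[README.md README],
  runbook: %w[RUNBOOK.md docs/runbook.md],
  alerts: %w[monitoring/alerts.yml alerts.yml]
}.freeze

# Returns the artifact names (symbols) with no matching file under repo_root.
def missing_operability_artifacts(repo_root)
  REQUIRED_ARTIFACTS.reject do |_, candidates|
    candidates.any? { |relative| File.file?(File.join(repo_root, relative)) }
  end.keys
end

puts missing_operability_artifacts(Dir.pwd).inspect
```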

Conclusion

Architecture, like business capability and infrastructure, can be expressed in code through appropriate fitness functions, as the examples above demonstrate. What are the benefits of expressing architecture in code and driving development with fitness functions?

First, fitness function-driven development objectively measures technical debt and drives code quality. For example, technical debt can accumulate through poor or inconsistent code instrumentation, which complicates troubleshooting and log analysis. A fitness function that checks for consistent, parseable log structure helps teams avoid technical debt related to logging and observability. Fitness functions for security and operational standards enable teams to address these concerns early in the development cycle, rather than leaving them until problems occur. When updated fitness functions reflect changes to security or operational standards, teams learn of the changes promptly, can react quickly, and have objective tests to validate conformance. Fitness function-driven development can provide real-time, objective feedback against a set of standards and expectations for test coverage, code smells, and more.

Second, fitness function-driven development can inform coding choices for interfaces, events, and APIs related to downstream processes. We found this especially visible when applying the strangler pattern to legacy applications. While decoupling a portion of business logic, we uncovered an upstream compliance requirement from another vendor. It required confirmation that a specific communication had been sent to the customer when a transaction was processed. Had this compliance fitness function been known before development, we could have implemented a different interface with better testability, debuggability, and auditability to ensure that the requirement was met.

Fitness function-driven development communicates architectural standards as code and empowers development teams to deliver features that align with architectural goals. Just as users request feature changes, enterprise architects can request changes related to regulatory, technical, operations, or security concerns. Including these architectural concerns in build pipelines lets development teams build secure, compliant, operable services that keep pace with these changes. It also gives teams clarity about the “-ilities” that matter most to the organization.

With architecture goals expressed as code, conformance tests can be incorporated in build pipelines to monitor alignment with the architectural “-ilities” that are most critical. As the organization and technology evolve, these fitness functions can help organizations avoid organic architectural drift that often results in legacy or unnecessary complexity that constrains delivery of value.