The Thoughtworks Technology Radar is now in its 10th year, with the 22nd edition of the Radar coming soon. The Radar tries to highlight and give advice on languages, tools, platforms and techniques that are important in today’s IT industry. The Radar, however, is just a snapshot in time — we include ‘blips’ that are relevant for each publication, and often items that are still useful will fade from the Radar as we make room for more up-to-date information.

As we’re celebrating the first 10 years of the Radar, we surveyed Thoughtworkers to get their thoughts on the most enduring good advice from the Radar, regardless of when that advice actually appeared. Although we asked about all of the Radar quadrants, the “techniques” quadrant featured heavily in those responses. The underlying technologies and platforms might change over time, but the best techniques — the ‘how’ of software development — tend to remain stable over the long term. This article is an examination and explanation of these “enduring techniques” from the Radar.

A decade of the IT industry

It’s important to remember where (in time) the Radar comes from. Looking back at the first edition, we featured items such as Amazon EC2 and Google Wave. One of those survived, of course, and one did not, but the fact that we called out EC2 specifically, rather than taking all of Amazon’s services and labeling them “AWS”, gives you an idea of the state of the industry at that time. In the last 10 years of the Radar, a number of storylines have played out, and the techniques featured on the Radar are closely tied to those stories.

The rise of cloud

Probably the most obvious storyline over the life of the Radar has been the emergence and rise to dominance of cloud computing. When the Radar was first created, cloud was still in its infancy. Amazon’s “elastic compute cloud” was used as a way to survive sudden spikes in demand, such as a startup becoming popular or needing a short-term burst of capacity in case its product was featured on the Oprah Winfrey show (this is an actual use-case for a Thoughtworks project). But most IT departments operated on traditional infrastructure. VMware and virtualization had started to make inroads but most organizations would not even consider moving their applications, and especially their data, away from an in-house data centre. Fast-forward 10 years and while there’s still some FUD around use of cloud providers, even large financial organizations are entrusting their core data assets to the cloud. The fortunes of major companies including Microsoft now hinge on the success of their cloud offering. Cloud has come of age and in many organizations cloud is the default — if you want to do on-premise hosting you need an exception, not the other way around.

Agile goes mainstream

At the time of the first Radar, Agile software development was considered new, cutting edge, and definitely risky. Waterfall methodologies and big up-front design and planning were prevalent across the industry. Thankfully, the story of IT in the last decade is also the story of the rise of Agile as a mainstream development practice. Organizations have realized that working in shorter iterations and regularly “shipping” code (whether to a test environment or production) and allowing business users to change direction mid-stream leads to better software, happier end users, and more value created. Two key tenets of Agile — that you must shorten feedback loops and break down silos — have spread beyond the development team and started to impact the entire IT department and even the entire business, reshaping companies around how fast they can experiment and get data and feedback about what they’re doing right and wrong.

Automation unleashes cloud’s potential

In the early days of cloud, most organizations used on-premise hosting. Some had begun to move away from “on the metal” hosting through VMware or other virtualization technology, but even if the infrastructure was virtual, the processes surrounding the machines were very traditional. Operations teams provisioned servers slightly faster because they could click a button instead of waiting for physical hardware, but then manually installed and patched operating systems and software, submitted tickets to the network team to get an IP address, and handed off to the security team for them to do their work.

None of this worked very well when it came to cloud computing. Amazon’s Elastic Load Balancer (ELB) — the core technology that allowed you to survive being ‘Slashdotted’ — worked by spinning up new virtual machine instances based on disk images. Instances needed to auto-start, auto-configure, and join a set of workers based on a single “go” signal from the load balancer. This was one of the key drivers of the automation and DevOps movement — cloud was only about 10% as effective without strong automation, and there was a ceiling on the sheer number of virtual machines you could manage manually. At scale, companies like Netflix couldn’t afford to have the traditional ratio of one operator per 10 servers and needed to develop automation so that a single operator could manage hundreds or even thousands of virtual machines.

Continuous delivery and DevOps

With the rise of Agile as a mainstream methodology, automated testing also became mainstream. No longer was it acceptable to have a test “script” be a set of steps in an Excel sheet that a human needed to follow and sign off that software was ready for production. Successful Agile relies on a safety net of automated tests that can run end-to-end, testing in the small and the large, simulating user interactions and infrastructure failures, and certifying that the software does what it’s supposed to in a wide array of scenarios. Teams used continuous integration (CI) servers to build and test their software, and it wasn’t long before these techniques were extended to their natural conclusion: an automated “pipeline” from code change to production deployment, with teams able to put software into production at will, known as continuous delivery (CD).

To get CD to be really effective, we needed to break down one more traditional barrier: the development and operations divide.

Here, the DevOps cultural movement was at the forefront of getting developers to think more like operators, and operators to think more like developers. That’s not to say that development or operations are interchangeable or that a team shouldn’t contain people with specific expertise in those areas; DevOps is a cultural change to deliberately reverse the “throw it over the wall” mentality and blame culture that has existed for many years.

We might sound a bit pedantic here but it’s important to note that continuous integration, continuous delivery, and DevOps are all different things. If you use terms like “CI/CD” or “CD/DevOps team” it may mean you’re confused about what specifically you’re trying to achieve. The three are interrelated and support each other, but they are not interchangeable.

Transformation to the Lean business

Thoughtworks gets involved with a lot of “Agile transformation” initiatives. Our customers are often trying to transform their IT department, or “go Agile” or other variations on the theme. But to what end? What use is an IT department that can rapidly develop high quality software if that software turns out to be the wrong thing for the business? An overarching storyline especially of the last few years has been the rise of the “Lean” business. This is an organization with a laser focus on customer value throughout the whole company, with the ability to deliver software to production in small chunks, measure value, and then double down on experiments that are succeeding while killing off stuff that’s not. Whole books have been written on this topic, but the integration of technology and iterative approaches into the business world has been transformative and continues to this day.

Pulling it all together

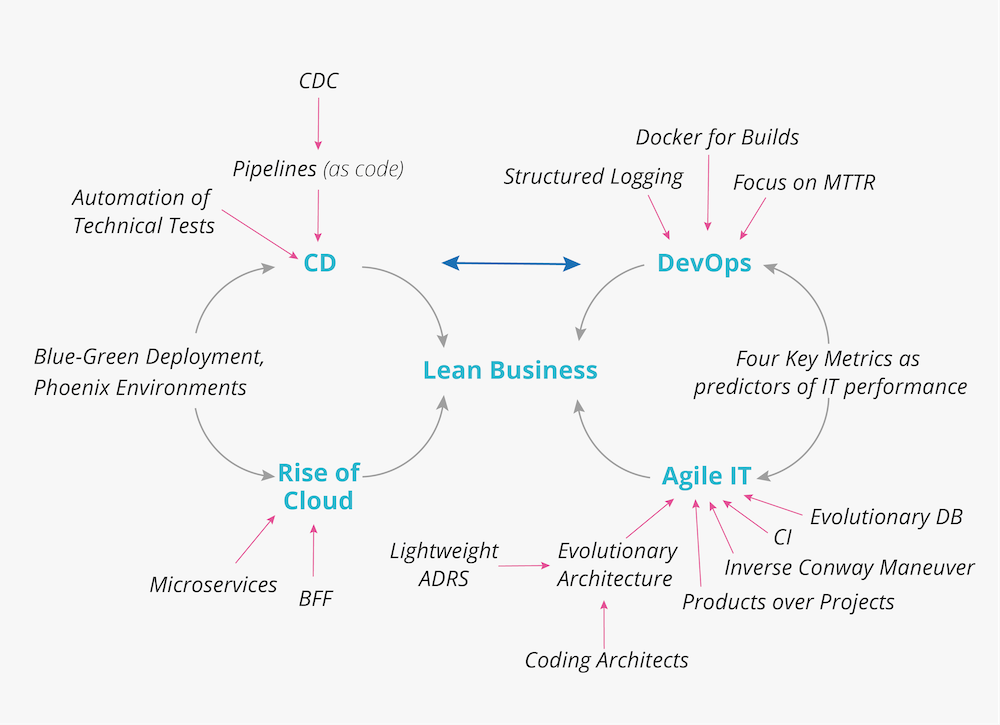

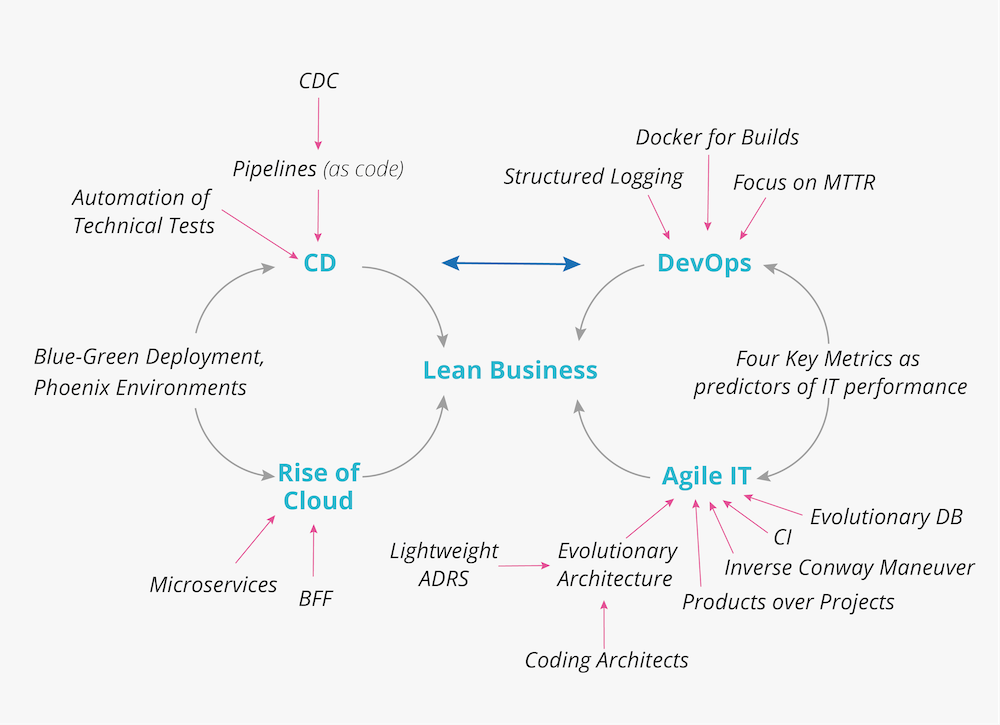

The last decade contains many storylines, but the ones we felt most important are the rise of cloud, the “mainstreaming” of Agile software development, the rise of automation, the invention of Continuous Delivery, and the introduction of DevOps culture. All these together make the Lean Business possible. The accompanying diagram attempts to show some of the major relationships between these storylines, as well as specific techniques from the Radar that made our “most recommended” list.

Agile IT

The core of Agile IT is of course Agile software delivery, and there’s a large amount of content on this from both Thoughtworks and the wider industry. From the Radar, specific techniques we think important include:

- Test at the appropriate level, incorporating unit, functional, acceptance and integration tests to build an effective test pyramid, rather than testing everything through UI testing which is often slow and brittle.

- Coding Architects who work with teams and actually write software, rather than existing as “the Architecture Department” in an ivory tower pontificating on the best ways to write software. This helps architects understand the full context of their recommendations and achieve their long-term technical vision.

- Lightweight Architecture Decision Records provide a useful paper-trail on major decisions without becoming yet another piece of documentation on a wiki that no-one reads.

- While code is malleable, the data storage layers are traditionally less so. Evolutionary database rejects the notion that a database schema is fixed and hard to change and applies refactoring techniques at the database level. This allows the DB to evolve in a similar way to code and avoids a schema that is mismatched to the application that uses it.

- Software architecture has often been about predicting the future, but anyone who witnessed the explosion of Docker, Kubernetes, or the JavaScript-eats-the-world phenomenon will realize that making predictions further than about six months out is very difficult. Evolutionary Architecture accepts this reality and instead focuses on creating systems that are amenable to change in the future. Creating architectural fitness functions to describe the ideal system characteristics is the engine that drives this overall technique.

- A significant trend across modern organizations, even beyond the IT department, is to treat assets such as software and services as products, rather than projects. This is a deep topic and a good place to start is Sriram Narayan’s overview article. A follow-on technique is to apply product management to internal platforms, and Evan Bottcher’s article has good detail on what this might mean for you.

- We’ve gained more understanding over the last few years about how Conway’s Law applies across an organization and how it affects the systems and structures that we build. But what if you don’t have the architecture you want? One strategy is to apply the Inverse Conway Maneuver, structure teams to reflect the systems you aspire to have, and let Conway’s Law restructure those systems for you.
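
To make the idea of an architectural fitness function a little more concrete, here is a minimal sketch in Python. It is an illustration rather than anything taken from the Radar: the layer names, the dependency map, and the `layering_violations` helper are all hypothetical, and a real project would derive the dependency map from its codebase instead of hard-coding it.

```python
# Hypothetical module-to-dependencies map. In a real project this would be
# extracted from the codebase by a build or static-analysis step.
DEPENDENCIES = {
    "web": {"services"},
    "services": {"domain"},
    "domain": set(),
}

# Hypothetical rule: layers are listed top to bottom, and a module may only
# depend on layers that appear after it in this list.
LAYER_ORDER = ["web", "services", "domain"]


def layering_violations(dependencies, layer_order):
    """Return (module, dependency) pairs that break the layering rule."""
    rank = {name: position for position, name in enumerate(layer_order)}
    violations = []
    for module, deps in dependencies.items():
        for dep in sorted(deps):
            # A dependency on the same layer or a higher one is a violation.
            if rank[dep] <= rank[module]:
                violations.append((module, dep))
    return violations


if __name__ == "__main__":
    problems = layering_violations(DEPENDENCIES, LAYER_ORDER)
    # A build could fail here, so that drift is caught on every commit.
    assert not problems, f"layering violations: {problems}"
```

Run as part of a build, a check like this turns one desired characteristic of the system into something that is verified continuously rather than documented once and forgotten.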

Continuous delivery

The premise of continuous integration was pretty radical 10 years ago — every change made by every software developer should be built and ‘integrated’ against all the other changes, all the time. Continuous delivery took this technique even further by saying that every change to the software should be deployable to production at any time. Jez Humble and David Farley wrote the canonical book on CD, and the approach has gained significant adoption.

- Continuous delivery centers around the concept of an automated deployment pipeline that takes changes from developer workstations through to production release. Pipeline configuration ideally should be source controlled and versioned, using pipelines as code.

- In order to achieve CD, a number of techniques need to come together:

  - Automate database deployment to ensure DB updates are correctly matched to the code.

  - Use Consumer-driven contract testing to allow many teams to collaborate without stalling each other or creating bottlenecks.

  - Decoupling deployment from release allows code to sit in production without being active, making release of a feature a software switch.

  - Continuous delivery for mobile devices allows us to apply CD techniques even for native applications.

- Phoenix Environments is a technique to deliberately tear down and destroy an entire environment (dev, test or even production) before recreating it from scratch. This helps ensure that the new environment is created exactly as described within an infrastructure-as-code framework and contains no unexpected hangover or misconfiguration.

- Blue-green deployment allows a team to roll out live upgrades to their software by first creating a clone of production configuration, deploying a new release to the clone, cutting over live traffic and then cleaning up the ‘old’ release.

- Managing build-time dependencies can be tricky. One technique to make it easier is to use Docker for builds, running the compilation step in an isolated environment.

- Many teams have mastered continuous delivery, where every change could be released at will. Continuous deployment goes one step further: every change that results in a passing build is also deployed automatically to production.
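
As an illustration of decoupling deployment from release, the sketch below shows a feature toggle in Python. It is a deliberately small example under stated assumptions: the toggle name `new_checkout`, the discount rule, and all three functions are hypothetical, and real systems usually read toggles from configuration or a toggle service instead of a plain dictionary.

```python
def legacy_checkout(cart):
    """Existing behaviour: the total is the plain sum of item prices in cents."""
    return sum(cart)


def new_checkout(cart):
    """New behaviour, deployed but inactive: 10% off totals of 10000 cents or more."""
    total = sum(cart)
    return total * 90 // 100 if total >= 10_000 else total


def checkout(cart, toggles):
    """Route to the new code path only when the (hypothetical) toggle is on."""
    if toggles.get("new_checkout", False):
        return new_checkout(cart)
    return legacy_checkout(cart)


if __name__ == "__main__":
    cart = [6_000, 5_000]
    print(checkout(cart, {"new_checkout": False}))  # legacy path
    print(checkout(cart, {"new_checkout": True}))   # released path
```

Both code paths ship in the same deployment. Releasing the feature means flipping the toggle, and rolling it back means flipping it again, with no redeploy in either direction.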

DevOps

Traditional software delivery faces distinct problems in the “last mile” of getting software out of development, into production and then running it operationally. The problems stem from entirely different teams doing development and operations with very different measures for their success, leading to a lot of friction and animosity between the two.

- DevOps is a cultural movement that tries to bring together the two sides and have developers who can think more like an operations person, and operations people who think a little more like developers. There are a lot of subtleties to the right way to do this, and several antipatterns in the industry (such as a “DevOps team”) but the key is to create more empathy between the two.

- The seminal book Accelerate identifies Four Key Metrics as identifiers of IT performance. Improving lead time, deployment frequency, change fail rate and mean time to recovery all directly improve IT performance, and these are generally the exact metrics improved by good DevOps adoption within an organization.

- Focus on Mean Time to Recovery is a specific instance of caring about the key metrics, and advice that we gave before Accelerate was released.

- Structured logging is a technique to improve the data we get from log files, by using a systematic approach to log messages.

- Automation of technical tests allows an organization to take ‘operational’ or cross-functional style testing such as failover and recovery, and automate that testing.

- Testing techniques for software are relatively well known and covered in the continuous delivery sections of this article. But organizations that are storing infrastructure configuration as code can test that infrastructure too: Pipelines for Infrastructure as Code is a technique that allows errors to be found before infrastructure changes are applied to production.
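
The four key metrics mentioned above can be approximated from deployment records. The following Python sketch is one way to do that, using simplified definitions: the `Deployment` record shape, its field names, and the sample data are assumptions made for illustration, and an organization's own definitions (for example, what counts as a failed change) may well differ.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False
    restored_at: Optional[datetime] = None


def four_key_metrics(deployments, period_days):
    """Compute simplified versions of the four key metrics for a period."""
    if not deployments:
        raise ValueError("at least one deployment is required")
    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    failures = [d for d in deployments if d.failed]
    recoveries = [d.restored_at - d.deployed_at
                  for d in failures if d.restored_at is not None]
    return {
        "deployment_frequency_per_day": len(deployments) / period_days,
        "mean_lead_time": sum(lead_times, timedelta()) / len(lead_times),
        "change_fail_rate": len(failures) / len(deployments),
        "mean_time_to_recovery": (
            sum(recoveries, timedelta()) / len(recoveries) if recoveries else None
        ),
    }


# Made-up sample data covering two days.
SAMPLE = [
    Deployment(datetime(2020, 1, 1, 9), datetime(2020, 1, 1, 11)),
    Deployment(datetime(2020, 1, 1, 12), datetime(2020, 1, 1, 16),
               failed=True, restored_at=datetime(2020, 1, 1, 17)),
    Deployment(datetime(2020, 1, 2, 9), datetime(2020, 1, 2, 10)),
    Deployment(datetime(2020, 1, 2, 10), datetime(2020, 1, 2, 11)),
]

if __name__ == "__main__":
    for name, value in four_key_metrics(SAMPLE, period_days=2).items():
        print(f"{name}: {value}")
```

Means are used here for brevity. A team that cares about outliers might prefer medians or percentiles for lead time and recovery time.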

Cloud

It’s clear that the future of infrastructure is in the cloud. Organizations generally cannot compete with the world-class operational ability of the major cloud vendors, all of whom are racing to provide ever more convenience and value for their customers. While effective use of cloud is a big topic, we think there are at least two pieces of enduring advice in the Radar.

- Microservices are the first “cloud native” architecture, allowing us to trade reduced development complexity of each component for higher operational complexity of the overall system. For microservices to be successful, they require cloud to make that tradeoff worth it. As with any popular architectural style there are many ways to misuse microservices, and we caution teams against microservice envy, but they are a reasonable architectural default for cloud.

- With multiple client devices and consuming systems, it can be tempting to try to create a single API that will work for all of them. But client needs vary, and BFF (Backend for Frontends) is an approach that instead creates a simple translation layer allowing many different kinds of clients to access an API efficiently.
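
A rough sketch of the BFF idea in Python follows. Everything in it is hypothetical: the `PRODUCT` payload stands in for the response of a general-purpose API, and `mobile_view` and `web_view` stand in for two separate backends, each shaping that response for one kind of client. In practice each BFF would be its own small service and not a pair of functions.

```python
# Hypothetical response from a general-purpose product API.
PRODUCT = {
    "id": 42,
    "name": "Espresso machine",
    "description": "A long marketing description that a small screen rarely needs.",
    "price_cents": 19900,
    "images": [
        {"url": "https://example.com/p/42/large.jpg", "width": 1600},
        {"url": "https://example.com/p/42/thumb.jpg", "width": 200},
    ],
    "ratings": [5, 4, 4],
}


def format_price(cents):
    """Format an integer number of cents without floating-point rounding."""
    return f"{cents // 100}.{cents % 100:02d}"


def mobile_view(product):
    """Mobile BFF: a compact payload with only the smallest image."""
    thumbnail = min(product["images"], key=lambda image: image["width"])
    return {
        "id": product["id"],
        "name": product["name"],
        "price": format_price(product["price_cents"]),
        "thumbnail": thumbnail["url"],
    }


def web_view(product):
    """Web BFF: a richer payload with description, all images and rating count."""
    return {
        "id": product["id"],
        "name": product["name"],
        "description": product["description"],
        "price": format_price(product["price_cents"]),
        "images": [image["url"] for image in product["images"]],
        "rating_count": len(product["ratings"]),
    }
```

The underlying API stays general, while each client gets a payload matched to its needs and can evolve on its own schedule.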

Security

Consumers and companies alike rely more and more on software systems, and in doing so create valuable, useful data troves. Unfortunately, security has often been an afterthought and implemented poorly, in part because the costs of security measures are paid up front but only a nebulous benefit (did we get hacked?) is ever obtained from those costs. Organizations that have been hacked or even deliberately allowed customer data to be used nefariously have suffered few long-term consequences. While this might sound like it’s all doom and gloom, the good news is that consumer awareness of security and privacy issues is on the rise and governments are creating legislation to better protect data. We advocate an approach to “build security in” to software products rather than treat it as an option or an afterthought. We think the following techniques are particularly important:

- Threat Modeling is a specific process that teams can follow when creating requirements for the software that they build, identifying exactly which threats are most important and worth defending against.

- Decoupling secret management from source code and using Secrets as a service helps to avoid the situation where a developer inadvertently adds credentials into (say) a GitHub repository, making them visible where they should not be.
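
The sketch below illustrates decoupling secrets from source code in Python. It is a simplification under stated assumptions: environment variables stand in for a dedicated secrets service, and the names `get_secret`, `MissingSecret`, `DB_PASSWORD`, and the connection string are all hypothetical. The point is only that the code names the secret it needs and never contains the value.

```python
import os


class MissingSecret(Exception):
    """Raised when a required secret has not been provided at runtime."""


def get_secret(name, store=None):
    """Look up a secret by name.

    The store defaults to the process environment, which here stands in for
    a real secrets service. Passing a dict makes the lookup easy to test.
    """
    store = os.environ if store is None else store
    try:
        return store[name]
    except KeyError:
        raise MissingSecret(f"secret {name!r} is not configured") from None


def database_url(store=None):
    """Build a connection string without hard-coding the password."""
    password = get_secret("DB_PASSWORD", store)
    return f"postgresql://app:{password}@db.internal/app"
```

Failing loudly when a secret is missing is a deliberate choice here: it is usually safer than falling back to a default credential that then ends up committed to the repository.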

Conclusion

It’s been a wild ride for software development over the last decade. Increasingly, technology is the business, and both technologists and business people must move fast to keep up. The techniques we’ve written about here can help, and we expect them to endure for at least some of the next decade too. We hope you continue to read and enjoy the Radar as we keep a finger on the pulse of what’s coming next.